Hyper-realistic virtual worlds have been heralded as the best driving schools for autonomous vehicles (AVs), since they’ve proven fruitful test beds for safely trying out dangerous driving scenarios. Tesla, Waymo, and other self-driving companies all rely heavily on data to enable expensive and proprietary photorealistic simulators, since testing and gathering nuanced I-almost-crashed data usually isn’t the easiest or most desirable thing to recreate.

To that end, scientists from MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL) created “VISTA 2.0,” a data-driven simulation engine where vehicles can learn to drive in the real world and recover from near-crash scenarios. What’s more, all of the code is being open-sourced to the public.

“Today, only companies have software like the type of simulation environments and capabilities of VISTA 2.0, and this software is proprietary. With this release, the research community will have access to a powerful new tool for accelerating the research and development of adaptive robust control for autonomous driving,” says MIT Professor and CSAIL Director Daniela Rus, senior author on a paper about the research.

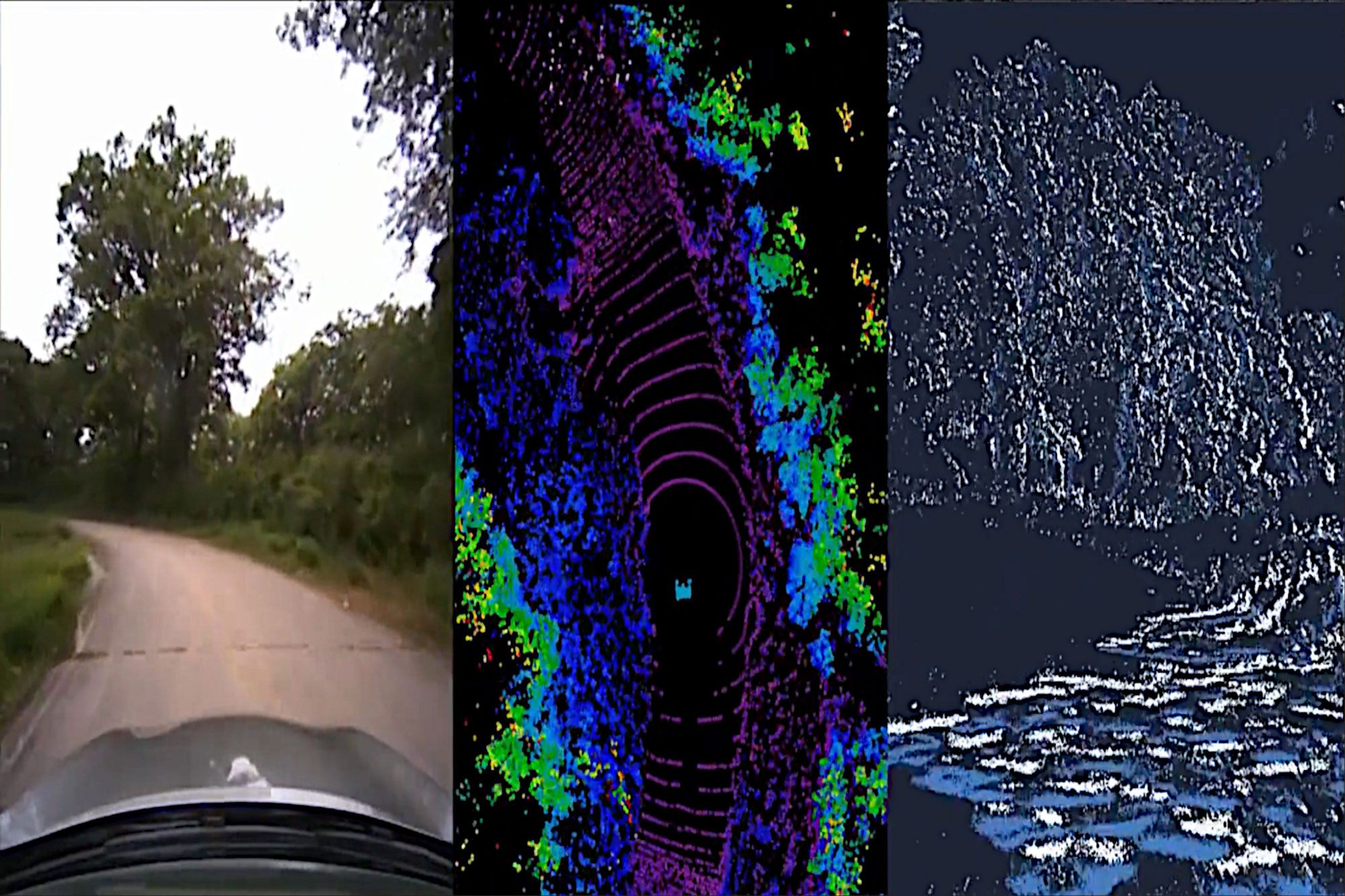

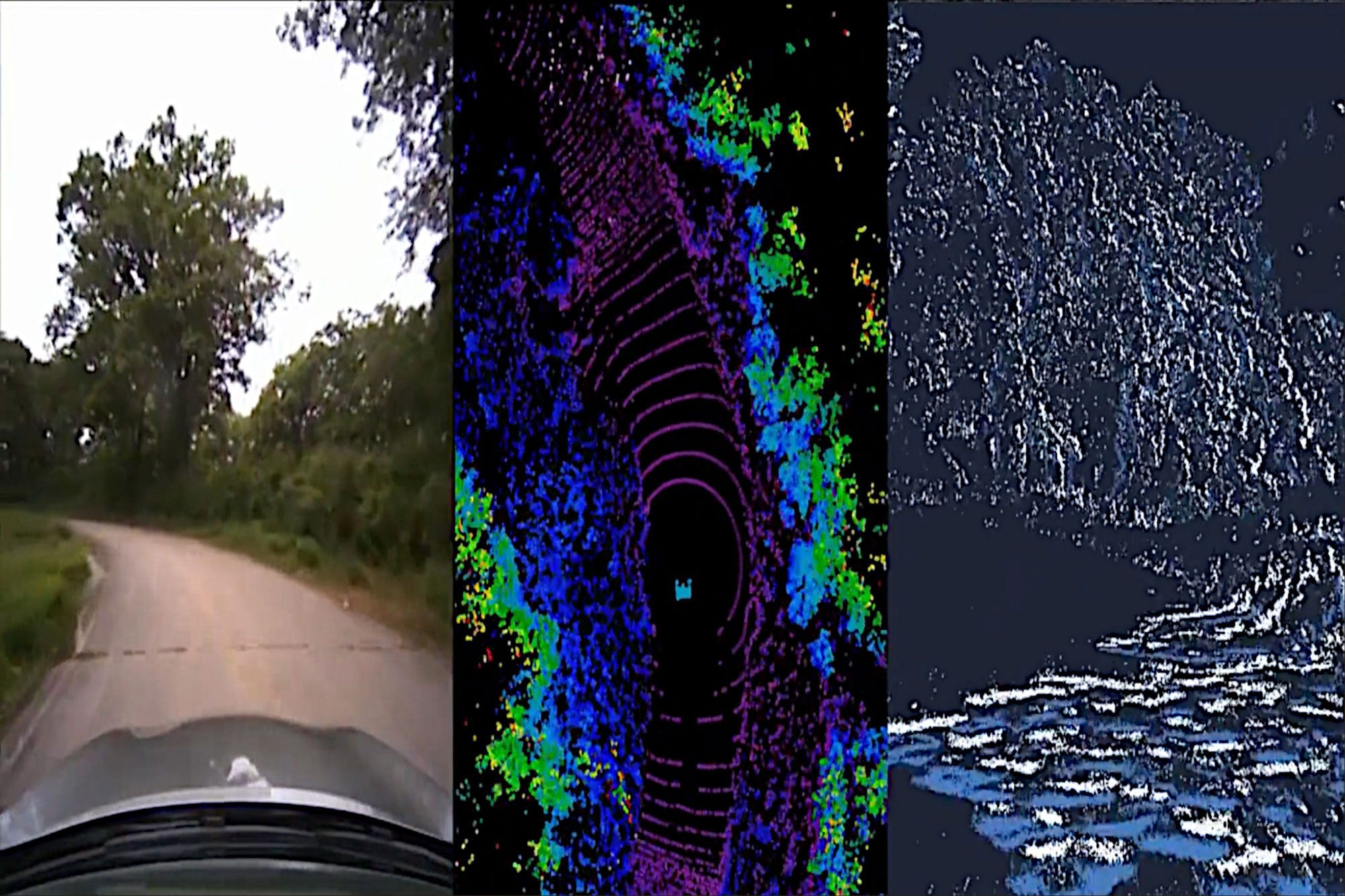

VISTA 2.0 builds on the team’s previous model, VISTA, and it’s fundamentally different from existing AV simulators because it’s data-driven: it was built and photorealistically rendered from real-world data, which enables direct transfer to reality. While the initial iteration supported only single-car lane-following with one camera sensor, achieving high-fidelity data-driven simulation required rethinking the foundations of how different sensors and behavioral interactions can be synthesized.

Enter VISTA 2.0: a data-driven system that can simulate complex sensor types and massively interactive scenarios and intersections at scale. With much less data than previous models, the team was able to train autonomous vehicles that could be substantially more robust than those trained on large amounts of real-world data.

“This is a massive jump in capabilities of data-driven simulation for autonomous vehicles, as well as the increase of scale and ability to handle greater driving complexity,” says Alexander Amini, CSAIL PhD student and co-lead author on two new papers, together with fellow PhD student Tsun-Hsuan Wang. “VISTA 2.0 demonstrates the ability to simulate sensor data far beyond 2D RGB cameras, but also extremely high-dimensional 3D lidars with millions of points, irregularly timed event-based cameras, and even interactive and dynamic scenarios with other vehicles as well.”

The team was able to scale the complexity of the interactive driving tasks for things like overtaking, following, and negotiating, including multiagent scenarios in highly photorealistic environments.

Training AI models for autonomous vehicles requires hard-to-secure data covering many kinds of edge cases and strange, dangerous scenarios, because most of our data (thankfully) is just run-of-the-mill, day-to-day driving. Logically, we can’t simply crash into other cars just to teach a neural network how not to crash into other cars.

Recently, there’s been a shift away from more classic, human-designed simulation environments to those built up from real-world data. The latter have immense photorealism, but the former can easily model virtual cameras and lidars. With this paradigm shift, a key question has emerged: Can the richness and complexity of all of the sensors that autonomous vehicles need, such as lidar and event-based cameras, which are sparser, be accurately synthesized?

Lidar sensor data is much harder to interpret in a data-driven world: you’re effectively trying to generate brand-new 3D point clouds with millions of points, only from sparse views of the world. To synthesize 3D lidar point clouds, the team took the data that the car collected, projected it into a 3D space derived from the lidar data, and then let a new virtual vehicle drive around locally from where the original vehicle was. Finally, they projected all of that sensory information back into the field of view of this new virtual vehicle, with the help of neural networks.
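The geometric core of that reprojection step can be sketched in a few lines. This is a simplified illustration under stated assumptions, not VISTA 2.0’s implementation. Points are `(x, y, z)` tuples in the original vehicle’s frame, with x forward and y to the left. The virtual vehicle differs from the original only by a planar offset `(dx, dy)` and a heading change `dyaw`. The neural-network step the researchers describe is replaced by a crude “keep the nearest point per beam” filter that mimics occlusion. The function names `to_new_frame` and `occlusion_filter` and the bin counts are hypothetical.

```python
import math
from typing import Dict, List, Tuple

Point = Tuple[float, float, float]  # (x forward, y left, z up), in meters


def to_new_frame(points: List[Point], dx: float, dy: float, dyaw: float) -> List[Point]:
    """Re-express points from the original vehicle's frame in the frame of a
    virtual vehicle placed at (dx, dy) with heading offset dyaw (radians)."""
    c, s = math.cos(dyaw), math.sin(dyaw)
    out = []
    for x, y, z in points:
        tx, ty = x - dx, y - dy  # translate so the virtual vehicle is the origin
        # rotate by -dyaw so the virtual vehicle's heading becomes the +x axis
        out.append((c * tx + s * ty, -s * tx + c * ty, z))
    return out


def occlusion_filter(points: List[Point], az_bins: int = 1024, el_bins: int = 64) -> List[Point]:
    """Keep only the nearest point along each (azimuth, elevation) beam.
    Hypothetical stand-in for occlusion reasoning; not VISTA's method."""
    nearest: Dict[Tuple[int, int], Tuple[float, Point]] = {}
    for p in points:
        x, y, z = p
        rng = math.sqrt(x * x + y * y + z * z)
        az = math.atan2(y, x)                 # azimuth in [-pi, pi]
        el = math.atan2(z, math.hypot(x, y))  # elevation in [-pi/2, pi/2]
        a = int((az + math.pi) / (2 * math.pi) * az_bins) % az_bins
        e = min(int((el + math.pi / 2) / math.pi * el_bins), el_bins - 1)
        if (a, e) not in nearest or rng < nearest[(a, e)][0]:
            nearest[(a, e)] = (rng, p)
    return [p for _, p in nearest.values()]


if __name__ == "__main__":
    original = [(10.0, 0.0, 0.0), (20.0, 0.0, 0.0), (4.0, 5.0, 0.0)]
    shifted = to_new_frame(original, dx=1.0, dy=0.0, dyaw=0.0)
    print(occlusion_filter(shifted))
```

In the usage example, the two points straight ahead fall on the same beam once the virtual vehicle moves forward by one meter, so only the closer one survives. A real system would also have to fill in surfaces the original sensor never saw, which is where learned models come in.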

Together with the simulation of event-based cameras, which operate at speeds greater than thousands of events per second, the simulator was capable of simulating this multimodal information all in real time. That makes it possible not only to train neural nets offline, but also to test them online on the car in augmented reality setups for safe evaluations. “The question of whether multisensor simulation at this scale of complexity and photorealism was possible in the realm of data-driven simulation was very much an open question,” says Amini.
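Event cameras report per-pixel changes in brightness rather than full frames. A common way to synthesize events from ordinary video, used by several open-source event-camera simulators, is to emit an event whenever a pixel’s log intensity changes by more than a contrast threshold. The sketch below illustrates that idea between two grayscale frames. It is not necessarily how VISTA 2.0 does it. The function name `frames_to_events`, the threshold of 0.2, the `eps` offset, and the linear timestamp interpolation are all illustrative assumptions.

```python
import math
from typing import List, Tuple

Event = Tuple[float, int, int, int]  # (timestamp, row, col, polarity)


def frames_to_events(prev: List[List[float]], curr: List[List[float]],
                     t0: float, t1: float,
                     threshold: float = 0.2, eps: float = 1e-3) -> List[Event]:
    """Emit one event per contrast-threshold crossing of log intensity
    between two grayscale frames captured at times t0 and t1."""
    events: List[Event] = []
    for r, (row_prev, row_curr) in enumerate(zip(prev, curr)):
        for c, (a, b) in enumerate(zip(row_prev, row_curr)):
            # eps avoids log(0) for fully dark pixels
            delta = math.log(b + eps) - math.log(a + eps)
            n = int(abs(delta) // threshold)  # number of threshold crossings
            polarity = 1 if delta > 0 else -1  # brighter (+1) or darker (-1)
            for k in range(1, n + 1):
                # assume brightness changes linearly in time between frames
                t = t0 + (t1 - t0) * (k * threshold) / abs(delta)
                events.append((t, r, c, polarity))
    events.sort()  # deliver events in time order, like a real sensor stream
    return events


if __name__ == "__main__":
    before = [[0.5, 0.5]]
    after = [[0.5, 2.0]]  # only the right-hand pixel gets brighter
    for ev in frames_to_events(before, after, t0=0.0, t1=0.01):
        print(ev)
```

Practical simulators usually first interpolate many intermediate frames so that event timestamps follow the actual motion rather than a straight line between two captures. This sketch skips that step for brevity.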

With that, the driving school becomes a party. In the simulation, you can move around, have different types of controllers, simulate different types of events, create interactive scenarios, and just drop in brand-new vehicles that weren’t even in the original data. They tested for lane following, lane turning, car following, and more dicey scenarios like static and dynamic overtaking (seeing obstacles and moving around so you don’t collide). With multi-agent support, both real and simulated agents interact, and new agents can be dropped into the scene and controlled any which way.

Taking their full-scale car out into the “wild” (a.k.a. Devens, Massachusetts), the team saw immediate transferability of results, with both failures and successes. They were also able to demonstrate the bodacious, magic word of self-driving car models: “robust.” They showed that AVs, trained entirely in VISTA 2.0, were so robust in the real world that they could handle that elusive tail of challenging failures.

Now, one guardrail humans rely on that can’t yet be simulated is human emotion. It’s the friendly wave, nod, or blinker switch of acknowledgement: the type of nuances the team wants to implement in future work.

“The central algorithm of this research is how we can take a dataset and build a completely synthetic world for learning and autonomy,” says Amini. “It’s a platform that I believe one day could extend in many different axes across robotics. Not just autonomous driving, but many areas that rely on vision and complex behaviors. We’re excited to release VISTA 2.0 to help enable the community to collect their own datasets and convert them into virtual worlds where they can directly simulate their own virtual autonomous vehicles, drive around these virtual terrains, train autonomous vehicles in these worlds, and then directly transfer them to full-sized, real self-driving cars.”

Amini and Wang wrote the paper alongside Zhijian Liu, MIT CSAIL PhD student; Igor Gilitschenski, assistant professor in computer science at the University of Toronto; Wilko Schwarting, AI research scientist and MIT CSAIL PhD ’20; Song Han, associate professor at MIT’s Department of Electrical Engineering and Computer Science; Sertac Karaman, associate professor of aeronautics and astronautics at MIT; and Daniela Rus, MIT professor and CSAIL director. The researchers presented the work at the IEEE International Conference on Robotics and Automation (ICRA) in Philadelphia.

This work was supported by the National Science Foundation and Toyota Research Institute. The team acknowledges the support of NVIDIA with the donation of the Drive AGX Pegasus.