In cooperative multi-agent reinforcement learning (MARL), due to their on-policy nature, policy gradient (PG) methods are typically believed to be less sample efficient than value decomposition (VD) methods, which are off-policy. However, some recent empirical studies demonstrate that with proper input representation and hyper-parameter tuning, multi-agent PG can achieve surprisingly strong performance compared to off-policy VD methods.

Why could PG methods work so well? In this post, we present a concrete analysis showing that in certain scenarios, e.g., environments with a highly multi-modal reward landscape, VD can be problematic and lead to undesired outcomes. By contrast, PG methods with individual policies can converge to an optimal policy in these cases. In addition, PG methods with auto-regressive (AR) policies can learn multi-modal policies.

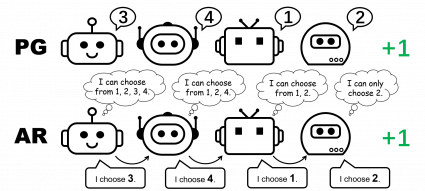

Figure 1: different policy representations for the 4-player permutation game.

CTDE in Cooperative MARL: VD and PG methods

Centralized training and decentralized execution (CTDE) is a popular framework in cooperative MARL. It leverages global information for more effective training while keeping the representation of individual policies for testing. CTDE can be implemented via value decomposition (VD) or policy gradient (PG), leading to two different types of algorithms.

VD methods learn local Q networks and a mixing function that mixes the local Q networks into a global Q function. The mixing function is usually enforced to satisfy the Individual-Global-Max (IGM) principle, which guarantees that the optimal joint action can be computed by greedily choosing the optimal action locally for each agent.
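To make IGM concrete, here is a minimal Python sketch that assumes the simplest mixing function, the VDN-style sum $Q_\text{tot} = \sum_i Q_i$. QMIX's monotonic mixing network is another common IGM-satisfying choice and is not shown. The sketch checks by brute force that each agent's local greedy action, taken jointly, matches the argmax of the mixed global Q-function. All function names are illustrative.

```python
import itertools
import random
from typing import Callable, Sequence, Tuple

QTables = Sequence[Sequence[float]]  # q_tables[i][a]: local Q-value of agent i for action a


def vdn_mix(local_qs: Sequence[float]) -> float:
    # Assumed VDN-style mixing: the global Q-value is the sum of local Q-values.
    return sum(local_qs)


def greedy_local_actions(q_tables: QTables) -> Tuple[int, ...]:
    # Decentralized execution: every agent takes the argmax of its own Q-function.
    return tuple(max(range(len(q)), key=q.__getitem__) for q in q_tables)


def joint_argmax(q_tables: QTables, mix: Callable[[Sequence[float]], float]) -> Tuple[int, ...]:
    # Centralized check: exhaustive search over all joint actions of Q_tot.
    ranges = [range(len(q)) for q in q_tables]
    return max(
        itertools.product(*ranges),
        key=lambda joint: mix([q[a] for q, a in zip(q_tables, joint)]),
    )


rng = random.Random(0)
for _ in range(100):
    # 3 agents with 4 actions each, random local Q-values (ties are practically impossible).
    q_tables = [[rng.uniform(-1.0, 1.0) for _ in range(4)] for _ in range(3)]
    assert greedy_local_actions(q_tables) == joint_argmax(q_tables, vdn_mix)
print("IGM holds for additive mixing on 100 random instances")
```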

By contrast, PG methods directly apply policy gradient to learn an individual policy and a centralized value function for each agent. For an accurate global value estimate, the value function takes as input either the global state (e.g., MAPPO) or the concatenation of all local observations (e.g., MADDPG).
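The following is a minimal sketch of how the CTDE inputs are split, assuming flat feature vectors. The actor sees only its local observation, while the critic sees either a global state or the concatenated observations. The helpers and observation values are hypothetical. MADDPG's actual centralized critic is a Q-function that also conditions on all agents' actions, which this sketch omits.

```python
from typing import List, Optional, Sequence


def actor_input(local_obs: Sequence[float]) -> List[float]:
    # Decentralized execution: agent i's policy only sees its own observation o^i.
    return list(local_obs)


def critic_input(
    all_obs: Sequence[Sequence[float]],
    global_state: Optional[Sequence[float]] = None,
) -> List[float]:
    # Centralized training: the value function may use global information.
    if global_state is not None:
        # MAPPO-style: condition the critic on the environment's global state.
        return list(global_state)
    # MADDPG-style: concatenate every agent's local observation.
    return [x for obs in all_obs for x in obs]


obs = [[0.1, 0.2], [0.3], [0.4, 0.5]]        # hypothetical local observations of 3 agents
print(actor_input(obs[1]))                    # [0.3]
print(critic_input(obs))                      # [0.1, 0.2, 0.3, 0.4, 0.5]
print(critic_input(obs, global_state=[1.0]))  # [1.0]
```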

The permutation game: a simple counterexample where VD fails

We start our analysis by considering a stateless cooperative game, namely the permutation game. In an $N$-player permutation game, each agent can output $N$ actions $\{1, \ldots, N\}$. Agents receive $+1$ reward if their actions are mutually different, i.e., the joint action is a permutation over $1, \ldots, N$; otherwise, they receive $0$ reward. Note that there are $N!$ symmetric optimal strategies in this game.
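The payoff is easy to check by enumeration. This sketch indexes actions from 0 instead of $1, \ldots, N$. It counts the optimal joint actions and confirms there are exactly $N!$ of them.

```python
import itertools
import math
from typing import Sequence


def permutation_reward(joint_action: Sequence[int]) -> int:
    # +1 if all agents chose mutually different actions, 0 otherwise.
    return 1 if len(set(joint_action)) == len(joint_action) else 0


def count_optimal_joint_actions(n: int) -> int:
    # Enumerate all n**n joint actions (actions are 0-indexed here).
    return sum(permutation_reward(joint) for joint in itertools.product(range(n), repeat=n))


for n in range(2, 5):
    optimal = count_optimal_joint_actions(n)
    assert optimal == math.factorial(n)
    print(f"N={n}: {optimal} optimal joint actions out of {n ** n}")
```

For the 4-player game in Figure 2, it reports 24 optimal joint actions out of 256.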

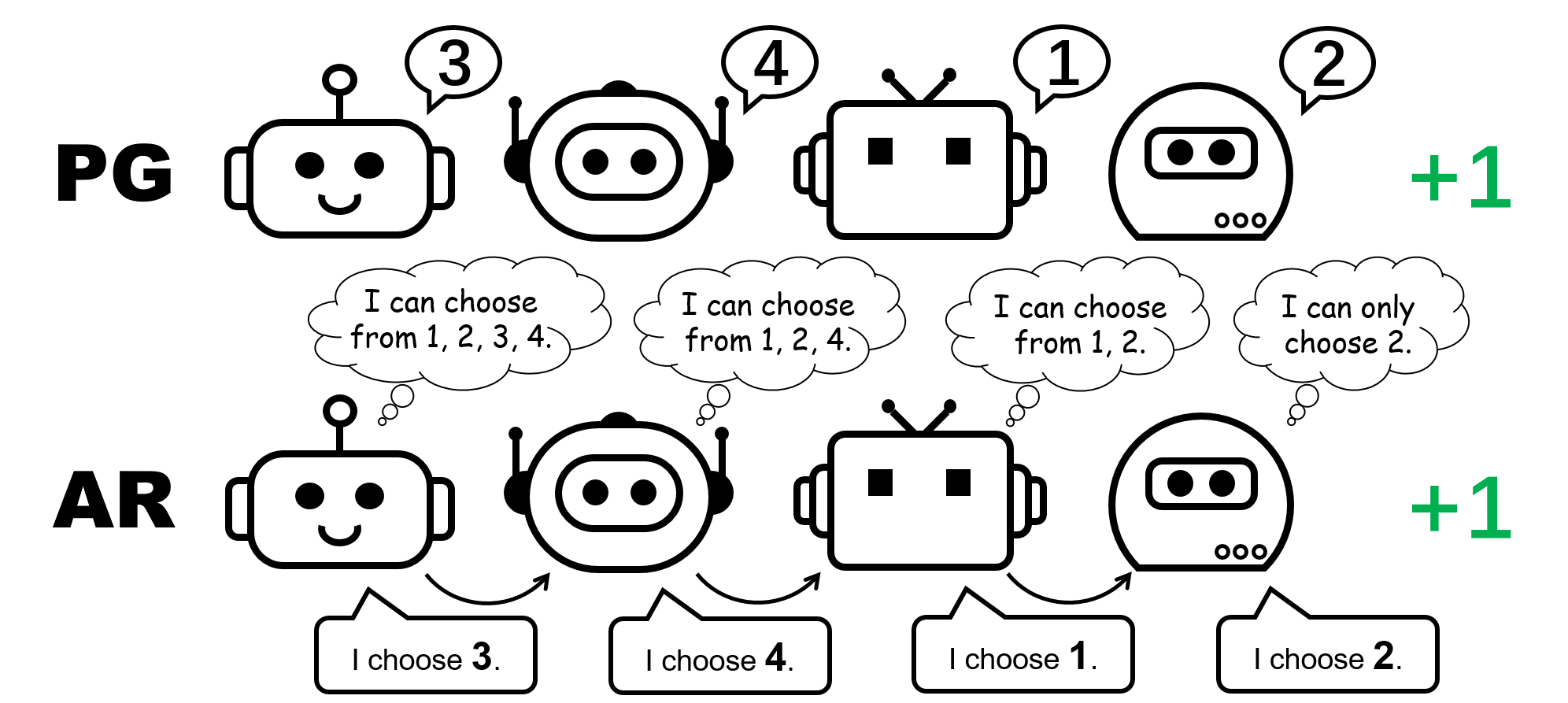

Figure 2: the 4-player permutation game.

Let us focus on the 2-player permutation game for our discussion. In this setting, if we apply VD to the game, the global Q-value factorizes to

$$Q_\text{tot}(a^1, a^2) = f_\text{mix}\big(Q_1(a^1), Q_2(a^2)\big),$$

where $Q_1$ and $Q_2$ are local Q-functions, $Q_\text{tot}$ is the global Q-function, and $f_\text{mix}$ is the mixing function that, as required by VD methods, satisfies the IGM principle.

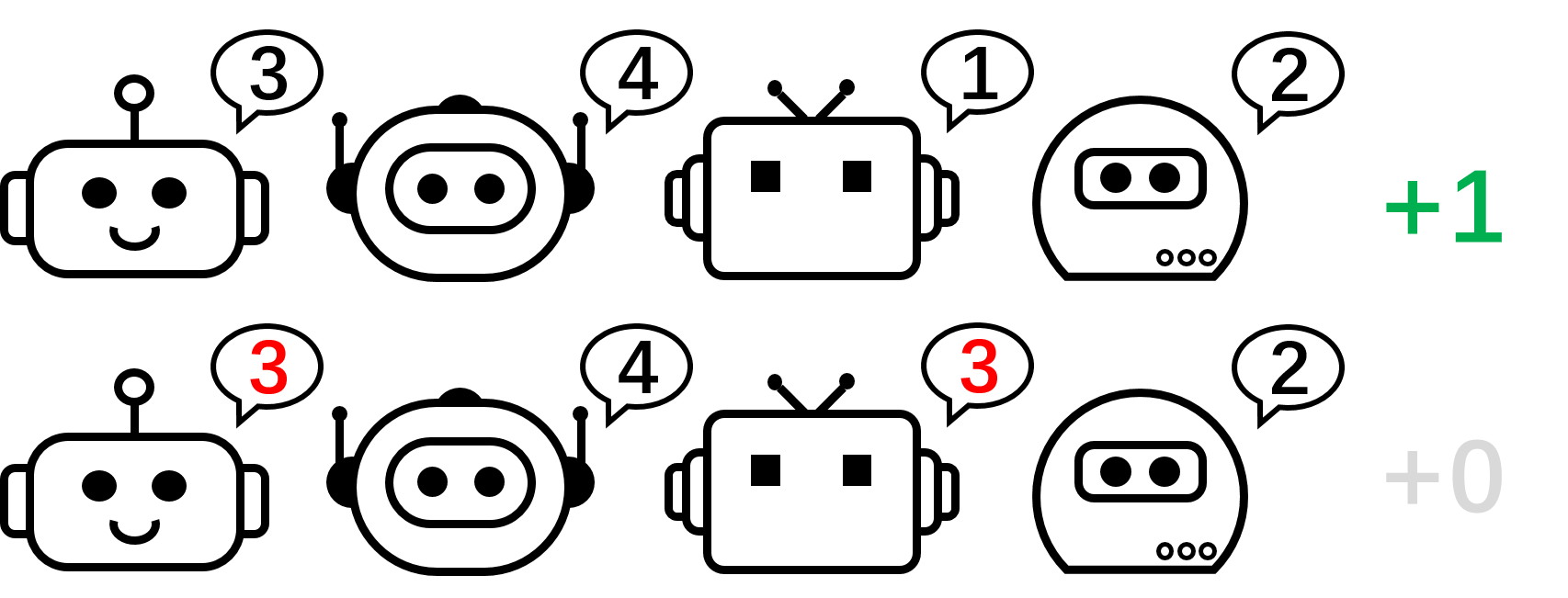

Figure 3: high-level intuition on why VD fails in the 2-player permutation game.

We formally prove by contradiction that VD cannot represent the payoff of the 2-player permutation game. If VD methods were able to represent the payoff, we would have

$$Q_\text{tot}(1, 2) = Q_\text{tot}(2, 1) = 1 \quad \text{and} \quad Q_\text{tot}(1, 1) = Q_\text{tot}(2, 2) = 0.$$

However, if either of the two agents has different local Q-values, e.g., $Q_1(1) > Q_1(2)$, then according to the IGM principle, we must have

$$1 = Q_\text{tot}(1, 2) = \max_{a^2} Q_\text{tot}(1, a^2) > \max_{a^2} Q_\text{tot}(2, a^2) = Q_\text{tot}(2, 1) = 1,$$

which is a contradiction.

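Otherwise, if $Q_1(1) = Q_1(2)$ and $Q_2(1) = Q_2(2)$, then

$$Q_\text{tot}(1, 1) = Q_\text{tot}(2, 2) = Q_\text{tot}(1, 2) = Q_\text{tot}(2, 1),$$

which contradicts the required payoff, since the optimal and non-optimal joint actions would receive the same value.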

As a result, value decomposition cannot represent the payoff matrix of the 2-player permutation game.
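As a numerical illustration of the second case, assume the additive VDN mixing $Q_\text{tot}(a^1, a^2) = Q_1(a^1) + Q_2(a^2)$, one particular IGM-satisfying choice, and fit it to the 2-player payoff by least squares. This sketch is not a proof for general mixing functions. It only shows what the best additive fit looks like. Function names are illustrative.

```python
from typing import List, Sequence, Tuple

Matrix = Sequence[Sequence[float]]


def additive_fit(payoff: Matrix) -> Tuple[List[float], List[float]]:
    """Least-squares fit of payoff[a1][a2] ~ Q1[a1] + Q2[a2] (VDN-style sum).

    For a complete table this is the classic two-way main-effects fit
    (row mean + column mean - grand mean), with the grand mean split
    evenly between the two agents.
    """
    rows, cols = len(payoff), len(payoff[0])
    grand = sum(sum(r) for r in payoff) / (rows * cols)
    row_means = [sum(r) / cols for r in payoff]
    col_means = [sum(payoff[i][j] for i in range(rows)) / rows for j in range(cols)]
    q1 = [m - grand / 2 for m in row_means]
    q2 = [m - grand / 2 for m in col_means]
    return q1, q2


# 2-player permutation game, actions 0-indexed: reward 1 iff a1 != a2.
payoff = [[0.0, 1.0], [1.0, 0.0]]
q1, q2 = additive_fit(payoff)
q_tot = [[q1[a1] + q2[a2] for a2 in range(2)] for a1 in range(2)]
print(q1, q2)  # [0.25, 0.25] [0.25, 0.25]
print(q_tot)   # [[0.5, 0.5], [0.5, 0.5]]
```

Both agents end up with identical local Q-values, and $Q_\text{tot}$ is a constant 0.5. Greedy action selection therefore carries no information about which joint actions are optimal.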

What about PG methods? Individual policies can indeed represent an optimal policy for the permutation game. Moreover, stochastic gradient descent can guarantee that PG converges to one of these optima under mild assumptions. This suggests that, even though PG methods are less popular in MARL than VD methods, they can be preferable in certain cases that are common in real-world applications, e.g., games with multiple strategy modalities.

We also remark that in the permutation game, to represent an optimal joint policy, each agent must choose a distinct action. Consequently, a successful implementation of PG must ensure that the policies are agent-specific. This can be done using either individual policies with unshared parameters (referred to as PG-Ind in our paper) or an agent-ID conditioned policy (PG-ID).
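Below is a toy sketch of PG-Ind on the permutation game. It rests on simplifying assumptions that differ from the paper's setup:

- tabular softmax policies with unshared logits per agent (no networks, no critic);
- the exact policy gradient, computed by enumerating all joint actions instead of sampling.

Starting from random logits, gradient ascent on the expected reward drives the agents to distinct actions, i.e., to one of the optimal permutations. All names are hypothetical.

```python
import itertools
import math
import random
from typing import List, Sequence, Tuple

Logits = List[List[float]]  # logits[i][k]: agent i's (unshared) logit for action k


def permutation_reward(joint_action: Sequence[int]) -> float:
    return 1.0 if len(set(joint_action)) == len(joint_action) else 0.0


def softmax(logits: Sequence[float]) -> List[float]:
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def exact_policy_gradient(logits: Logits) -> Tuple[Logits, float]:
    """Exact gradient of J = E[R] for independent softmax policies.

    Uses the score-function identity dJ/dlogit_i[k] = E[R * (1[a_i = k] - pi_i(k))],
    with the expectation computed by enumerating all n**n joint actions.
    """
    n = len(logits)
    probs = [softmax(row) for row in logits]
    grads = [[0.0] * n for _ in range(n)]
    expected_reward = 0.0
    for joint in itertools.product(range(n), repeat=n):
        r = permutation_reward(joint)
        if r == 0.0:
            continue  # zero-reward joint actions contribute nothing
        p = math.prod(probs[i][a] for i, a in enumerate(joint))
        expected_reward += p * r
        for i, a in enumerate(joint):
            for k in range(n):
                grads[i][k] += p * r * ((1.0 if k == a else 0.0) - probs[i][k])
    return grads, expected_reward


def train_pg_ind(n: int = 2, steps: int = 2000, lr: float = 1.0, seed: int = 0) -> Tuple[Tuple[int, ...], float]:
    rng = random.Random(seed)
    logits = [[rng.gauss(0.0, 1.0) for _ in range(n)] for _ in range(n)]
    for _ in range(steps):
        grads, _ = exact_policy_gradient(logits)
        for i in range(n):
            for k in range(n):
                logits[i][k] += lr * grads[i][k]  # gradient ascent on expected reward
    _, final_reward = exact_policy_gradient(logits)
    greedy = tuple(max(range(n), key=logits[i].__getitem__) for i in range(n))
    return greedy, final_reward


greedy, reward = train_pg_ind(n=2)
print(greedy, round(reward, 3))
```

Which permutation the agents settle on depends on the random initialization (the `seed` argument). Calling `train_pg_ind(n=3)` runs the same procedure on the 3-player game.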

PG outperforms the best VD methods on popular MARL testbeds

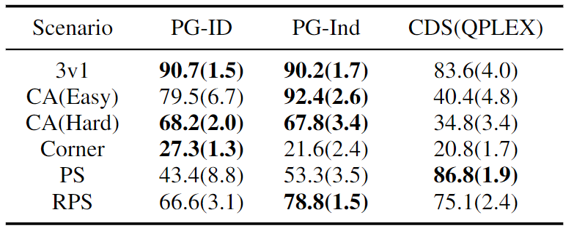

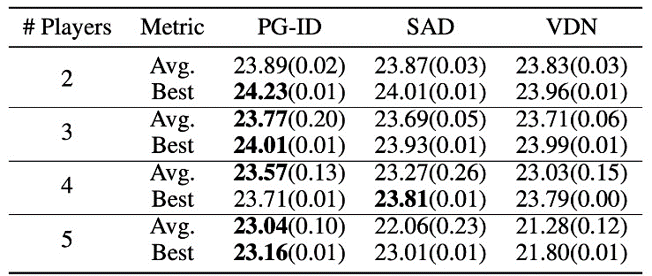

Going beyond the simple illustrative example of the permutation game, we extend our study to popular and more realistic MARL benchmarks. In addition to the StarCraft Multi-Agent Challenge (SMAC), where the effectiveness of PG and agent-conditioned policy input has been verified, we show new results in Google Research Football (GRF) and the multi-player Hanabi Challenge.

Figure 4: (top) winning rates of PG methods on GRF; (bottom) best and average evaluation scores on Hanabi-Full.

In GRF, PG methods outperform the state-of-the-art VD baseline (CDS) in 5 scenarios. Interestingly, individual policies without parameter sharing (PG-Ind) achieve winning rates comparable to, and sometimes higher than, agent-specific policies (PG-ID) in all 5 scenarios. We evaluate PG-ID in the full-scale Hanabi game with 2-5 players. We compare it to SAD, a strong off-policy Q-learning variant in Hanabi, and to Value Decomposition Networks (VDN). As shown in Figure 4 (bottom), PG-ID matches or exceeds the best and average rewards of SAD and VDN for every number of players, using the same number of environment steps.

Beyond higher rewards: learning multi-modal behavior via auto-regressive policy modeling

Besides achieving higher rewards, we also study how to learn multi-modal policies in cooperative MARL. Let's go back to the permutation game. Although we have proved that PG can effectively learn an optimal policy, the strategy mode it finally reaches can depend heavily on the policy initialization. Thus, a natural question is:

Can we learn a single policy that covers all the optimal modes?

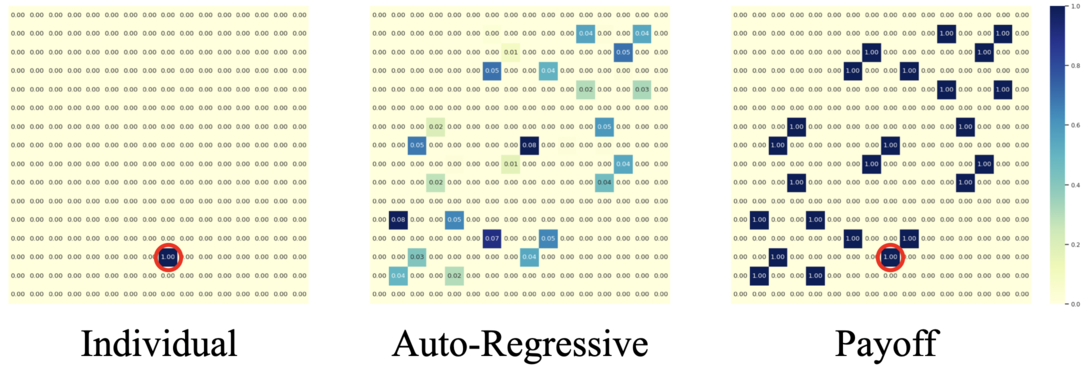

In the decentralized PG formulation, the factorized representation of a joint policy can only represent one particular mode. Therefore, we propose an enhanced way to parameterize the policies for stronger expressiveness: auto-regressive (AR) policies.

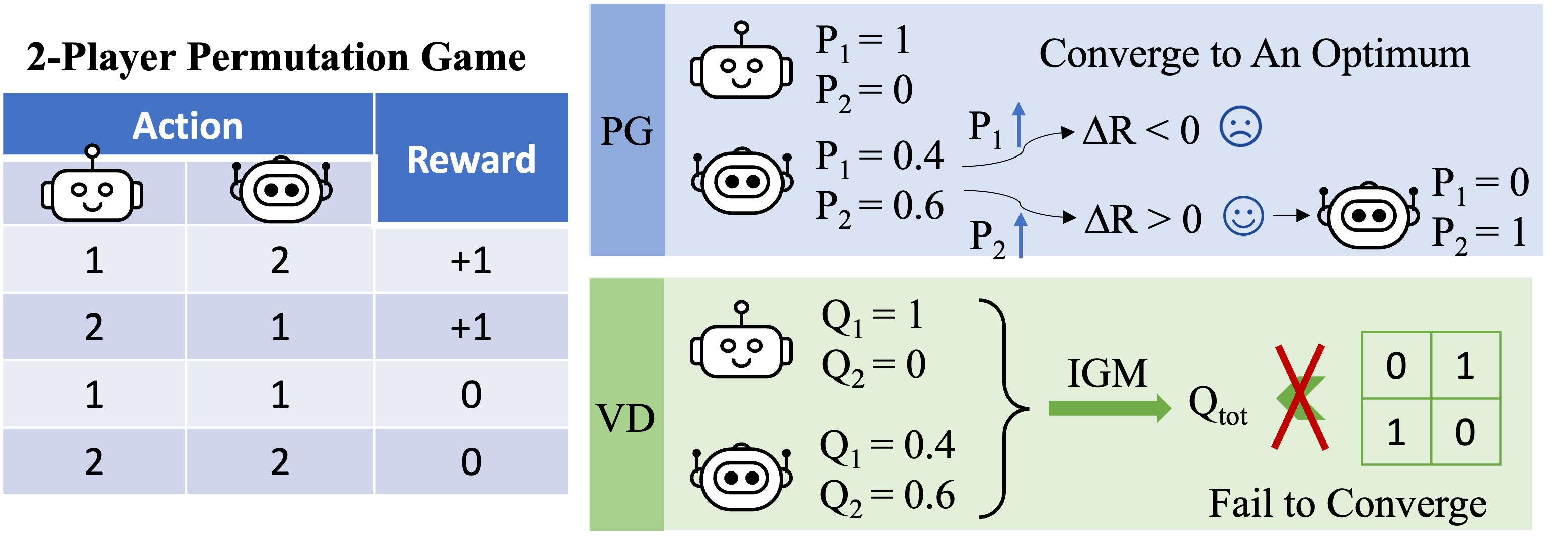

Figure 5: comparison between individual policies (PG) and auto-regressive policies (AR) in the 4-player permutation game.

Formally, we factorize the joint policy of $n$ agents into the form

$$\pi(\mathbf{a} \mid \mathbf{o}) \approx \prod_{i=1}^{n} \pi_{\theta^i}\big(a^i \mid o^i, a^1, \ldots, a^{i-1}\big),$$

where the action produced by agent $i$ depends on its own observation $o^i$ and all the actions from previous agents $1, \ldots, i-1$. The auto-regressive factorization can represent any joint policy in a centralized MDP. The only modification to each agent's policy is the input dimension, which is slightly enlarged by including previous actions; the output dimension of each agent's policy remains unchanged.
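The AR factorization changes only how each agent's input is assembled and the order in which agents act. This sketch makes two assumptions: previous actions are one-hot encoded, and slots for agents that have not acted yet are zero-padded. It also treats each policy as a hypothetical callable that returns action probabilities. The paper's exact input encoding may differ. Each policy's output still has size `n_actions`, matching the unchanged output dimension.

```python
import random
from typing import Callable, List, Sequence

# Hypothetical per-agent policy: maps an input feature vector to action probabilities.
PolicyFn = Callable[[List[float]], List[float]]


def one_hot(index: int, size: int) -> List[float]:
    vec = [0.0] * size
    vec[index] = 1.0
    return vec


def ar_input(obs: Sequence[float], prev_actions: Sequence[int], n_agents: int, n_actions: int) -> List[float]:
    # Agent i sees its own observation o^i plus one-hot actions a^1..a^{i-1}.
    # Slots of agents that have not acted yet are zero-padded (an assumption),
    # so every agent's input has length len(obs) + (n_agents - 1) * n_actions.
    feats = list(obs)
    for j in range(n_agents - 1):
        if j < len(prev_actions):
            feats += one_hot(prev_actions[j], n_actions)
        else:
            feats += [0.0] * n_actions
    return feats


def sample_joint_action(
    policies: Sequence[PolicyFn],
    observations: Sequence[Sequence[float]],
    n_actions: int,
    rng: random.Random,
) -> List[int]:
    # Agents act in a fixed order; each conditions on the actions already sampled.
    actions: List[int] = []
    for i, policy in enumerate(policies):
        probs = policy(ar_input(observations[i], actions, len(policies), n_actions))
        actions.append(rng.choices(range(n_actions), weights=probs)[0])
    return actions
```

For example, with 3 agents, 3 actions, and a 1-dimensional observation, `ar_input([0.5], [2], 3, 3)` returns `[0.5, 0.0, 0.0, 1.0, 0.0, 0.0, 0.0]`.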

With such a minimal parameterization overhead, the AR policy substantially improves the representation power of PG methods. We remark that PG with AR policies (PG-AR) can simultaneously represent all optimal policy modes in the permutation game.
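To see why an AR policy can cover every mode, consider a hand-constructed (not learned) conditional policy in which each agent picks uniformly among the actions its predecessors have not taken. The sketch enumerates its joint distribution with exact fractions. By contrast, a product of independent policies whose support lies entirely on permutations must be deterministic. If one agent randomized over two actions, the other $N-1$ agents would have to pick distinct actions from the remaining $N-2$, which is impossible. Function names are illustrative.

```python
import itertools
import math
from fractions import Fraction
from typing import Dict, Sequence, Tuple


def ar_conditional(prev_actions: Sequence[int], n: int) -> Dict[int, Fraction]:
    # Hand-built pi_i(a | a^1..a^{i-1}): uniform over actions no earlier agent took.
    unused = [a for a in range(n) if a not in prev_actions]
    return {a: Fraction(1, len(unused)) for a in unused}


def ar_joint_prob(joint: Tuple[int, ...], n: int) -> Fraction:
    # Chain rule over the auto-regressive factorization.
    prob = Fraction(1)
    for i, a in enumerate(joint):
        prob *= ar_conditional(joint[:i], n).get(a, Fraction(0))
    return prob


n = 4
dist = {joint: ar_joint_prob(joint, n) for joint in itertools.product(range(n), repeat=n)}
support = [joint for joint, p in dist.items() if p > 0]
print(len(support), sum(dist.values()))     # 24 1
print(set(dist[j] for j in support))        # {Fraction(1, 24)}
# Independent uniform policies, for comparison: P(permutation) = n!/n**n.
print(Fraction(math.factorial(n), n ** n))  # 3/32
```

Every one of the $4! = 24$ permutations receives probability $1/24$. Independent uniform policies, in contrast, land on a permutation only with probability $24/256 = 3/32$.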

Figure: heatmaps of actions for policies learned by PG-Ind (left) and PG-AR (middle), and the heatmap of rewards (right). PG-Ind only converges to a specific mode in the 4-player permutation game, while PG-AR successfully discovers all the optimal modes.

In more complex environments, including SMAC and GRF, PG-AR can learn interesting emergent behaviors that require strong inter-agent coordination and may never be learned by PG-Ind.

Figure 6: (top) emergent behavior induced by PG-AR in SMAC and GRF. On the 2m_vs_1z map of SMAC, the marines keep standing and attack alternately, ensuring that only one marine attacks at each timestep; (bottom) in the academy_3_vs_1_with_keeper scenario of GRF, agents learn a “Tiki-Taka” style behavior: each player keeps passing the ball to their teammates.

Discussions and Takeaways

In this post, we provide a concrete analysis of VD and PG methods in cooperative MARL. First, we reveal a limitation in the expressiveness of popular VD methods, showing that they cannot represent optimal policies even in a simple permutation game. By contrast, we show that PG methods are provably more expressive. We empirically verify the expressiveness advantage of PG on popular MARL testbeds, including SMAC, GRF, and the Hanabi Challenge. We hope the insights from this work help the community develop more general and more powerful cooperative MARL algorithms in the future.

This post is based on our joint paper with Zelai Xu: Revisiting Some Common Practices in Cooperative Multi-Agent Reinforcement Learning (paper, website).

BAIR Blog is the official blog of the Berkeley Artificial Intelligence Research (BAIR) Lab.
