What’s your AI risk mitigation plan? Just as you wouldn’t set off on a journey without checking the roads, knowing your route, and preparing for possible delays or mishaps, you need a model risk management plan in place for your machine learning projects. A well-designed model combined with proper AI governance can help minimize unintended outcomes like AI bias. With the right mix of people, processes, and technology in place, you can minimize the risks associated with your AI projects.

Is There Such a Thing as Unbiased AI?

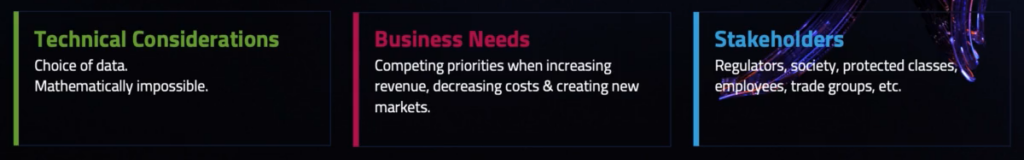

A common concern with AI when discussing governance is bias. Is it possible to have an unbiased AI model? The hard truth is no. You should be wary of anyone who tells you otherwise. While there are mathematical reasons a model can’t be unbiased, it’s just as important to recognize that factors like competing business needs can also contribute to the problem. This is why good AI governance is so important.

So, rather than trying to create a model that’s unbiased, look to create one that is fair and behaves as intended when deployed. A fair model is one whose outcomes are measured against sensitive attributes of the data (e.g., gender, race, age, disability, and religion).
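As a rough illustration of measuring outcomes against a sensitive attribute, the sketch below compares the share of favorable outcomes per group and reports the ratio of the lowest rate to the highest. The records, the field names (`gender`, `approved`), and the helper functions are hypothetical and chosen only for illustration. This is one simple check among many, not a complete fairness assessment.

```python
from collections import defaultdict


def favorable_rate_by_group(records, group_key, outcome_key):
    """Return {group: share of records with a favorable (truthy) outcome}."""
    totals = defaultdict(int)
    favorable = defaultdict(int)
    for row in records:
        group = row[group_key]
        totals[group] += 1
        if row[outcome_key]:
            favorable[group] += 1
    return {group: favorable[group] / totals[group] for group in totals}


def rate_ratio(rates):
    """Ratio of the lowest group rate to the highest; 1.0 means equal rates."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # no group received a favorable outcome, so rates are equal
    return min(rates.values()) / highest


# Hypothetical model decisions, for illustration only.
predictions = [
    {"gender": "F", "approved": 1},
    {"gender": "F", "approved": 0},
    {"gender": "F", "approved": 1},
    {"gender": "F", "approved": 0},
    {"gender": "M", "approved": 1},
    {"gender": "M", "approved": 1},
    {"gender": "M", "approved": 1},
    {"gender": "M", "approved": 0},
]

rates = favorable_rate_by_group(predictions, "gender", "approved")
ratio = rate_ratio(rates)
print(rates)
print(round(ratio, 2))
```

A ratio well below 1.0 suggests the model’s outcomes differ across groups and deserve a closer look. What counts as acceptable depends on your use case and on the regulations that apply to it.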

Validating Fairness Throughout the AI Lifecycle

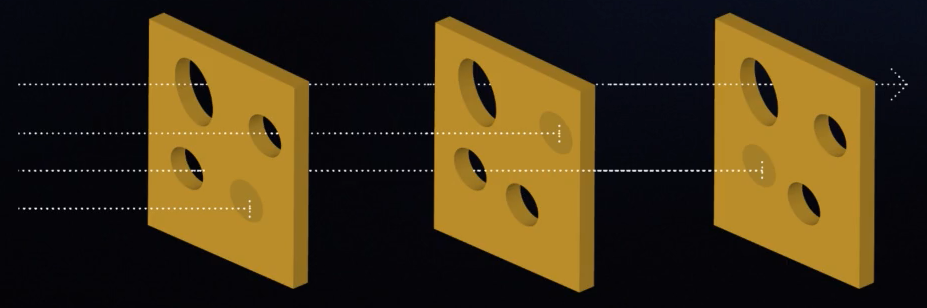

One risk mitigation method is a three-pronged approach to mitigating risk across multiple dimensions of the AI lifecycle. The Swiss cheese framework recognizes that no single set of defenses will ensure fairness by removing all hazards. But with multiple lines of defense, the overlapping layers are a powerful form of risk management. It’s a proven model that has worked in aviation and healthcare for decades, and it is just as valid for enterprise AI platforms.

The first slice is about getting the right people involved. You need people who can identify the need, construct the model, and monitor its performance. A diversity of voices helps the model align with an organization’s values.

The second slice is having MLOps processes in place that allow for repeatable deployments. Standardized processes make it possible to track model updates, maintain model accuracy through continual learning, and enforce approval workflows. Workflow approval, monitoring, continuous learning, and version control are all part of this system.

The third slice is the MLDev technology that allows for common practices, auditable workflows, version control, and consistent model KPIs. You need tools to evaluate the model’s behavior and confirm its integrity. They should come from a limited and interoperable set of technologies so that you can identify risks, such as technical debt. The more custom components you have in your MLDev environment, the more likely you are to introduce unnecessary complexity, unintended consequences, and bias.

The Challenge of Complying with New Regulations

All of these layers need to be considered against the landscape of regulation. In the U.S., for example, regulation can come from local, state, and federal jurisdictions. The EU and Singapore are taking similar steps to codify regulations concerning AI governance.

There’s an explosion of new models and techniques, yet flexibility is needed to adapt as new laws are implemented. Complying with these proposed regulations is becoming increasingly challenging.

In these proposals, AI regulation isn’t limited to fields like insurance and finance. We’re seeing regulatory guidance reach into fields such as education, safety, healthcare, and employment. If you’re not prepared for AI regulation in your industry now, it’s time to start thinking about it, because it’s coming.

Document Design and Deployment For Regulations and Clarity

Model risk management will become commonplace as regulations increase and are enforced. The ability to document your design and deployment choices will help you move quickly and make sure you’re not left behind. If you have the layers mentioned above in place, then explainability should be easy.

- People, process, and technology are your internal lines of defense when it comes to AI governance.

- Be sure you understand who all of your stakeholders are, including those who might get overlooked.

- Look for ways to have workflow approvals, version control, and significant monitoring.

- Make sure you think about explainable AI and workflow standardization.

- Look for ways to codify your processes. Create a process, document the process, and stick to the process.

In the recorded session Enterprise-Ready AI: Managing Governance and Risk, you can learn strategies for building good governance processes and tips for monitoring your AI system. Get started by creating a plan for governance and identifying your existing resources, as well as learning where to ask for help.

About the author

Field CTO, DataRobot

Ted Kwartler is the Field CTO at DataRobot. Ted sets product strategy for explainable and ethical uses of data technology. Ted brings unique insights and experience utilizing data, business acumen, and ethics to his current and previous positions at Liberty Mutual Insurance and Amazon. In addition to having four DataCamp courses, he teaches graduate courses at the Harvard Extension School and is the author of “Text Mining in Practice with R.” Ted is an advisor to the US Government Bureau of Economic Affairs, sitting on a Congressionally mandated committee called the “Advisory Committee for Data for Evidence Building,” advocating for data-driven policies.