This story originally appeared in The Algorithm, our weekly newsletter on AI.

While the US and the EU may differ on how to regulate tech, their lawmakers seem to agree on one thing: the West needs to ban AI-powered social scoring.

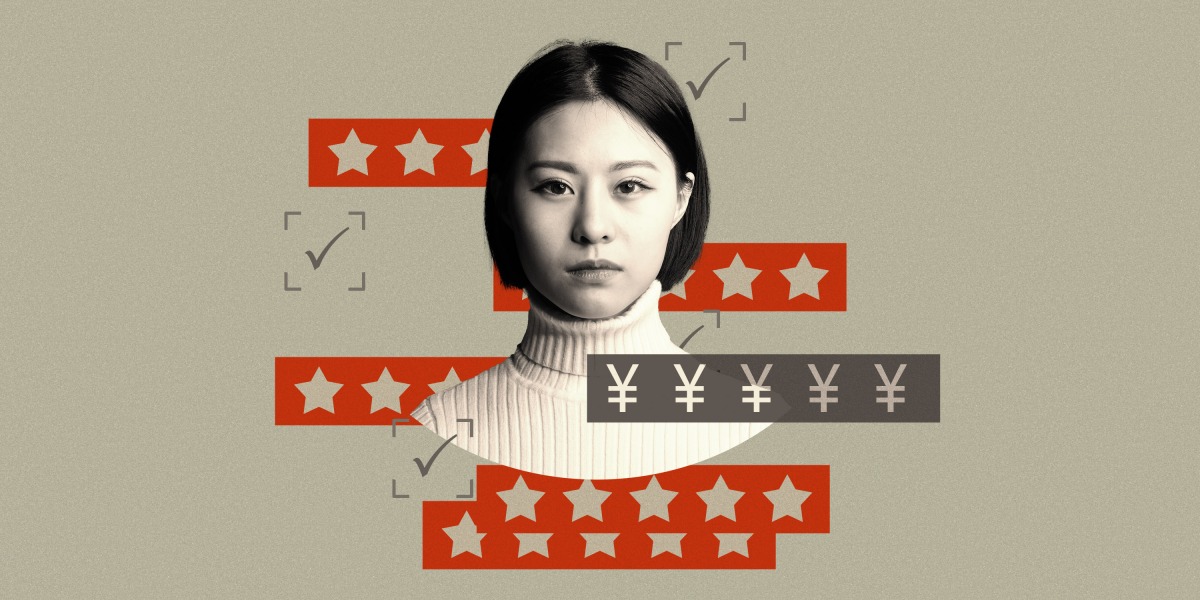

As they understand it, social scoring is a practice in which authoritarian governments, specifically China, rank people’s trustworthiness and punish them for undesirable behaviors, such as stealing or not paying back loans. Essentially, it’s seen as a dystopian superscore assigned to each citizen.

The EU is currently negotiating a new law called the AI Act, which will ban member states, and maybe even private companies, from implementing such a system.

The trouble is, it’s “essentially banning thin air,” says Vincent Brussee, an analyst at the Mercator Institute for China Studies, a German think tank.

Back in 2014, China announced a six-year plan to build a system rewarding actions that build trust in society and penalizing the opposite. Eight years on, it has only just released a draft law that tries to codify past social credit pilots and guide future implementation.

There have been some contentious local experiments, such as one in the small city of Rongcheng in 2013, which gave every resident a starting personal credit score of 1,000 that can be increased or decreased by how their actions are judged. People are now able to opt out, and the local government has removed some controversial criteria.

But these have not gained wider traction elsewhere and do not apply to the entire Chinese population. There is no countrywide, all-seeing social credit system with algorithms that rank people.

As my colleague Zeyi Yang explains, “the reality is, that terrifying system doesn’t exist, and the central government doesn’t seem to have much appetite to build it, either.”

What has been implemented is mostly pretty low-tech. It’s a “mix of attempts to regulate the financial credit industry, enable government agencies to share data with each other, and promote state-sanctioned moral values,” Zeyi writes.

Kendra Schaefer, a partner at Trivium China, a Beijing-based research consultancy, who compiled a report on the subject for the US government, couldn’t find a single case in which data collection in China led to automated sanctions without human intervention. The South China Morning Post found that in Rongcheng, human “information gatherers” would walk around town and write down people’s misbehavior using a pen and paper.

The myth originates from a pilot program called Sesame Credit, developed by Chinese tech company Alibaba. This was an attempt to assess people’s creditworthiness using customer data at a time when the majority of Chinese people didn’t have a credit card, says Brussee. The effort became conflated with the social credit system as a whole in what Brussee describes as a “game of Chinese whispers.” And the misunderstanding took on a life of its own.

The irony is that while US and European politicians depict this as a problem stemming from authoritarian regimes, systems that rank and penalize people are already in place in the West. Algorithms designed to automate decisions are being rolled out en masse and used to deny people housing, jobs, and basic services.

For example, in Amsterdam, authorities have used an algorithm to rank young people from disadvantaged neighborhoods according to their likelihood of becoming a criminal. They claim the aim is to prevent crime and help offer better, more targeted support.

But in reality, human rights groups argue, it has increased stigmatization and discrimination. The young people who end up on this list face more stops from police, home visits from authorities, and more stringent supervision from school and social workers.

It’s easy to take a stand against a dystopian algorithm that doesn’t really exist. But as lawmakers in both the EU and the US try to build a shared understanding of AI governance, they would do better to look closer to home. Americans do not even have a federal privacy law that would offer some basic protections against algorithmic decision making.

There is also a dire need for governments to conduct honest, thorough audits of the way authorities and companies use AI to make decisions about our lives. They might not like what they find, but that makes it all the more crucial for them to look.

Deeper Learning

A bot that watched 70,000 hours of Minecraft could unlock AI’s next big thing

Research company OpenAI has built an AI that binged on 70,000 hours of videos of people playing Minecraft in order to play the game better than any AI before. It’s a breakthrough for a powerful new technique, called imitation learning, that could be used to train machines to carry out a wide range of tasks by watching humans do them first. It also raises the potential that sites like YouTube could be a vast and untapped source of training data.

Why it’s a big deal: Imitation learning can be used to train AI to control robot arms, drive cars, or navigate websites. Some people, such as Meta’s chief AI scientist, Yann LeCun, think that watching videos will eventually help us train an AI with human-level intelligence. Read Will Douglas Heaven’s story here.

Bits and Bytes

Meta’s game-playing AI can make and break alliances like a human

Diplomacy is a popular strategy game in which seven players compete for control of Europe by moving pieces around on a map. The game requires players to talk to each other and spot when others are bluffing. Meta’s new AI, called Cicero, managed to trick humans to win.

It’s a big step forward toward AI that can help with complex problems, such as planning routes around busy traffic and negotiating contracts. But I’m not going to lie: it’s also an unnerving thought that an AI can so successfully deceive humans. (MIT Technology Review)

We could run out of data to train AI language programs

The trend of creating ever bigger AI models means we need even bigger data sets to train them. The trouble is, we might run out of suitable data by 2026, according to a paper by researchers from Epoch, an AI research and forecasting organization. This should prompt the AI community to come up with ways to do more with existing resources. (MIT Technology Review)

Stable Diffusion 2.0 is out

The open-source text-to-image AI Stable Diffusion has been given a big facelift, and its outputs are looking a lot sleeker and more realistic than before. It can even do hands. The pace of Stable Diffusion’s development is breathtaking. Its first version only launched in August. We are likely going to see even more progress in generative AI well into next year.