Did a human write that, or ChatGPT? It can be hard to tell. Perhaps too hard, thinks its creator OpenAI, which is why the company is working on a way to “watermark” AI-generated content.

In a lecture at the University of Texas at Austin, computer science professor Scott Aaronson, currently a guest researcher at OpenAI, revealed that OpenAI is developing a tool for “statistically watermarking the outputs of a text [AI system].” Whenever a system such as ChatGPT generates text, the tool would embed an “unnoticeable secret signal” indicating where the text came from.

OpenAI engineer Hendrik Kirchner built a working prototype, Aaronson says, and the hope is to build it into future OpenAI-developed systems.

“We want it to be much harder to take [an AI system’s] output and pass it off as if it came from a human,” Aaronson said in his remarks. “This could be helpful for preventing academic plagiarism, obviously, but also, for example, mass generation of propaganda, you know, spamming every blog with seemingly on-topic comments supporting Russia’s invasion of Ukraine without even a building full of trolls in Moscow. Or impersonating someone’s writing style in order to incriminate them.”

Exploiting randomness

Why the need for a watermark? ChatGPT is a strong example. The chatbot developed by OpenAI has taken the internet by storm, showing a flair not only for answering challenging questions but also for writing poetry, solving programming puzzles and waxing poetic on any number of philosophical topics.

While ChatGPT is highly amusing (and genuinely useful), the system raises obvious ethical concerns. Like many of the text-generating systems before it, ChatGPT could be used to write high-quality phishing emails and harmful malware, or to cheat at school assignments. And as a question-answering tool, it’s factually inconsistent, a shortcoming that led programming Q&A site Stack Overflow to ban answers originating from ChatGPT until further notice.

To understand the technical underpinnings of OpenAI’s watermarking tool, it helps to know why systems like ChatGPT work as well as they do. These systems treat input and output text as strings of “tokens,” which can be words but also punctuation marks and parts of words. At their core, the systems are constantly computing a mathematical function called a probability distribution over possible next tokens (e.g., words), taking into account all previously output tokens, to decide which token to output next.
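As a rough illustration of that last step, the sketch below uses a hypothetical `toy_model` function in place of a real neural network and turns its raw scores into probabilities with softmax, the usual way such models normalize their outputs. The tokens and scores are invented for illustration.

```python
import math

def toy_model(context):
    """Hypothetical stand-in for a language model: returns a raw score
    (logit) for each candidate next token, given all tokens so far."""
    if context and context[-1] == " the":
        return {" mat": 3.0, " sofa": 2.0, " roof": 1.0, ".": -1.0}
    return {" the": 2.0, " a": 1.5, ".": 0.5}

def softmax(logits):
    """Turn raw scores into a probability distribution that sums to 1."""
    peak = max(logits.values())  # subtract the max for numerical stability
    exps = {tok: math.exp(s - peak) for tok, s in logits.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}

# Tokens can be whole words, word pieces or punctuation; this split is made up.
context = ["The", " cat", " sat", " on", " the"]
dist = softmax(toy_model(context))
for tok, p in sorted(dist.items(), key=lambda kv: -kv[1]):
    print(f"{tok!r}: {p:.3f}")
```

Changing the context changes the scores, and therefore the whole distribution, which is what “taking into account all previously output tokens” means in practice.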

In the case of OpenAI-hosted systems like ChatGPT, once the distribution is generated, OpenAI’s server does the job of sampling tokens according to that distribution. There’s some randomness in this selection; that’s why the same text prompt can yield a different response.
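A minimal sketch of the sampling step, assuming the distribution has already been computed (the tokens and probabilities below are invented). Python’s `random.choices` draws an item with probability proportional to its weight, so likely tokens win most of the time but not always.

```python
import random

def sample_next_token(dist, rng):
    """Draw one token at random, weighted by its probability."""
    tokens = list(dist)
    weights = [dist[tok] for tok in tokens]
    return rng.choices(tokens, weights=weights, k=1)[0]

# Hypothetical distribution for the next token after "The cat sat on the".
dist = {" mat": 0.60, " sofa": 0.25, " roof": 0.10, ".": 0.05}

rng = random.Random()  # unseeded: each run can pick differently
print([sample_next_token(dist, rng) for _ in range(5)])
```

Running this twice will usually print different lists, the same effect as a prompt yielding different responses; passing a fixed seed, as in `random.Random(42)`, makes the draws repeatable.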

OpenAI’s watermarking tool acts like a “wrapper” over existing text-generating systems, Aaronson said during the lecture, leveraging a cryptographic function running at the server level to “pseudorandomly” select the next token. In theory, text generated by the system would still look random to you or me, but anyone possessing the “key” to the cryptographic function would be able to uncover a watermark.
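The article does not say which cryptographic function OpenAI’s tool uses, so what follows is only a sketch of the general idea under stated assumptions: a hypothetical `prf` helper built on HMAC-SHA256, a made-up key, and a window of the previous four tokens (`ngram=4`) mixed into the pseudorandom input. Selection uses a standard weighted-sampling trick: give each candidate a pseudorandom number r in (0, 1) and pick the token that maximizes r^(1/p). If r behaved like a truly random number, each token would win with exactly its model probability p, so the text should read no differently; yet anyone holding the key can recompute every choice.

```python
import hashlib
import hmac
import math

def prf(key: bytes, context: tuple, candidate: str) -> float:
    """Keyed pseudorandom number in (0, 1) for a (context, candidate) pair.
    Same key and inputs give the same number; without the key it looks random."""
    msg = "\x1f".join(context + (candidate,)).encode("utf-8")
    digest = hmac.new(key, msg, hashlib.sha256).digest()
    n = int.from_bytes(digest[:8], "big") >> 12  # keep 52 bits
    return (n + 0.5) / 2**52                     # strictly between 0 and 1

def watermarked_choice(dist, prev_tokens, key, ngram=4):
    """Pick the token maximizing r ** (1 / p), compared in log space
    (log(r) / p) to avoid underflow for small probabilities."""
    context = tuple(prev_tokens[-ngram:])
    best_tok, best_score = None, -math.inf
    for tok, p in dist.items():
        if p <= 0:
            continue  # never pick tokens the model rules out
        score = math.log(prf(key, context, tok)) / p
        if score > best_score:
            best_tok, best_score = tok, score
    return best_tok

KEY = b"hypothetical-server-secret"  # stands in for the provider's secret key
dist = {" mat": 0.60, " sofa": 0.25, " roof": 0.10, ".": 0.05}
prev = ["The", " cat", " sat", " on", " the"]
print(watermarked_choice(dist, prev, KEY))
```

The design choice worth noting is that nothing random is left on the server: given the key and the preceding tokens, the output is deterministic, which is exactly what makes later detection possible.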

“Empirically, a few hundred tokens seem to be enough to get a reasonable signal that yes, this text came from [an AI system]. In principle, you could even take a long text and isolate which parts probably came from [the system] and which parts probably didn’t,” Aaronson said. “[The tool] can do the watermarking using a secret key and it can check for the watermark using the same key.”
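Detection can be sketched in the same toy setting, again assuming an HMAC-based `prf`, a four-token context window (`NGRAM`) and a hypothetical 50-token model rather than anything OpenAI has published. With the key, the checker recomputes r for every token actually in the text and averages -ln(1 - r). For ordinary text each term averages about 1, while keyed selection favors tokens with large r, so the average climbs; accumulating that evidence over a few hundred tokens is what makes the verdict reliable. With the wrong key, the score falls back to the ordinary-text baseline.

```python
import hashlib
import hmac
import math
import random

NGRAM = 4  # assumption: the key is combined with the previous 4 tokens

def prf(secret, context, candidate):
    """Keyed pseudorandom number in (0, 1)."""
    msg = "\x1f".join(context + (candidate,)).encode("utf-8")
    digest = hmac.new(secret, msg, hashlib.sha256).digest()
    return ((int.from_bytes(digest[:8], "big") >> 12) + 0.5) / 2**52

def toy_distribution():
    """Hypothetical model: 50 word tokens with mildly uneven probabilities."""
    weights = {f"w{i}": 1 + i % 5 for i in range(50)}
    total = sum(weights.values())
    return {tok: w / total for tok, w in weights.items()}

def generate(n, secret=None, seed=0):
    """Generate n tokens; watermark them if a secret key is given."""
    dist, rng, out = toy_distribution(), random.Random(seed), []
    for _ in range(n):
        context = tuple(out[-NGRAM:])
        if secret is None:  # ordinary weighted sampling
            tok = rng.choices(list(dist), weights=list(dist.values()))[0]
        else:               # keyed choice: maximize r ** (1 / p)
            tok = max(dist, key=lambda t: math.log(prf(secret, context, t)) / dist[t])
        out.append(tok)
    return out

def watermark_score(tokens, secret):
    """Average of -ln(1 - r): about 1.0 for unwatermarked text,
    noticeably higher when tokens were chosen to make r large."""
    total = 0.0
    for t, tok in enumerate(tokens):
        r = prf(secret, tuple(tokens[max(0, t - NGRAM):t]), tok)
        total += -math.log(1.0 - r)
    return total / len(tokens)

KEY = b"hypothetical-server-secret"
marked = generate(300, secret=KEY)
plain = generate(300, seed=1)
print(f"watermarked, right key: {watermark_score(marked, KEY):.2f}")
print(f"ordinary text:          {watermark_score(plain, KEY):.2f}")
print(f"watermarked, wrong key: {watermark_score(marked, b'other-key'):.2f}")
```

The exact numbers depend on the invented settings. Real models are often much more confident about the next token than this toy, and low-entropy choices leave less room to steer, so each real token would typically carry a weaker signal.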

Key limitations

Watermarking AI-generated text isn’t a new idea. Previous attempts, most of them rules-based, have relied on techniques like synonym substitutions and syntax-specific word changes. But outside of theoretical research published by the German institute CISPA last March, OpenAI’s appears to be one of the first cryptography-based approaches to the problem.

When contacted for comment, Aaronson declined to reveal more about the watermarking prototype, save that he expects to co-author a research paper in the coming months. OpenAI also declined, saying only that watermarking is among several “provenance techniques” it’s exploring to detect outputs generated by AI.

Unaffiliated academics and industry experts, however, shared mixed opinions. They note that the tool is server-side, meaning it wouldn’t necessarily work with all text-generating systems. And they argue that it’d be trivial for adversaries to work around.

“I think it would be fairly easy to get around it by rewording, using synonyms, etc.,” Srini Devadas, a computer science professor at MIT, told TechCrunch via email. “This is a bit of a tug of war.”
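Devadas’s point can be made concrete with a toy under loudly stated assumptions: a watermark seeded by the previous four tokens (`NGRAM`), a made-up 50-token vocabulary with a uniform model, and “rewording” caricatured as swapping every k-th token for a different one. None of this is OpenAI’s implementation, and real paraphrasing also changes length and word order.

```python
import hashlib
import hmac
import math

NGRAM = 4  # assumption: the key is combined with the previous 4 tokens
VOCAB = [f"w{i}" for i in range(50)]  # hypothetical vocabulary

def prf(secret, context, candidate):
    """Keyed pseudorandom number in (0, 1)."""
    msg = "\x1f".join(context + (candidate,)).encode("utf-8")
    digest = hmac.new(secret, msg, hashlib.sha256).digest()
    return ((int.from_bytes(digest[:8], "big") >> 12) + 0.5) / 2**52

def generate_watermarked(n, secret):
    """Toy model with equal probabilities, so the keyed rule reduces to
    picking the candidate with the largest pseudorandom value."""
    out = []
    for _ in range(n):
        context = tuple(out[-NGRAM:])
        out.append(max(VOCAB, key=lambda tok: prf(secret, context, tok)))
    return out

def watermark_score(tokens, secret):
    """Average -ln(1 - r): about 1.0 without a watermark."""
    total = 0.0
    for t, tok in enumerate(tokens):
        r = prf(secret, tuple(tokens[max(0, t - NGRAM):t]), tok)
        total += -math.log(1.0 - r)
    return total / len(tokens)

def reword(tokens, every):
    """Crude stand-in for paraphrasing: replace every `every`-th token
    with a different one, as if swapping in a synonym."""
    out = list(tokens)
    for t in range(0, len(out), every):
        out[t] = VOCAB[(VOCAB.index(out[t]) + 1) % len(VOCAB)]
    return out

KEY = b"hypothetical-server-secret"
text = generate_watermarked(400, KEY)
print("unedited:", round(watermark_score(text, KEY), 2))
for every in (20, 10, 5):
    print(f"1 in {every} tokens reworded:", round(watermark_score(reword(text, every), KEY), 2))
```

Because each position’s pseudorandom value depends on the tokens before it, one substitution spoils up to `NGRAM + 1` scores: the swapped token itself and the next four, whose context it changed. In this toy, editing one token in five therefore leaves essentially no signal, which is the tug of war in miniature: a longer context window makes the watermark more fragile to edits, a shorter one makes repeated patterns easier to spot.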

Jack Hessel, a research scientist at the Allen Institute for AI, pointed out that it’d be difficult to imperceptibly fingerprint AI-generated text because each token is a discrete choice. Too obvious a fingerprint might result in odd words being chosen that degrade fluency, while too subtle a fingerprint would leave room for doubt when someone looks for it.
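Hessel’s trade-off is easiest to see in a deliberately simple scheme, which is not necessarily how OpenAI’s tool works: secretly favor a subset of tokens by adding a bonus `delta` to their raw scores. The share of probability landing on favored tokens measures how detectable the fingerprint is; the KL divergence between the nudged and original distributions is used here as a rough proxy for how far the text drifts from what the model would naturally write. The logits and the favored set are invented.

```python
import math

def softmax(logits):
    """Convert a list of raw scores into probabilities."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    s = sum(exps)
    return [e / s for e in exps]

def kl_divergence(p, q):
    """KL(p || q) in nats: how much p departs from q."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)

def bias_tradeoff(logits, favored, delta):
    """Return (probability share on favored tokens, KL cost) after adding
    delta to the logits of the favored tokens."""
    base = softmax(logits)
    biased = softmax([x + delta if i in favored else x for i, x in enumerate(logits)])
    signal = sum(biased[i] for i in favored)
    cost = kl_divergence(biased, base)
    return signal, cost

# Hypothetical scores for 6 candidate tokens; tokens 1, 3 and 5 play the role
# of the secretly favored set.
logits = [2.0, 1.5, 1.0, 0.5, 0.0, -0.5]
favored = {1, 3, 5}
for delta in (0.0, 0.5, 2.0, 6.0):
    signal, cost = bias_tradeoff(logits, favored, delta)
    print(f"delta={delta:>3}: favored share={signal:.2f}, KL cost={cost:.3f} nats")
```

A small `delta` barely moves the favored share above its natural level, so detection stays uncertain; a large one makes favored tokens nearly certain to appear, which is easy to detect but forces the model away from its preferred words.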

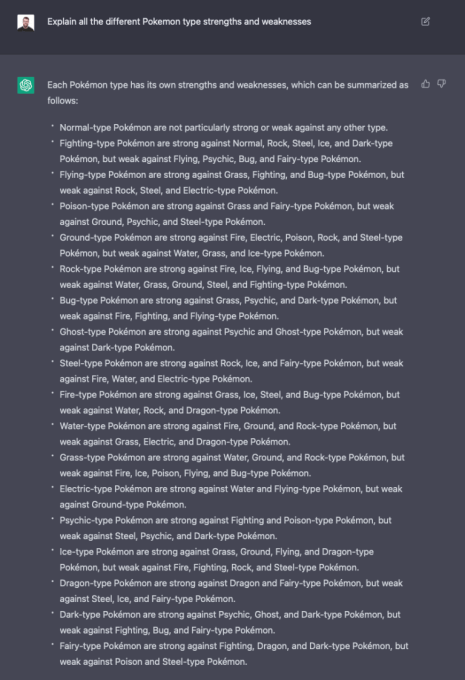

ChatGPT answering a query.

Yoav Shoham, the co-founder and co-CEO of AI21 Labs, an OpenAI rival, doesn’t think that statistical watermarking will be enough to help identify the source of AI-generated text. He calls for a “more comprehensive” approach that includes differential watermarking, in which different parts of a text are watermarked differently, and AI systems that more accurately cite the sources of factual text.

This particular watermarking technique also requires placing a lot of trust (and power) in OpenAI, experts noted.

“A good fingerprinting would not be discernible by a human reader and enable highly confident detection,” Hessel said via email. “Depending on how it’s set up, it could be that OpenAI themselves might be the only party able to confidently provide that detection because of how the ‘signing’ process works.”

In his lecture, Aaronson acknowledged the scheme would only really work in a world where companies like OpenAI are ahead in scaling up state-of-the-art systems, and where they all agree to be responsible players. Even if OpenAI were to share the watermarking tool with other text-generating system providers, like Cohere and AI21 Labs, this wouldn’t prevent others from choosing not to use it.

“If [it] becomes a free-for-all, then a lot of the safety measures do become harder, and might even be impossible, at least without government regulation,” Aaronson said. “In a world where anyone could build their own text model that was just as good as [ChatGPT, for example] … what would you do there?”

That’s how it has played out in the text-to-image domain. Unlike OpenAI, whose DALL-E 2 image-generating system is only available through an API, Stability AI open-sourced its text-to-image tech (called Stable Diffusion). While DALL-E 2 has a number of filters at the API level to prevent problematic images from being generated (plus watermarks on the images it generates), the open source Stable Diffusion does not. Bad actors have used it to create deepfaked porn, among other toxicity.

For his part, Aaronson is optimistic. In the lecture, he expressed the belief that, if OpenAI can demonstrate that watermarking works and doesn’t affect the quality of the generated text, it has the potential to become an industry standard.

Not everyone agrees. As Devadas points out, the tool needs a key, meaning it can’t be completely open source, potentially limiting its adoption to organizations that agree to partner with OpenAI. (If the key were made public, anyone could deduce the pattern behind the watermarks, defeating their purpose.)

But it might not be so far-fetched. A representative for Quora said the company would be interested in using such a system, and it likely wouldn’t be the only one.

“You could worry that all this stuff about trying to be safe and responsible when scaling AI … as soon as it seriously hurts the bottom lines of Google and Meta and Alibaba and the other major players, a lot of it will go out the window,” Aaronson said. “On the other hand, we’ve seen over the past 30 years that the big Internet companies can agree on certain minimal standards, whether because of fear of getting sued, desire to be seen as a responsible player, or whatever else.”