—Tate Ryan-Mosley, senior tech policy reporter

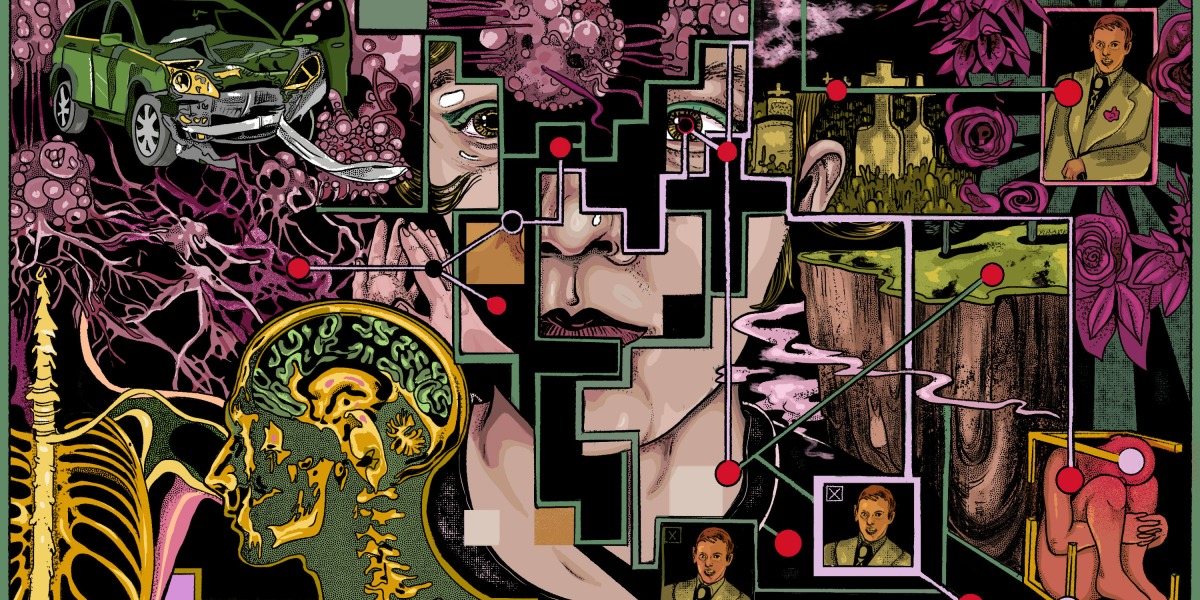

I’ve always been a super-Googler, coping with uncertainty by trying to learn as much as I can about whatever might be coming. That included my father’s throat cancer.

I started Googling the stages of grief, and books and academic research about loss, from the app on my iPhone, intentionally and unintentionally consuming people’s experiences of grief and tragedy through Instagram videos, various newsfeeds, and Twitter testimonials.

Yet with every search and click, I inadvertently created a sticky web of digital grief. Ultimately, it would prove nearly impossible to untangle myself from what the algorithms were serving me. I got out, eventually. But why is it so hard to unsubscribe from and opt out of content that we don’t want, even when it’s harmful to us? Read the full story.

AI models spit out photos of real people and copyrighted images

The news: Image generation models can be prompted to produce identifiable photos of real people, medical images, and copyrighted work by artists, according to new research.

How they did it: Researchers prompted Stable Diffusion and Google’s Imagen many times with captions for images, such as a person’s name. Then they analyzed whether any of the generated images matched original images in the model’s database. The team managed to extract over 100 replicas of images from the AI’s training set.

Why it matters: The finding could strengthen the case for artists who are currently suing AI companies for copyright violations, and could potentially threaten the privacy of the people depicted. It could also have implications for startups wanting to use generative AI models in health care, as it shows that these systems risk leaking sensitive private information. Read the full story.