The computational understanding of user interfaces (UI) is a key step towards achieving intelligent UI behaviors. Previously, we investigated various UI modeling tasks, including widget captioning, screen summarization, and command grounding, that address diverse interaction scenarios such as automation and accessibility. We also demonstrated how machine learning can help user experience practitioners improve UI quality by diagnosing tappability confusion and providing insights for improving UI design. These works, along with those developed by others in the field, have showcased how deep neural networks can potentially transform end-user experiences and interaction design practice.

With these successes in addressing individual UI tasks, a natural question is whether we can obtain foundational understandings of UIs that can benefit specific UI tasks. As our first attempt to answer this question, we developed a multi-task model to address a range of UI tasks simultaneously. Although the work made some progress, a few challenges remain. Previous UI models rely heavily on UI view hierarchies (i.e., the structure or metadata of a mobile UI screen, like the Document Object Model for a webpage) that allow a model to directly acquire detailed information about UI objects on the screen (e.g., their types, text content and positions). This metadata has given previous models advantages over their vision-only counterparts. However, view hierarchies are not always accessible, and are often corrupted with missing object descriptions or misaligned structure information. As a result, despite the short-term gains from using view hierarchies, relying on them may ultimately hamper model performance and applicability. In addition, previous models had to deal with heterogeneous information across datasets and UI tasks, which often resulted in complex model architectures that were difficult to scale or generalize across tasks.

In “Spotlight: Mobile UI Understanding using Vision-Language Models with a Focus”, accepted for publication at ICLR 2023, we present a vision-only approach that aims to achieve general UI understanding completely from raw pixels. We introduce a unified approach to represent diverse UI tasks, the information for which can be universally represented by two core modalities: vision and language. The vision modality captures what a person would see from a UI screen, and the language modality can be natural language or any token sequence related to the task. We demonstrate that Spotlight substantially improves performance on a range of UI tasks, including widget captioning, screen summarization, command grounding and tappability prediction.

Spotlight Model

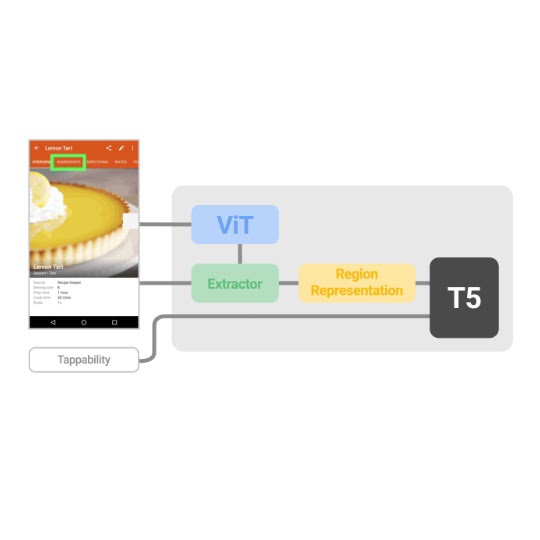

The Spotlight model input is a tuple of three items: the screenshot, the region of interest on the screen, and the text description of the task. The output is a text description or response about the region of interest. This simple input and output representation is expressive enough to capture various UI tasks and allows scalable model architectures. The design supports a spectrum of learning strategies and setups, from task-specific fine-tuning to multi-task learning and few-shot learning. The Spotlight model, as illustrated in the figure above, leverages existing architecture building blocks such as ViT and T5 that are pre-trained in the high-resourced, general vision-language domain, which allows us to build on top of the success of these general domain models.
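To make the unified (screenshot, region, task text) → text format concrete, here is a minimal sketch of how individual tasks could be cast into it. It assumes bounding boxes normalized to [0, 1] as (left, top, right, bottom); the class names, helper functions, prompt strings and target strings such as "not tappable" are hypothetical illustrations, not the paper's actual encoding. Command grounding is left out because its exact framing is described in the paper rather than here.

```python
from dataclasses import dataclass
from typing import Tuple

# Hypothetical convention: (left, top, right, bottom), normalized to [0, 1].
BBox = Tuple[float, float, float, float]
FULL_SCREEN: BBox = (0.0, 0.0, 1.0, 1.0)


@dataclass(frozen=True)
class SpotlightInput:
    screenshot_path: str  # raw pixels only; no view hierarchy is needed
    region: BBox          # region of interest on the screen
    task_prompt: str      # text description of the task (hypothetical wording)

    def __post_init__(self):
        left, top, right, bottom = self.region
        if not (0.0 <= left <= right <= 1.0 and 0.0 <= top <= bottom <= 1.0):
            raise ValueError(f"invalid region: {self.region}")


@dataclass(frozen=True)
class SpotlightExample:
    inputs: SpotlightInput
    target_text: str      # the output is always text


def widget_captioning(screenshot: str, widget_box: BBox, caption: str) -> SpotlightExample:
    return SpotlightExample(SpotlightInput(screenshot, widget_box, "widget captioning"), caption)


def screen_summarization(screenshot: str, summary: str) -> SpotlightExample:
    # For summarization the whole screen is the region of interest.
    return SpotlightExample(SpotlightInput(screenshot, FULL_SCREEN, "screen summarization"), summary)


def tappability(screenshot: str, object_box: BBox, is_tappable: bool) -> SpotlightExample:
    # Hypothetical target strings; a binary label is rendered as text.
    target = "tappable" if is_tappable else "not tappable"
    return SpotlightExample(SpotlightInput(screenshot, object_box, "tappability prediction"), target)


example = widget_captioning("screen_001.png", (0.05, 0.40, 0.12, 0.45), "select Chelsea team")
print(example.inputs.task_prompt, "->", example.target_text)
```

Because every task reduces to the same three inputs and a text target, one encoder-decoder can serve all of them without task-specific heads.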

Because UI tasks are often concerned with a specific object or area on the screen, which requires a model to be able to focus on the object or area of interest, we introduce a Focus Region Extractor to a vision-language model that enables the model to concentrate on the region in light of the screen context.

In particular, we design a Region Summarizer that acquires a latent representation of a screen region based on ViT encodings by using attention queries generated from the bounding box of the region (see the paper for more details). Specifically, each coordinate (a scalar value, i.e., the left, top, right or bottom) of the bounding box, denoted as a yellow box on the screenshot, is first embedded via a multilayer perceptron (MLP) as a collection of dense vectors, and then fed to a Transformer model along with their coordinate-type embedding. The dense vectors and their corresponding coordinate-type embeddings are color coded to indicate their affiliation with each coordinate value. Coordinate queries then attend to screen encodings output by ViT via cross attention, and the final attention output of the Transformer is used as the region representation for the downstream decoding by T5.

A target region on the screen is summarized by using its bounding box to query into screen encodings from ViT via attentional mechanisms.
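The Region Summarizer described above can be sketched in NumPy as follows. This is a minimal, untrained illustration under several assumptions: the bounding box is normalized to [0, 1] as (left, top, right, bottom); weights are random; the Transformer is reduced to one layer with single-head self-attention among the coordinate queries followed by cross-attention to the ViT encodings, with residual connections but no layer normalization or feed-forward sublayer; and the ViT encodings are assumed to already have the same width as the queries. The class name `RegionSummarizer`, `vecs_per_coord`, and all dimensions are hypothetical, not values from the paper.

```python
import numpy as np


def softmax(x, axis=-1):
    # Subtract the max for numerical stability.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def attention(queries, keys_values, w_q, w_k, w_v):
    """Single-head scaled dot-product attention."""
    q, k, v = queries @ w_q, keys_values @ w_k, keys_values @ w_v
    scores = q @ k.T / np.sqrt(q.shape[-1])
    return softmax(scores, axis=-1) @ v


def _init(rng, fan_in, fan_out):
    return rng.normal(0.0, 1.0 / np.sqrt(fan_in), (fan_in, fan_out))


class RegionSummarizer:
    """Hypothetical, untrained sketch: bounding-box queries into ViT encodings."""

    def __init__(self, d_model=32, vecs_per_coord=2, hidden=16, seed=0):
        rng = np.random.default_rng(seed)
        self.d, self.n = d_model, vecs_per_coord
        # MLP mapping one scalar coordinate to `vecs_per_coord` dense vectors.
        self.w1 = _init(rng, 1, hidden)
        self.b1 = rng.normal(0.0, 0.1, hidden)
        self.w2 = _init(rng, hidden, vecs_per_coord * d_model)
        # One embedding per coordinate type: left, top, right, bottom.
        self.coord_type = rng.normal(0.0, 0.1, (4, d_model))
        # (w_q, w_k, w_v) for self-attention among queries and for cross-attention.
        self.self_w = [_init(rng, d_model, d_model) for _ in range(3)]
        self.cross_w = [_init(rng, d_model, d_model) for _ in range(3)]

    def queries(self, bbox):
        coords = np.asarray(bbox, dtype=float).reshape(4, 1)   # (4, 1)
        h = np.maximum(coords @ self.w1 + self.b1, 0.0)        # ReLU, (4, hidden)
        vecs = (h @ self.w2).reshape(4, self.n, self.d)        # (4, n, d)
        vecs = vecs + self.coord_type[:, None, :]              # tag vectors with coordinate type
        return vecs.reshape(4 * self.n, self.d)                # (4n, d)

    def __call__(self, bbox, screen_encodings):
        q = self.queries(bbox)
        q = q + attention(q, q, *self.self_w)                  # queries attend to each other
        return q + attention(q, screen_encodings, *self.cross_w)  # cross-attend to ViT patches


patches = np.random.default_rng(1).normal(size=(196, 32))  # e.g., a 14x14 ViT patch grid
region = RegionSummarizer()([0.10, 0.20, 0.30, 0.25], patches)
print(region.shape)  # (8, 32): 4 coordinates x 2 vectors each
```

The output is a small set of region vectors rather than a single pooled vector, which a T5-style decoder could attend to alongside the rest of the encoder output.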

Results

We pre-train the Spotlight model using two unlabeled datasets: an internal dataset based on the C4 corpus with 80 million web pages, and an internal mobile dataset with 2.5 million mobile UI screens. We then separately fine-tune the pre-trained model for each of the four downstream tasks (captioning, summarization, grounding, and tappability). For widget captioning and screen summarization, we report CIDEr scores, which measure how similar a model's text description is to a set of references created by human raters. For command grounding, we report accuracy, which measures the percentage of times the model successfully locates a target object in response to a user command. For tappability prediction, we report F1 scores, which measure the model's ability to distinguish tappable objects from untappable ones.
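The grounding and tappability metrics fit in a few lines. This is a minimal sketch assuming grounding predictions are compared by a hypothetical object ID, and that "tappable" is the positive class for F1; the tables below appear to report these values scaled by 100. CIDEr is omitted because it relies on TF-IDF-weighted n-gram statistics over the whole reference set.

```python
from typing import Hashable, Sequence


def grounding_accuracy(predicted_ids: Sequence[Hashable], target_ids: Sequence[Hashable]) -> float:
    """Fraction of commands for which the predicted object is the target object."""
    if not target_ids or len(predicted_ids) != len(target_ids):
        raise ValueError("need equally sized, non-empty prediction and target lists")
    hits = sum(p == t for p, t in zip(predicted_ids, target_ids))
    return hits / len(target_ids)


def tappability_f1(predicted: Sequence[bool], actual: Sequence[bool]) -> float:
    """F1 score with 'tappable' (True) as the positive class."""
    tp = sum(p and a for p, a in zip(predicted, actual))
    fp = sum(p and not a for p, a in zip(predicted, actual))
    fn = sum(a and not p for p, a in zip(predicted, actual))
    if tp == 0:
        return 0.0
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


print(grounding_accuracy([3, 7, 2], [3, 5, 2]))
print(tappability_f1([True, True, False, False], [True, False, True, False]))
```

F1 is used for tappability rather than plain accuracy because it is sensitive to errors on the positive class even when tappable and untappable objects are imbalanced.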

In this experiment, we compare Spotlight with several benchmark models. Widget Caption uses the view hierarchy and the image of each UI object to generate a text description for the object. Similarly, Screen2Words uses the view hierarchy and the screenshot as well as auxiliary features (e.g., app description) to generate a summary for the screen. In the same vein, VUT combines screenshots and view hierarchies for performing multiple tasks. Finally, the original Tappability model leverages object metadata from the view hierarchy and the screenshot to predict object tappability. Taperception, a follow-up model of Tappability, uses a vision-only tappability prediction approach. We examine two Spotlight model variants that differ in the size of their ViT building block: B/16 and L/16. Both variants drastically exceeded the state of the art on captioning, summarization and grounding. On tappability, L/16 outperformed the best baseline (88.4 vs. 87.9 F1), while B/16 fell slightly short (86.9).

| Group | Model | Captioning (CIDEr) | Summarization (CIDEr) | Grounding (accuracy) | Tappability (F1) |
|---|---|---|---|---|---|
| Baselines | Widget Caption | 97 | – | – | – |
|  | Screen2Words | – | 61.3 | – | – |
|  | VUT | 99.3 | 65.6 | 82.1 | – |
|  | Taperception | – | – | – | 85.5 |
|  | Tappability | – | – | – | 87.9 |
| Spotlight | B/16 | 136.6 | 103.5 | 95.7 | 86.9 |
|  | L/16 | 141.8 | 106.7 | 95.8 | 88.4 |
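The size of each improvement can be read off the table with a short calculation. This sketch simply copies the scores from the table and subtracts, for each task, the best baseline score (VUT for captioning, summarization and grounding; the original Tappability model for tappability); the dictionary layout and the `margins` helper are illustrative only.

```python
# Scores copied from the table: CIDEr (captioning, summarization),
# accuracy (grounding), F1 (tappability).
best_baseline = {
    "captioning": 99.3,     # VUT
    "summarization": 65.6,  # VUT
    "grounding": 82.1,      # VUT
    "tappability": 87.9,    # Tappability
}
spotlight = {
    "B/16": {"captioning": 136.6, "summarization": 103.5, "grounding": 95.7, "tappability": 86.9},
    "L/16": {"captioning": 141.8, "summarization": 106.7, "grounding": 95.8, "tappability": 88.4},
}


def margins(scores, baseline):
    """Per-task difference from the best baseline, rounded to one decimal."""
    return {task: round(scores[task] - baseline[task], 1) for task in baseline}


for variant, scores in spotlight.items():
    print(variant, margins(scores, best_baseline))
```

The margins are large for the three generation and grounding tasks and small for tappability, where only the L/16 variant is ahead.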

We then pursue a more challenging setup in which we ask the model to learn multiple tasks simultaneously, because a multi-task model can substantially reduce the model footprint. As shown in the table below, our model still performs competitively, and both variants outperform the VUT multi-task model on every task that VUT reports.

| Model | Captioning (CIDEr) | Summarization (CIDEr) | Grounding (accuracy) | Tappability (F1) |
|---|---|---|---|---|
| VUT multi-task | 99.3 | 65.1 | 80.8 | – |
| Spotlight B/16 | 140 | 102.7 | 90.8 | 89.4 |
| Spotlight L/16 | 141.3 | 99.2 | 94.2 | 89.5 |
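Because every task shares the same input and output format, multi-task training can draw examples from several task datasets into one stream. The sketch below shows one simple way to do that, assuming uniform sampling over tasks; the mixing strategy actually used for Spotlight may differ, and `multitask_stream` and `make_batch` are hypothetical helpers.

```python
import random
from typing import Dict, Iterator, List, Sequence, Tuple


def multitask_stream(datasets: Dict[str, Sequence], seed: int = 0) -> Iterator[Tuple[str, object]]:
    """Yield (task_name, example) pairs, choosing a task uniformly at random each step."""
    rng = random.Random(seed)
    names = sorted(datasets)  # sort for reproducibility across dict orderings
    while True:
        task = rng.choice(names)
        yield task, rng.choice(datasets[task])


def make_batch(stream: Iterator[Tuple[str, object]], batch_size: int) -> List[Tuple[str, object]]:
    """Pull one mixed-task batch from the stream."""
    return [next(stream) for _ in range(batch_size)]


data = {
    "captioning": ["cap_0", "cap_1"],
    "summarization": ["sum_0"],
    "tappability": ["tap_0", "tap_1", "tap_2"],
}
print(make_batch(multitask_stream(data, seed=7), 4))
```

With a single shared model, serving all tasks requires one set of weights instead of one fine-tuned copy per task, which is where the footprint saving comes from.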

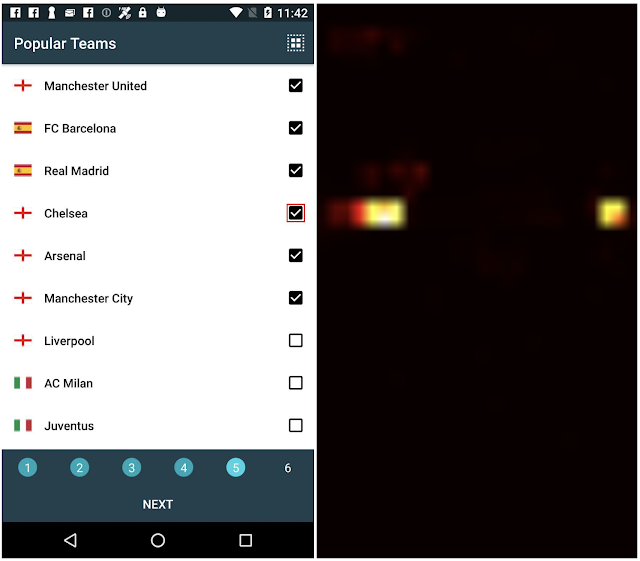

To understand how the Region Summarizer enables Spotlight to focus on a target region and relevant areas on the screen, we analyze the attention weights (which indicate where the model's attention is on the screenshot) for both widget captioning and screen summarization tasks. In the figure above, for the widget captioning task, the model predicts “select Chelsea team” for the checkbox on the left side, highlighted with a red bounding box. We can see from its attention heatmap (which illustrates the distribution of attention weights) on the right that the model learns to attend not only to the target region of the checkbox, but also to the text “Chelsea” on the far left to generate the caption. For the screen summarization example, the model predicts “page displaying the tutorial of a learning app” given the screenshot on the left. In this example, the target region is the entire screen, and the model learns to attend to important parts of the screen for summarization.
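A heatmap like the ones described can be produced by projecting attention weights over ViT patches back onto the screenshot grid. This is a minimal sketch assuming weights of shape (heads, queries, patches) over a `rows × cols` patch grid with square patches; averaging over heads and queries, max-normalization and nearest-neighbour upsampling are illustrative choices, not necessarily how the figures in this post were made.

```python
import numpy as np


def attention_heatmap(weights, grid_hw, patch_px):
    """Turn cross-attention weights into a pixel-level heatmap in [0, 1].

    weights:  (num_heads, num_queries, num_patches) attention probabilities
    grid_hw:  (rows, cols) of the ViT patch grid; rows * cols == num_patches
    patch_px: side length of one square patch in pixels
    """
    weights = np.asarray(weights, dtype=float)
    rows, cols = grid_hw
    if weights.shape[-1] != rows * cols:
        raise ValueError("number of patches does not match the grid")
    per_patch = weights.mean(axis=(0, 1))          # average over heads and queries
    grid = per_patch.reshape(rows, cols)
    grid = grid / grid.max()                       # scale so the hottest patch is 1
    return np.kron(grid, np.ones((patch_px, patch_px)))  # nearest-neighbour upsampling


rng = np.random.default_rng(0)
logits = rng.normal(size=(2, 8, 12))               # 2 heads, 8 queries, 3x4 patch grid
probs = np.exp(logits) / np.exp(logits).sum(axis=-1, keepdims=True)
heat = attention_heatmap(probs, (3, 4), patch_px=16)
print(heat.shape)  # (48, 64)
```

The resulting array can be overlaid on the screenshot with any plotting library to show which patches the region queries attend to.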

Conclusion

We demonstrate that Spotlight outperforms previous methods that use both screenshots and view hierarchies as the input, and establishes state-of-the-art results on multiple representative UI tasks. These tasks range from accessibility and automation to interaction design and evaluation. Our vision-only approach for mobile UI understanding removes the need for view hierarchies, allows the architecture to scale easily, and benefits from the success of large vision-language models pre-trained for the general domain. Compared to recent large vision-language model efforts such as Flamingo and PaLI, Spotlight is relatively small, and our experiments show a trend that larger models yield better performance. Spotlight can be easily applied to more UI tasks and could potentially advance many interaction and user experience tasks.

Acknowledgment

We thank Mandar Joshi and Tao Li for their help in processing the web pre-training dataset, and Chin-Yi Cheng and Forrest Huang for their feedback on proofreading the paper. Thanks to Tom Small for his help in creating the animated figures in this post.