In the future, we imagine that teams of robots will explore and develop the surface of nearby planets, moons and asteroids – taking samples, building structures, deploying instruments. Hundreds of bright research minds are busy designing such robots. We are interested in another question: how do we provide astronauts with the tools to operate their robot teams on the planetary surface efficiently, in a way that doesn’t frustrate or exhaust them?

Received wisdom says that more automation is always better. After all, with automation the job usually gets done faster, and the more tasks (or sub-tasks) robots can do on their own, the lower the workload on the operator. Imagine a robot building a structure or setting up a telescope array, planning and executing tasks by itself, much like a “factory of the future”, with only sporadic input from an astronaut supervisor orbiting in a spaceship. This is something we tested in the ISS experiment SUPVIS Justin in 2017-18, with astronauts on board the ISS commanding the DLR Robotics and Mechatronics Center’s humanoid robot, Rollin’ Justin, in Supervised Autonomy.

However, the unstructured environment and harsh lighting on planetary surfaces make things difficult for even the best object-detection algorithms. And what happens when things go wrong, or when a task needs to be done that the robot programmers did not foresee? In a factory on Earth, the supervisor could go down to the shop floor to set things right – an expensive and dangerous trip if you are an astronaut!

The next best thing is to operate the robot as an avatar of yourself on the planet’s surface – seeing what it sees, feeling what it feels. Immersing yourself in the robot’s environment, you can command the robot to do exactly what you want – subject to its physical capabilities.

Space Experiments

In 2019, we tested this in our next ISS experiment, ANALOG-1, with the Interact Rover from ESA’s Human Robot Interaction Lab. This is an all-wheel-drive platform with two robot arms, both equipped with cameras and one fitted with a gripper and a force-torque sensor, as well as numerous other sensors.

On a laptop screen on the ISS, the astronaut, Luca Parmitano, saw the views from the robot’s cameras, and could move one camera and drive the platform with a custom-built joystick. The manipulator arm was controlled with the sigma.7 force-feedback device: the astronaut strapped his hand to it, and could move the robot arm and open its gripper by moving and opening his own hand. He could also feel the forces from touching the ground or the rock samples. This was crucial for helping him understand the situation, since the low bandwidth to the ISS limited the quality of the video feed.

There were other challenges. Over such large distances, delays of up to a second are typical, which means that traditional teleoperation with force feedback could become unstable. Furthermore, the time delay between the robot making contact with the environment and the astronaut feeling it can lead to dangerous motions that could damage the robot.

To help with this, we developed a control method: the Time Domain Passivity Approach for High Delays (TDPA-HD). It monitors the amount of energy that the operator puts in (i.e. force multiplied by velocity, integrated over time), and sends that value along with the velocity command. On the robot side, it measures the force that the robot is exerting, and reduces the velocity so that the robot does not transfer more energy to the environment than the operator put in.

On the human’s side, it reduces the force feedback so that no more energy is transferred to the operator than is measured from the environment. This means that the system stays stable, and also that the operator never accidentally commands the robot to exert more force on the environment than they intend to – keeping both operator and robot safe.
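The energy bookkeeping described above can be illustrated with a minimal single-axis sketch in Python. It is not DLR’s TDPA-HD implementation. The names (`EnergyObserver`, `limit_output`, `robot_step`, `operator_step`, `simulate`), the spring-like wall, the fixed one-way delay and all numeric values are hypothetical, chosen only for illustration. Each side keeps running totals of the energy it has taken in and given out (power = force × velocity, summed over time steps). The robot scales down its velocity so that its energy output to the environment never exceeds the operator’s input energy, as received over the delayed link. The operator side scales down the displayed force in the same way, using the energy measured from the environment.

```python
from collections import deque
from dataclasses import dataclass


@dataclass
class EnergyObserver:
    """Running totals of energy entering (e_in) and leaving (e_out) one side of the link."""
    e_in: float = 0.0
    e_out: float = 0.0

    def update(self, power_out: float, dt: float) -> None:
        # power_out > 0: energy leaves this side; power_out < 0: energy enters it.
        if power_out > 0.0:
            self.e_out += power_out * dt
        else:
            self.e_in -= power_out * dt


def limit_output(x: float, y: float, e_out: float, budget: float, dt: float) -> float:
    """Shrink x so that this step's output energy x*y*dt keeps e_out within budget."""
    p = x * y
    if p <= 0.0:
        return x  # no energy leaves through this port, nothing to limit
    allowed = max(budget - e_out, 0.0)
    if p * dt <= allowed:
        return x
    return allowed / (y * dt)  # same sign as x, smaller magnitude


def robot_step(v_cmd, f_env, e_operator_in, obs, dt):
    """Hypothetical robot side: energy into the environment <= operator's input energy."""
    v = limit_output(v_cmd, f_env, obs.e_out, e_operator_in, dt)
    obs.update(v * f_env, dt)  # v * f_env > 0: robot does work on the environment
    return v


def operator_step(f_robot, v_hand, e_env_in, obs, dt):
    """Hypothetical operator side: energy delivered to the operator <= energy from the environment."""
    # The displayed force resists motion, so power delivered to the operator is -f * v_hand.
    f = limit_output(f_robot, -v_hand, obs.e_out, e_env_in, dt)
    obs.update(-f * v_hand, dt)
    return f


def simulate(delay_steps=100, dt=0.001, steps=2000, k=2000.0, wall=0.05, v_hand=0.1):
    """Operator moves at constant speed towards a spring wall; one-way delay = delay_steps * dt (>= 1 step)."""
    op, rob = EnergyObserver(), EnergyObserver()
    to_robot = deque([(0.0, 0.0)] * delay_steps)     # (velocity command, operator e_in)
    to_operator = deque([(0.0, 0.0)] * delay_steps)  # (measured force, robot e_in)
    x, f_disp = 0.0, 0.0
    for _ in range(steps):
        # Operator side: display the delayed force, limited by the delayed environment energy.
        f_delayed, e_env = to_operator.popleft()
        f_disp = operator_step(f_delayed, v_hand, e_env, op, dt)
        to_robot.append((v_hand, op.e_in))

        # Robot side: follow the delayed velocity command, limited by the delayed operator energy.
        v_cmd, e_op = to_robot.popleft()
        f_env = k * (x - wall) if x > wall else 0.0  # force the robot exerts on the wall
        v = robot_step(v_cmd, f_env, e_op, rob, dt)
        x += v * dt
        to_operator.append((f_env, rob.e_in))
    return x, f_disp, op, rob


x, f_disp, op, rob = simulate()
print(f"penetration {x - 0.05:.4f} m, operator input {op.e_in:.4f} J, "
      f"energy into environment {rob.e_out:.4f} J")
```

In this toy run the robot halts at first contact, because the operator has not yet felt any force and so has put in no energy to spend. It only pushes further once the operator, after the round-trip delay, feels the contact and pushes against it. The real controller is more involved than this sketch.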

This was the first time that an astronaut had teleoperated a robot from space while feeling force feedback in all six degrees of freedom (three rotational, three translational). The astronaut completed all the sampling tasks assigned to him, while we gathered valuable data to validate our method, which we published in Science Robotics. We also reported our findings on the astronaut’s experience.

Some things were still missing. The experiment was conducted in a hangar on an old Dutch air base – not really representative of a planetary surface.

Also, the astronaut asked whether the robot could do more by itself. This contrasted with SUPVIS Justin, where the astronauts sometimes found the Supervised Autonomy interface limiting and wished for more immersion. What if the operator could choose the level of robot autonomy appropriate to the task?

Scalable Autonomy

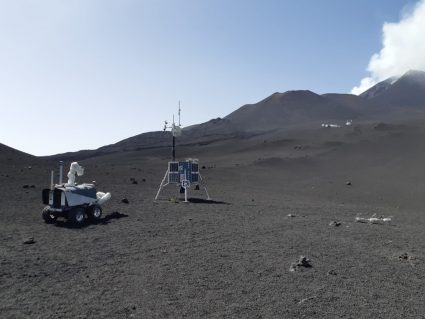

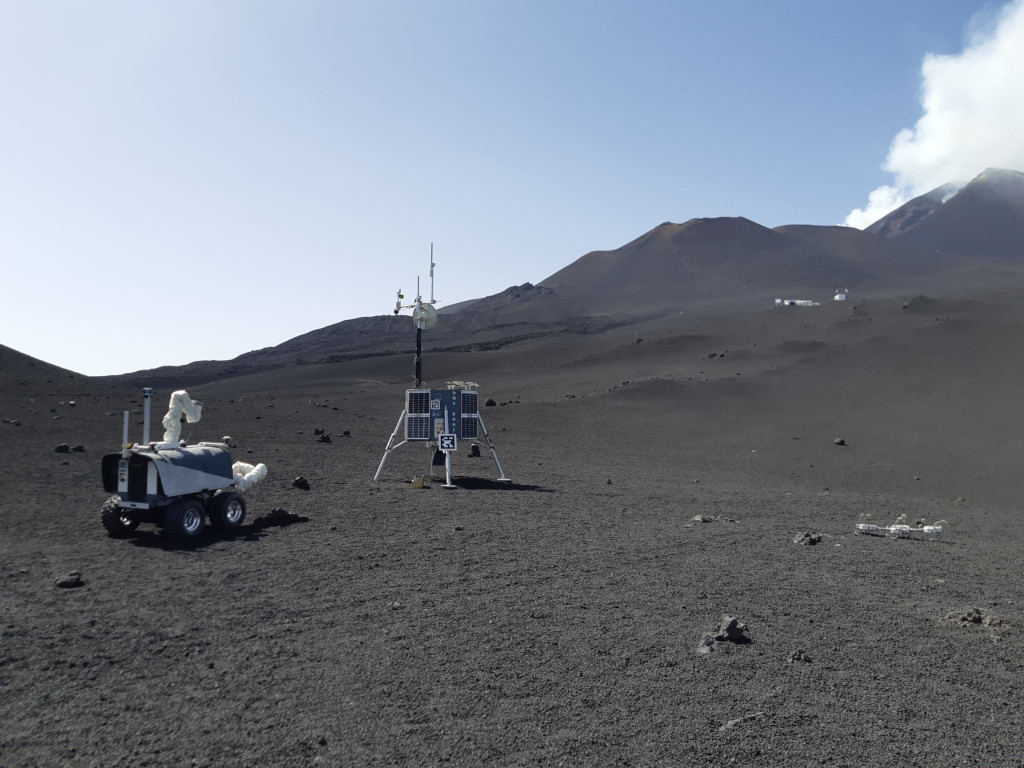

In June and July 2022, we joined DLR’s ARCHES experiment campaign on Mt. Etna. The robot, on a lava field 2,700 metres above sea level, was controlled by former astronaut Thomas Reiter from the control room in the nearby city of Catania. Looking through the robot’s cameras, it wasn’t a great leap of the imagination to picture yourself on another planet – save for the occasional bumblebee or group of tourists.

This was our first venture into “Scalable Autonomy”: allowing the astronaut to scale the robot’s autonomy up or down according to the task. In 2019, Luca could only see through the robot’s cameras and drive with a joystick; this time, Thomas Reiter had an interactive map on which he could place markers for the robot to drive to automatically. In 2019, the astronaut could control the robot arm with force feedback; now he could also automatically detect and collect rocks with help from a Mask R-CNN (region-based convolutional neural network).

We learned a lot from testing our system in a realistic environment. Not least, that the assumption that more automation means a lower astronaut workload is not always true. While the astronaut used the automated rock-picking a lot, he warmed less to the automated navigation, indicating that it took more effort than driving with the joystick. We suspect that many more factors come into play, including how much the astronaut trusts the automated system, how well it works, and the feedback the astronaut gets from it on screen – not to mention the delay. The longer the delay, the harder it is to create an immersive experience (think of online video games with lots of lag), and therefore the more attractive autonomy becomes.

What are the next steps? We want to test a truly scalable-autonomy, multi-robot scenario. We are working towards this in the project Surface Avatar: in a large-scale Mars-analog environment, astronauts on the ISS will command a team of four robots on the ground. After two preliminary tests with astronauts Samantha Cristoforetti and Jessica Watkins in 2022, the first big experiment is planned for 2023.

Here the technical challenges are different. Beyond the formidable engineering challenge of getting four robots to work together with a shared understanding of their world, we also have to predict which tasks will be easier for the astronaut at which level of autonomy, and when and how she might scale the autonomy up or down. Then we have to integrate all of this into one intuitive user interface.

The insights we hope to gain from this would be useful not only for space exploration, but for any operator commanding a team of robots at a distance: for maintaining solar or wind energy parks, for example, or for search and rescue missions. A space experiment of this kind and scale will be our most complex ISS telerobotic mission yet, but we are looking forward to this exciting challenge.


Aaron Pereira

is a researcher at the German Aerospace Centre (DLR) and a guest researcher at ESA’s Human Robot Interaction Lab.

Neal Y. Lii

is the domain head of Space Robotic Assistance, and the co-founding head of the Modular Dexterous (Modex) Robotics Laboratory at the German Aerospace Center (DLR).

Thomas Krueger

is head of the Human Robot Interaction Lab at ESA.