Google sees AI as a foundational and transformational technology, with recent advances in generative AI technologies, such as LaMDA, PaLM, Imagen, Parti, MusicLM, and similar machine learning (ML) models, some of which are now being incorporated into our products. This transformative potential requires us to be responsible not only in how we advance our technology, but also in how we envision which technologies to build, and how we assess the social impact AI and ML-enabled technologies have on the world. This endeavor necessitates fundamental and applied research with an interdisciplinary lens that engages with, and accounts for, the social, cultural, economic, and other contextual dimensions that shape the development and deployment of AI systems. We must also understand the range of possible impacts that ongoing use of such technologies may have on vulnerable communities and broader social systems.

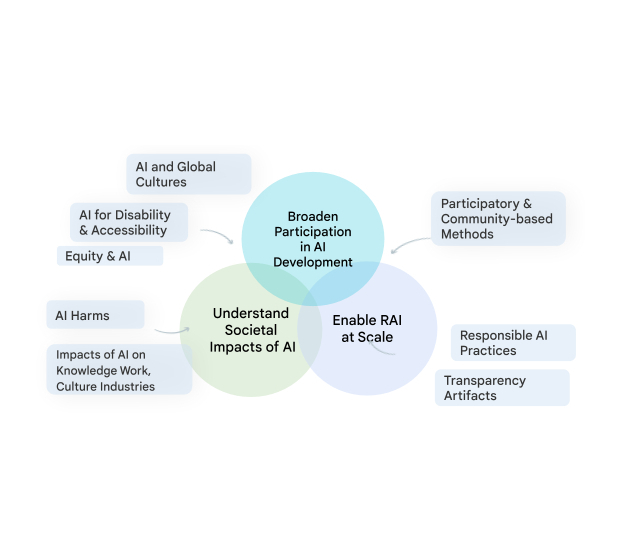

Our team, Technology, AI, Society, and Culture (TASC), is addressing this critical need. Research on the societal impacts of AI is complex and multi-faceted; no one disciplinary or methodological perspective can alone provide the diverse insights needed to grapple with the social and cultural implications of ML technologies. TASC thus leverages the strengths of an interdisciplinary team, with backgrounds ranging from computer science to social science, digital media, and urban science. We use a multi-method approach with qualitative, quantitative, and mixed methods to critically examine and shape the social and technical processes that underpin and surround AI technologies. We focus on participatory, culturally-inclusive, and intersectional equity-oriented research that brings impacted communities to the foreground. Our work advances Responsible AI (RAI) in areas such as computer vision, natural language processing, health, and general purpose ML models and applications. Below, we share examples of our approach to Responsible AI and where we’re headed in 2023.

Theme 1: Culture, communities, & AI

One of our key areas of research is the advancement of methods to make generative AI technologies more inclusive of and valuable to people globally, through community-engaged and culturally-inclusive approaches. Toward this aim, we see communities as experts in their context, recognizing their deep knowledge of how technologies can and should impact their own lives. Our research champions the importance of embedding cross-cultural considerations throughout the ML development pipeline. Community engagement enables us to shift how we incorporate knowledge of what’s most important throughout this pipeline, from dataset curation to evaluation. This also enables us to understand and account for the ways in which technologies fail and how specific communities might experience harm. Based on this understanding, we have created responsible AI evaluation strategies that are effective in recognizing and mitigating biases along multiple dimensions.

Our work in this area is vital to ensuring that Google’s technologies are safe for, work for, and are useful to a diverse set of stakeholders around the world. For example, our research on user attitudes towards AI, responsible interaction design, and fairness evaluations with a focus on the global south demonstrated the cross-cultural differences in the impact of AI and contributed resources that enable culturally-situated evaluations. We’re also building cross-disciplinary research communities to examine the relationship between AI, culture, and society, through our recent and upcoming workshops on Cultures in AI/AI in Culture, Ethical Considerations in Creative Applications of Computer Vision, and Cross-Cultural Considerations in NLP.

Our recent research has also sought out perspectives of particular communities who are known to be less represented in ML development and applications. For example, we have investigated gender bias, both in natural language and in contexts such as gender-inclusive health, drawing on our research to develop more accurate evaluations of bias so that anyone developing these technologies can identify and mitigate harms for people with queer and non-binary identities.

Theme 2: Enabling Responsible AI throughout the development lifecycle

We work to enable RAI at scale by establishing industry-wide best practices for RAI across the development pipeline, and ensuring our technologies verifiably incorporate those best practices by default. This applied research includes responsible data production and analysis for ML development, and systematically advancing tools and practices that support practitioners in meeting key RAI goals like transparency, fairness, and accountability. Extending earlier work on Data Cards, Model Cards, and the Model Card Toolkit, we released the Data Cards Playbook, providing developers with methods and tools to document appropriate uses and essential facts related to a dataset. Because ML models are often trained and evaluated on human-annotated data, we also advance human-centric research on data annotation. We have developed frameworks to document annotation processes and methods to account for rater disagreement and rater diversity. These methods enable ML practitioners to better ensure diversity in annotation of datasets used to train models, by identifying current barriers and re-envisioning data work practices.
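To make the idea of accounting for rater disagreement concrete, here is a minimal sketch in Python. It is not the team's published method: the function names, the sample labels, and the choice of normalized entropy as a disagreement score are all assumptions made for illustration. The point it shows is that keeping the full distribution of rater labels, optionally broken out by rater group, preserves information that a single majority-vote label would discard.

```python
from collections import Counter
from math import log2


def label_distribution(labels):
    """Return the share of raters who chose each label for one item."""
    counts = Counter(labels)
    total = len(labels)
    return {label: n / total for label, n in counts.items()}


def disagreement(labels):
    """Normalized entropy of one item's labels (illustrative measure).

    0.0 means all raters agreed; 1.0 means raters were evenly split
    across the labels that were actually used.
    """
    dist = label_distribution(labels)
    if len(dist) < 2:
        return 0.0
    entropy = -sum(p * log2(p) for p in dist.values())
    return entropy / log2(len(dist))


def distribution_by_group(ratings):
    """Group (rater_group, label) pairs and return per-group label shares.

    The rater_group values are hypothetical; a real study would define
    them with care and with the raters' consent.
    """
    groups = {}
    for group, label in ratings:
        groups.setdefault(group, []).append(label)
    return {g: label_distribution(ls) for g, ls in groups.items()}
```

For a hypothetical item rated `["offensive", "offensive", "ok", "ok"]`, `disagreement` returns `1.0`, which flags the item for closer review instead of silently assigning it one label.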

Future directions

We are now working to further broaden participation in ML model development, through approaches that embed a diversity of cultural contexts and voices into technology design, development, and impact assessment to ensure that AI achieves societal goals. We’re also redefining responsible practices that can handle the scale at which ML technologies operate in today’s world. For example, we’re developing frameworks and structures that can enable community engagement within industry AI research and development, including community-centered evaluation frameworks, benchmarks, and dataset curation and sharing.

In particular, we’re furthering our prior work on understanding how NLP language models may perpetuate bias against people with disabilities, extending this research to address other marginalized communities and cultures and to include image, video, and other multimodal models. Such models may contain tropes and stereotypes about particular groups or may erase the experiences of specific individuals or communities. Our efforts to identify sources of bias within ML models will lead to better detection of these representational harms and will support the creation of more fair and inclusive systems.

TASC is about studying all the touchpoints between AI and people, from individuals and communities to cultures and society. For AI to be culturally-inclusive, equitable, accessible, and reflective of the needs of impacted communities, we must take on these challenges with inter- and multidisciplinary research that centers those communities. Our research studies will continue to explore the interactions between society and AI, furthering the discovery of new ways to develop and evaluate AI so that we can build more robust and culturally-situated AI technologies.

Acknowledgements

We would like to thank everyone on the team who contributed to this blog post. In alphabetical order by last name: Cynthia Bennett, Eric Corbett, Aida Mostafazadeh Davani, Emily Denton, Sunipa Dev, Fernando Diaz, Mark Díaz, Shaun Kane, Shivani Kapania, Michael Madaio, Vinodkumar Prabhakaran, Rida Qadri, Renee Shelby, Ding Wang, and Andrew Zaldivar. We would also like to thank Toju Duke and Marian Croak for their valuable feedback and suggestions.