Natural language processing is one of the hottest topics of discussion in the AI landscape. It is a vital tool for creating generative AI applications that can write essays and chatbots that can interact personally with human users. As the popularity of ChatGPT soared, attention toward the best NLP models gained momentum. Natural language processing focuses on building machines that can interpret and manipulate natural human language.

It has evolved from the field of computational linguistics and uses computer science to understand the principles of language. Natural language processing is an integral part of transforming many aspects of people’s everyday lives. On top of that, the commercial applications of NLP models have drawn attention to them. Let us learn more about the most renowned NLP models and how they differ from one another.

What Is the Significance of NLP Models?

The search for natural language processing models draws attention to their utility. What is the reason for learning about NLP models? NLP models have become one of the most noticeable highlights in the world of AI because of their varied use cases. The common tasks for which NLP models have gained attention include sentiment analysis, machine translation, spam detection, named entity recognition, and grammatical error correction. They can also help with topic modeling, text generation, information retrieval, question answering, and summarization.

All the top NLP models work by identifying the relationships between different components of language, such as the letters, words, and sentences in a text dataset. NLP models use different methods for the distinct stages of data preprocessing, feature extraction, and modeling.

The data preprocessing stage improves model performance and turns words and characters into a format the model can understand. Data preprocessing is an integral part of the adoption of data-centric AI. Some of the notable techniques for data preprocessing include sentence segmentation, tokenization, stemming and lemmatization, and stop-word removal.
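The preprocessing techniques named above can be sketched in a few lines of standard-library Python. This is a minimal illustration rather than a production pipeline: the stop-word list is a tiny hypothetical sample, and `crude_stem` is a stand-in for a real stemmer or lemmatizer.

```python
import re

# Tiny illustrative stop-word list (an assumption; real lists are much longer).
STOP_WORDS = {"the", "a", "an", "is", "are", "of", "and", "to", "in"}


def split_sentences(text):
    """Naive sentence segmentation: split after '.', '!' or '?' plus whitespace."""
    return [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]


def tokenize(sentence):
    """Lowercase the sentence and keep runs of letters, digits, and apostrophes."""
    return re.findall(r"[a-z0-9']+", sentence.lower())


def remove_stop_words(tokens):
    """Drop tokens that appear in the stop-word list."""
    return [t for t in tokens if t not in STOP_WORDS]


def crude_stem(token):
    """Strip a few common suffixes; a stand-in for a real stemming algorithm."""
    for suffix in ("ing", "ed", "s"):
        if token.endswith(suffix) and len(token) - len(suffix) >= 3:
            return token[: -len(suffix)]
    return token


def preprocess(text):
    """Return one list of stemmed, stop-word-free tokens per sentence."""
    return [
        [crude_stem(t) for t in remove_stop_words(tokenize(s))]
        for s in split_sentences(text)
    ]
```

Calling `preprocess("The models are learning. Spam emails flooded the inbox!")` yields one token list per sentence, which is the kind of input the feature extraction stage expects.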

The feature extraction stage focuses on features, or numbers, that describe the relationship between documents and the text they contain. Some of the conventional techniques for feature extraction include bag-of-words, generic feature engineering, and TF-IDF. Newer techniques include GloVe, Word2Vec, and learning the important features during the training process of neural networks.
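To make bag-of-words and TF-IDF concrete, here is a minimal sketch using only the standard library. It assumes documents are already tokenized, and it uses one common TF-IDF variant (term frequency times the natural log of inverse document frequency, with no smoothing); libraries often apply slightly different formulas.

```python
import math
from collections import Counter


def bag_of_words(tokens):
    """Count how often each token occurs in one document."""
    return Counter(tokens)


def tf_idf(docs):
    """docs: list of token lists. Returns one {term: weight} dict per document."""
    n_docs = len(docs)
    # Document frequency: in how many documents does each term appear?
    df = Counter()
    for doc in docs:
        df.update(set(doc))
    weights = []
    for doc in docs:
        counts = Counter(doc)
        total = len(doc)
        weights.append({
            term: (count / total) * math.log(n_docs / df[term])
            for term, count in counts.items()
        })
    return weights
```

A term that appears in every document gets a weight of zero under this variant, which is the intended effect: it carries no information for telling documents apart.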

The final stage, modeling, is where NLP models are actually created. Once you have preprocessed the data, you can feed it into an NLP architecture that models the data to accomplish the desired tasks. For example, the numerical features from the previous stage can serve as inputs to different models. Deep neural networks and language models are the most notable examples of modeling.

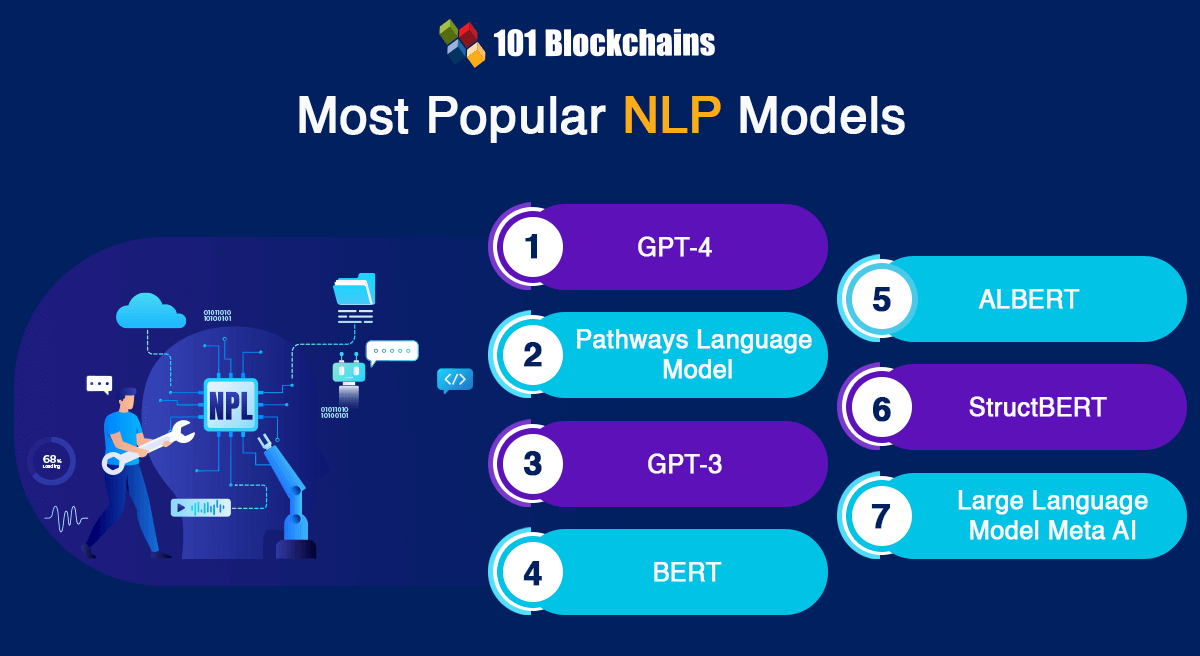

Most Popular Natural Language Processing Models

The arrival of pre-trained language models and transfer learning in NLP set new benchmarks for language understanding and generation. Recent research advances in NLP models include transfer learning and the application of transformers to different kinds of downstream NLP tasks. However, curiosity about questions such as ‘Which NLP model gives the best accuracy?’ would lead you toward some of the popular names.

You may come across conflicting views in the NLP community about the value of massive pre-trained language models. On the other hand, the latest advances in NLP have been driven by huge improvements in computing capacity alongside the discovery of new techniques for optimizing models to achieve high performance. Here is an outline of the most renowned or commonly used NLP models that you should watch for in the AI landscape.

Generative Pre-trained Transformer 4

Generative Pre-trained Transformer 4, or GPT-4, is the most popular NLP model on the market right now. As a matter of fact, it tops the list of NLP models because of the popularity of ChatGPT. If you have used ChatGPT Plus, then you have used GPT-4. It is a large language model created by OpenAI, and its multimodal nature means it can take images as well as text as input. Therefore, GPT-4 is considerably more versatile than the earlier GPT models, which could only take text inputs.

During development, GPT-4 was trained to predict the next token in a sequence. In addition, it went through fine-tuning that leveraged feedback from humans and AI systems. It serves as a notable example of aligning a model with human values and specified policies for AI use.
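To illustrate what next-token prediction means as a training objective, here is a toy sketch that predicts the next word from bigram counts. It is only an analogy: GPT-4 is a large neural network, not a count table, and the function names here are hypothetical.

```python
from collections import Counter, defaultdict


def train_bigram(tokens):
    """Count, for every token, which tokens follow it and how often."""
    follows = defaultdict(Counter)
    for current, nxt in zip(tokens, tokens[1:]):
        follows[current][nxt] += 1
    return follows


def predict_next(follows, token):
    """Return the most frequent follower of `token`, or None if it was never seen."""
    if token not in follows:
        return None
    return follows[token].most_common(1)[0][0]
```

A large language model replaces the count table with learned parameters and conditions on a long context instead of a single previous word, but the task is the same: given what came before, score the candidates for what comes next.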

GPT-4 has played a crucial role in enhancing the capabilities of ChatGPT. On the other hand, it still has some of the limitations that were present in earlier models. Note that OpenAI has not disclosed GPT-4’s parameter count. The figure of 175 billion parameters often quoted for it is the size of GPT-3, and claims that GPT-4 is 10 times larger than GPT-3.5, the model behind the original ChatGPT, are unconfirmed.

Pathways Language Model

The next addition among the best NLP models is the Pathways Language Model, or PaLM. One of the most striking highlights of PaLM is that it was created by the Google Research team. With 540 billion parameters, it represents a major advance in the field of language technology.

The training of PaLM relies on an efficient computing system called Pathways, which coordinates training across many processors. One of the most important highlights of PaLM is the scalability of its training process. Training PaLM involved 6,144 TPU v4 chips, which makes it one of the largest TPU-based training runs.

PaLM is one of the popular NLP models with the potential to revolutionize the NLP landscape. It was trained on a mix of sources, including datasets in English and many other languages. The training datasets include books, conversations, code from GitHub, web documents, and Wikipedia content.

With such an extensive training dataset, PaLM delivers excellent performance in language tasks such as sentence completion and question answering. It also excels at reasoning and can handle complex math problems while providing clear explanations. In coding, PaLM performs comparably to specialized models while requiring less code in its training data.

Generative Pre-trained Transformer 3

GPT-3 is a transformer-based NLP model that can perform question answering, translation, and poetry composition. It is also one of the top NLP models that can work on tasks involving reasoning, such as unscrambling words. On top of that, recent developments in GPT-3 offer the flexibility to write news articles and generate code. GPT-3 works by modeling the statistical dependencies between different words.

GPT-3 has 175 billion parameters, and its training data was drawn from roughly 45 TB of text sourced from the internet. This scale makes GPT-3 one of the largest pre-trained NLP models. Another interesting feature of GPT-3 is that it does not need fine-tuning to perform downstream tasks. GPT-3 uses a ‘text in, text out’ API that lets developers reprogram the model by supplying relevant instructions.
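The idea of reprogramming a model through instructions rather than fine-tuning can be sketched as plain prompt construction. The helper below is hypothetical and makes no API calls; the `Input:`/`Output:` layout is just one common convention, not a format required by any particular service.

```python
def build_few_shot_prompt(instruction, examples, query):
    """Assemble an instruction, worked examples, and a new query into one prompt."""
    lines = [instruction, ""]
    for source, target in examples:
        lines.append(f"Input: {source}")
        lines.append(f"Output: {target}")
        lines.append("")
    # The prompt ends with an open "Output:" for the model to complete.
    lines.append(f"Input: {query}")
    lines.append("Output:")
    return "\n".join(lines)


prompt = build_few_shot_prompt(
    "Translate English to French.",
    [("cheese", "fromage")],
    "bread",
)
```

The resulting string is the ‘text in’; whatever the model writes after the final `Output:` is the ‘text out’. Changing the instruction and examples changes the task without touching the model’s weights.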

Bidirectional Encoder Representations from Transformers

Bidirectional Encoder Representations from Transformers, or BERT, is another promising entry in this list of NLP models because of its unique features. BERT was created by Google as a technique for NLP pre-training. It is built on the transformer, a neural network architecture that leverages the self-attention mechanism to understand natural language.
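The self-attention mechanism mentioned above can be sketched in pure Python as scaled dot-product attention. This is a simplified illustration under stated assumptions: real transformers first multiply the inputs by learned query, key, and value projection matrices and use several attention heads, both of which are omitted here.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(u, v):
    """Dot product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))


def self_attention(queries, keys, values):
    """Scaled dot-product attention over lists of vectors."""
    d_k = len(keys[0])
    outputs = []
    for q in queries:
        # Score this position against every position, scaled by sqrt(d_k).
        scores = [dot(q, k) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)
        # The output is the attention-weighted average of the value vectors.
        outputs.append([
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ])
    return outputs
```

Each output vector mixes information from every position in the sequence, weighted by how strongly the positions match. Because the weights look at the whole input at once, an encoder built this way can use context from both directions.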

The transformer architecture underlying BERT was created to solve the problems associated with neural machine translation, or sequence transduction. Therefore, transformers work effectively for tasks that turn an input sequence into an output sequence, such as speech recognition or text-to-speech conversion. BERT itself targets language understanding rather than sequence generation.

You can find a reasonable answer to “Which NLP model gives the best accuracy?” by diving into the details of transformers. The transformer model uses two different mechanisms: an encoder and a decoder. The encoder reads the text input, while the decoder produces predictions for the task. It is important to note that BERT focuses on producing an effective language model and uses only the encoder mechanism.

BERT has also proved its effectiveness on 11 NLP tasks. Its training data includes 2,500 million words from Wikipedia and 800 million words from the BookCorpus dataset. One major showcase of BERT’s accuracy is Google Search, which uses it to interpret queries. Other Google applications, including Google Docs, also use BERT for accurate text prediction.

ALBERT

Pre-trained language models are one of the prominent highlights in natural language processing, and they improve performance on downstream tasks. However, increasing model size can create problems such as GPU/TPU memory limitations and longer training times. Therefore, Google introduced a lighter and more optimized version of BERT.

The new model, ALBERT, features two distinct techniques for parameter reduction: factorized embedding parameterization and cross-layer parameter sharing. Factorized embedding parameterization decouples the size of the hidden layers from the size of the vocabulary embedding.

Cross-layer parameter sharing, on the other hand, prevents the number of parameters from growing with the depth of the network. Together, these techniques reduce memory consumption and increase the model’s training speed. On top of that, ALBERT adds a self-supervised loss for sentence-order prediction, which targets inter-sentence coherence, a known weakness of BERT.
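A little arithmetic shows why the two parameter-reduction techniques help. The sketch below counts parameters only; the sizes used (a 30,000-word vocabulary, hidden size 768, embedding size 128, 12 layers, and 7 million parameters per layer) are illustrative assumptions, not figures taken from this article.

```python
def embedding_params(vocab_size, hidden_size, embedding_size=None):
    """Parameter count of the embedding block.

    Without factorization the table is vocab_size x hidden_size.
    With factorization it becomes a vocab_size x embedding_size table
    followed by an embedding_size x hidden_size projection.
    """
    if embedding_size is None:
        return vocab_size * hidden_size
    return vocab_size * embedding_size + embedding_size * hidden_size


def encoder_params(per_layer_params, num_layers, share_across_layers=False):
    """Parameter count of the layer stack, with or without cross-layer sharing."""
    if share_across_layers:
        return per_layer_params  # one set of weights reused at every depth
    return per_layer_params * num_layers


# Illustrative sizes (assumptions, see the lead-in).
full = embedding_params(30_000, 768)           # 23,040,000
factorized = embedding_params(30_000, 768, 128)  # 3,938,304
stacked = encoder_params(7_000_000, 12)        # 84,000,000
shared = encoder_params(7_000_000, 12, share_across_layers=True)  # 7,000,000
```

With these assumed sizes, factorization shrinks the embedding block by roughly a factor of six, and sharing keeps the layer stack at the size of a single layer regardless of depth.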

StructBERT

Attention toward BERT has been gaining momentum because of its effectiveness in natural language understanding, or NLU. It has achieved impressive accuracy on different NLP tasks, such as semantic textual similarity, question answering, and sentiment classification. While BERT is one of the best NLP models, it also has scope for improvement. Interestingly, BERT has been extended into StructBERT through the incorporation of language structures in the pre-training stage.

StructBERT relies on structural pre-training to deliver effective empirical results on different downstream tasks. For example, it can improve the score on the GLUE benchmark in comparison with other published models. It can also improve accuracy and performance on question-answering tasks. Just like many other pre-trained NLP models, StructBERT can support businesses with different NLP tasks, such as document summarization, question answering, and sentiment analysis.

Large Language Model Meta AI

Large Language Model Meta AI, the LLM from Meta (formerly Facebook), arrived in the NLP ecosystem in 2023. Also known as Llama, it serves as an advanced language model. As a matter of fact, it might soon become one of the most popular NLP models, with up to about 70 billion parameters. In the initial stages, only approved developers and researchers could access the Llama model. However, it has since become openly available, which allows a broader community to use and explore its capabilities.

One of the important details about Llama is its adaptability. It comes in different sizes, including smaller versions that need less computing power. With such flexibility, Llama offers better accessibility for practical use cases and testing. Llama also opens the door to new experiments.

The most interesting thing about Llama is that it reached the public unintentionally, without any planned event. The sudden arrival of Llama, with doors open for experimentation, led to the creation of new, related models such as Orca. New models based on Llama build on its distinct capabilities. For example, Orca makes use of the comprehensive linguistic capabilities of Llama.

Conclusion

This outline of top NLP models showcases some of the most promising entries on the market right now. However, the interesting thing about NLP is that you can find multiple models tailored to unique applications, each with different advantages. The growth in the use of NLP for business use cases and everyday activities has created curiosity about NLP models.

Candidates preparing for jobs in AI need to learn about new and existing NLP models and how they work. Natural language processing is an integral part of AI, and the continuously growing adoption of AI also offers better prospects for the popularity of NLP models. Learn more about NLP models and their components right now.
