Image two groups squaring off on a soccer subject. The gamers can cooperate to attain an goal, and compete in opposition to different gamers with conflicting pursuits. That’s how the sport works.

Creating synthetic intelligence brokers that may study to compete and cooperate as successfully as people stays a thorny downside. A key problem is enabling AI brokers to anticipate future behaviors of different brokers when they’re all studying concurrently.

Due to the complexity of this downside, present approaches are usually myopic; the brokers can solely guess the subsequent few strikes of their teammates or opponents, which ends up in poor efficiency in the long term.

Researchers from MIT, the MIT-IBM Watson AI Lab, and elsewhere have developed a brand new strategy that offers AI brokers a farsighted perspective. Their machine-learning framework permits cooperative or aggressive AI brokers to contemplate what different brokers will do as time approaches infinity, not simply over just a few subsequent steps. The brokers then adapt their behaviors accordingly to affect different brokers’ future behaviors and arrive at an optimum, long-term resolution.

This framework may very well be utilized by a gaggle of autonomous drones working collectively to discover a misplaced hiker in a thick forest, or by self-driving vehicles that try to maintain passengers protected by anticipating future strikes of different autos driving on a busy freeway.

“When AI brokers are cooperating or competing, what issues most is when their behaviors converge in some unspecified time in the future sooner or later. There are quite a lot of transient behaviors alongside the best way that don’t matter very a lot in the long term. Reaching this converged habits is what we actually care about, and we now have a mathematical solution to allow that,” says Dong-Ki Kim, a graduate scholar within the MIT Laboratory for Data and Resolution Techniques (LIDS) and lead creator of a paper describing this framework.

The senior creator is Jonathan P. How, the Richard C. Maclaurin Professor of Aeronautics and Astronautics and a member of the MIT-IBM Watson AI Lab. Co-authors embody others on the MIT-IBM Watson AI Lab, IBM Analysis, Mila-Quebec Synthetic Intelligence Institute, and Oxford College. The analysis shall be offered on the Convention on Neural Data Processing Techniques.

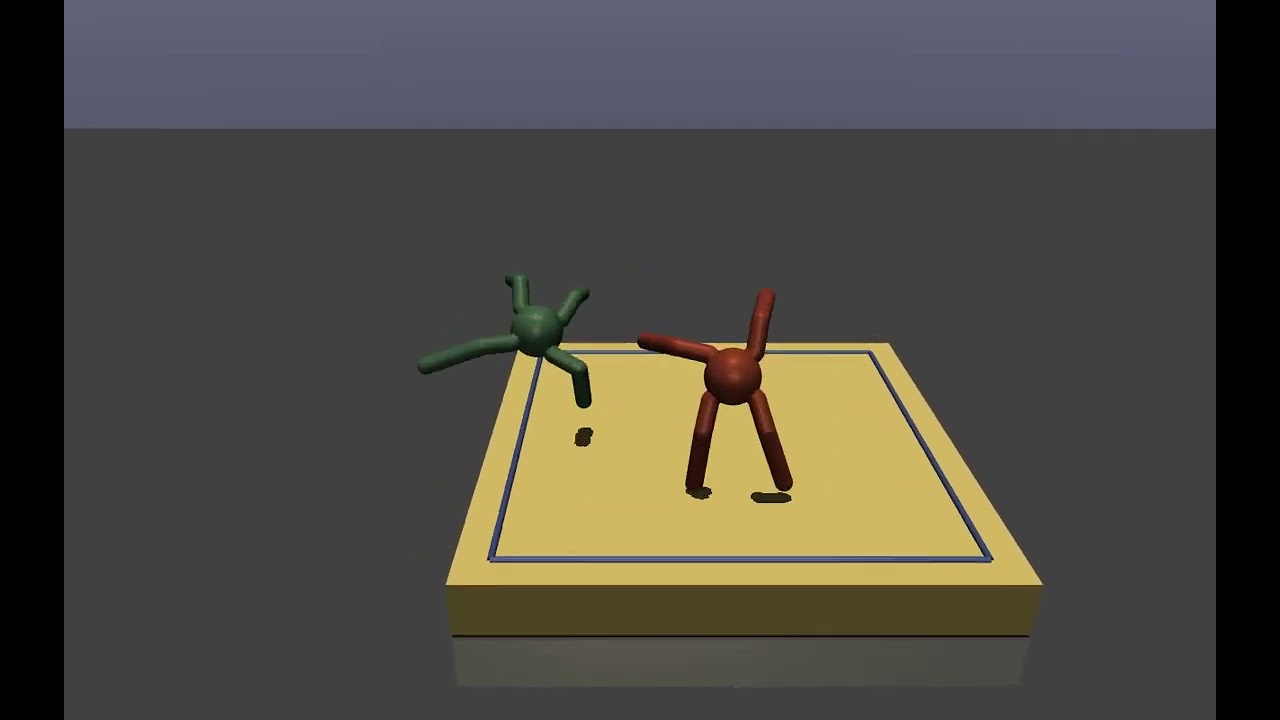

On this demo video, the crimson robotic, which has been skilled utilizing the researchers’ machine-learning system, is ready to defeat the inexperienced robotic by studying more practical behaviors that reap the benefits of the continually altering technique of its opponent.

Extra brokers, extra issues

The researchers targeted on an issue often called multiagent reinforcement studying. Reinforcement studying is a type of machine studying during which an AI agent learns by trial and error. Researchers give the agent a reward for “good” behaviors that assist it obtain a purpose. The agent adapts its habits to maximise that reward till it will definitely turns into an skilled at a job.

However when many cooperative or competing brokers are concurrently studying, issues develop into more and more advanced. As brokers take into account extra future steps of their fellow brokers, and the way their very own habits influences others, the issue quickly requires far an excessive amount of computational energy to resolve effectively. That is why different approaches solely deal with the quick time period.

“The AIs actually wish to take into consideration the tip of the sport, however they don’t know when the sport will finish. They want to consider methods to preserve adapting their habits into infinity to allow them to win at some far time sooner or later. Our paper primarily proposes a brand new goal that permits an AI to consider infinity,” says Kim.

However since it’s unattainable to plug infinity into an algorithm, the researchers designed their system so brokers deal with a future level the place their habits will converge with that of different brokers, often called equilibrium. An equilibrium level determines the long-term efficiency of brokers, and a number of equilibria can exist in a multiagent state of affairs. Subsequently, an efficient agent actively influences the longer term behaviors of different brokers in such a means that they attain a fascinating equilibrium from the agent’s perspective. If all brokers affect one another, they converge to a basic idea that the researchers name an “energetic equilibrium.”

The machine-learning framework they developed, often called FURTHER (which stands for FUlly Reinforcing acTive affect witH averagE Reward), permits brokers to learn to adapt their behaviors as they work together with different brokers to attain this energetic equilibrium.

FURTHER does this utilizing two machine-learning modules. The primary, an inference module, permits an agent to guess the longer term behaviors of different brokers and the educational algorithms they use, based mostly solely on their prior actions.

This info is fed into the reinforcement studying module, which the agent makes use of to adapt its habits and affect different brokers in a means that maximizes its reward.

“The problem was fascinated about infinity. We had to make use of quite a lot of totally different mathematical instruments to allow that, and make some assumptions to get it to work in follow,” Kim says.

Successful in the long term

They examined their strategy in opposition to different multiagent reinforcement studying frameworks in a number of totally different eventualities, together with a pair of robots preventing sumo-style and a battle pitting two 25-agent groups in opposition to each other. In each situations, the AI brokers utilizing FURTHER received the video games extra typically.

Since their strategy is decentralized, which implies the brokers study to win the video games independently, it’s also extra scalable than different strategies that require a central pc to manage the brokers, Kim explains.

The researchers used video games to check their strategy, however FURTHER may very well be used to deal with any type of multiagent downside. As an illustration, it may very well be utilized by economists in search of to develop sound coverage in conditions the place many interacting entitles have behaviors and pursuits that change over time.

Economics is one utility Kim is especially enthusiastic about finding out. He additionally needs to dig deeper into the idea of an energetic equilibrium and proceed enhancing the FURTHER framework.

This analysis is funded, partially, by the MIT-IBM Watson AI Lab.