In the new study, the Stanford team wanted to know whether neurons in the motor cortex also contained useful information about speech movements. That is, could they detect how "subject T12" was trying to move her mouth, tongue, and vocal cords as she attempted to speak?

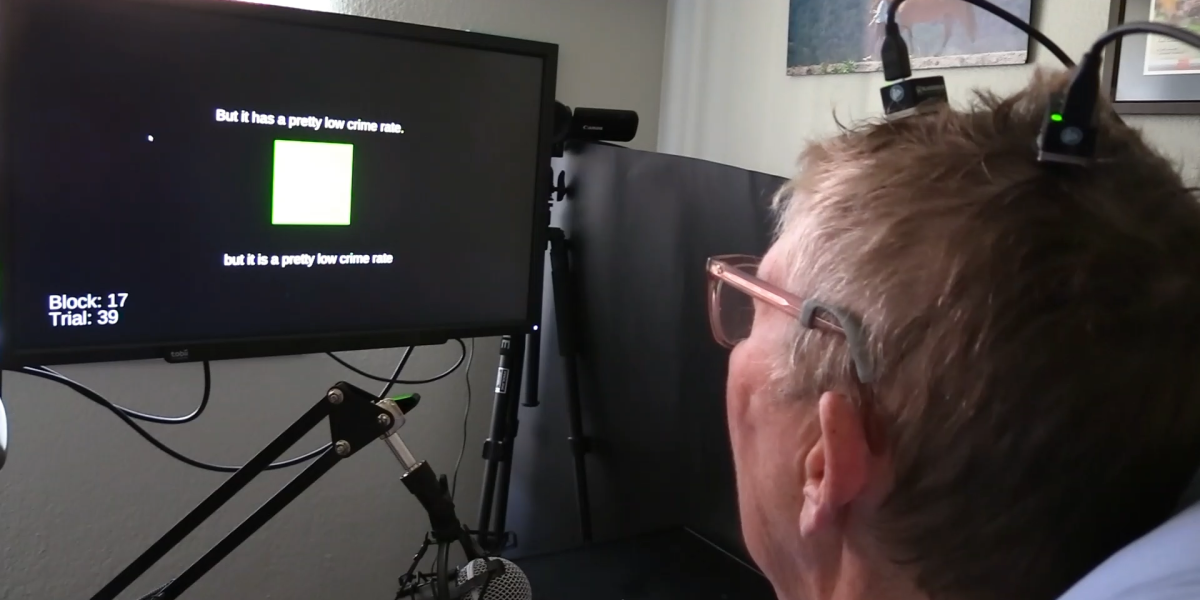

These are small, subtle movements, and according to Sabes, one major finding is that just a few neurons contained enough information to let a computer program predict, with good accuracy, what words the patient was trying to say. Shenoy's team relayed that information to a computer screen, where the patient's words appeared as the computer spoke them.

The new result builds on previous work by Edward Chang at the University of California, San Francisco, who has written that speech involves the most complex movements people make. We push out air, add vibrations that make it audible, and form it into words with our mouth, lips, and tongue. To make the sound "f," you put your top teeth on your lower lip and push air out, which is just one of dozens of mouth movements needed to speak.

A path forward

Chang previously used electrodes placed on top of the brain to let a volunteer speak through a computer, but in their preprint, the Stanford researchers say their system is more accurate and three to four times faster.

"Our results show a feasible path forward to restore communication to people with paralysis at conversational speeds," wrote the researchers, who included Shenoy and neurosurgeon Jaimie Henderson.

David Moses, who works with Chang's team at UCSF, says the current work reaches "impressive new performance benchmarks." Yet even as records continue to be broken, he says, "it will become increasingly important to demonstrate stable and reliable performance over multi-year time scales." Any commercial brain implant could have a hard time getting past regulators, especially if it degrades over time or if the accuracy of the recording falls off.

WILLETT, KUNZ ET AL

The path forward is likely to include both more sophisticated implants and closer integration with artificial intelligence.

The current system already uses a couple of types of machine learning programs. To improve its accuracy, the Stanford team employed software that predicts what word typically comes next in a sentence. "I" is more often followed by "am" than "ham," even though these words sound similar and could produce similar patterns in someone's brain.
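To make the "am" versus "ham" idea concrete, here is a minimal sketch of how a next-word predictor can break a near-tie from a neural decoder. It is not the Stanford team's method: it assumes a toy bigram language model with add-one smoothing, a made-up five-sentence training corpus, and invented decoder scores. The names `train_bigram`, `pick_word`, and `lm_weight` are hypothetical and used only for illustration.

```python
import math
from collections import Counter, defaultdict


def train_bigram(sentences):
    """Count adjacent word pairs and return a smoothed P(word | prev) function.

    Toy bigram model (an illustrative assumption, not the study's model).
    """
    pair_counts = defaultdict(Counter)
    vocab = set()
    for sentence in sentences:
        words = sentence.lower().split()
        vocab.update(words)
        for prev, word in zip(words, words[1:]):
            pair_counts[prev][word] += 1

    def prob(prev, word):
        # Add-one (Laplace) smoothing: unseen pairs still get a small probability.
        counts = pair_counts[prev]
        return (counts[word] + 1) / (sum(counts.values()) + len(vocab))

    return prob


def pick_word(prev, neural_scores, bigram_prob, lm_weight=1.0):
    """Pick the word with the best decoder log-likelihood plus weighted LM log-probability."""
    def combined(word):
        return neural_scores[word] + lm_weight * math.log(bigram_prob(prev, word))

    return max(neural_scores, key=combined)


# Hypothetical training text and decoder output, made up for this example.
corpus = [
    "i am hungry",
    "i am here",
    "i am tired",
    "i like ham",
    "we ate ham",
]
bigram_prob = train_bigram(corpus)

# The decoder alone slightly prefers "ham" (log-likelihoods, higher is better).
neural_scores = {"am": -1.2, "ham": -1.1}

print(pick_word("i", neural_scores, bigram_prob))                  # language model tips it to "am"
print(pick_word("i", neural_scores, bigram_prob, lm_weight=0.0))   # decoder alone picks "ham"
```

Adding log-probabilities is the same as multiplying the two probabilities, so a word wins only if it is both plausible from the brain signal and plausible in context. The `lm_weight` knob (an assumption here) controls how much the sentence context is allowed to override the decoder.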