The field of natural language processing (NLP) has been revolutionized by language models trained on large amounts of text data. Scaling up the size of language models often leads to improved performance and sample efficiency on a range of downstream NLP tasks. In many cases, the performance of a large language model can be predicted by extrapolating the performance trend of smaller models. For instance, the effect of scale on language model perplexity has been empirically shown to span more than seven orders of magnitude.

On the other hand, performance on certain other tasks does not improve in a predictable fashion. For example, the GPT-3 paper showed that the ability of language models to perform multi-digit addition has a flat scaling curve (approximately random performance) for models from 100M to 13B parameters, at which point performance jumps substantially. Given the growing use of language models in NLP research and applications, it is important to better understand abilities such as these that can arise unexpectedly.

In “Emergent Abilities of Large Language Models,” recently published in the Transactions on Machine Learning Research (TMLR), we discuss the phenomenon of emergent abilities, which we define as abilities that are not present in small models but are present in larger models. More specifically, we study emergence by analyzing the performance of language models as a function of language model scale, as measured by total floating point operations (FLOPs), that is, how much compute was used to train the language model. However, we also explore emergence as a function of other variables, such as dataset size or number of model parameters (see the paper for full details). Overall, we present dozens of examples of emergent abilities that result from scaling up language models. The existence of such emergent abilities raises the question of whether additional scaling could further expand the range of capabilities of language models.

Emergent Prompted Tasks

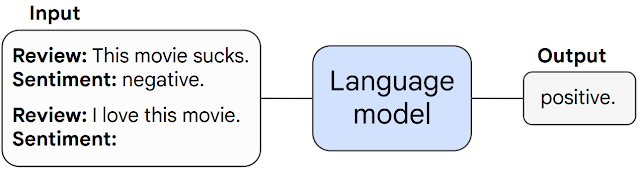

First we discuss emergent abilities that may arise in prompted tasks. In such tasks, a pre-trained language model is given a prompt for a task framed as next word prediction, and it performs the task by completing the response. Without any further fine-tuning, language models can often perform tasks that were not seen during training.
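As a rough illustration of how a task can be framed as next word prediction, the sketch below assembles a few-shot prompt from worked demonstrations and leaves the final answer blank for the model to complete. The function name `build_few_shot_prompt`, the `Q:`/`A:` layout, and the arithmetic demonstrations are assumptions made for this example; they are not taken from the paper.

```python
def build_few_shot_prompt(examples, query):
    """Join (question, answer) demonstrations and an unanswered query into one prompt.

    The "Q:"/"A:" layout is an illustrative assumption, not a format from the paper.
    """
    blocks = [f"Q: {question}\nA: {answer}" for question, answer in examples]
    # The final block ends at "A:" so that the model's continuation is its answer.
    blocks.append(f"Q: {query}\nA:")
    return "\n\n".join(blocks)


# Hypothetical demonstrations, made up for this sketch.
examples = [
    ("What is 12 + 7?", "19"),
    ("What is 30 + 45?", "75"),
]
prompt = build_few_shot_prompt(examples, "What is 58 + 21?")
print(prompt)
```

The resulting string would be passed unchanged to a pre-trained model. No weights are updated, so any ability to answer comes from pre-training alone.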

We call a prompted task emergent when its performance unpredictably surges from random to above-random at a specific scale threshold. Below we show three examples of prompted tasks with emergent performance: multi-step arithmetic, taking college-level exams, and identifying the intended meaning of a word. In each case, language models perform poorly, with very little dependence on model size, up to a threshold at which their performance suddenly begins to excel.

The ability to perform multi-step arithmetic (left), succeed on college-level exams (middle), and identify the intended meaning of a word in context (right) all emerge only for models of sufficiently large scale. The models shown include LaMDA, GPT-3, Gopher, Chinchilla, and PaLM.

Performance on these tasks only becomes non-random for models of sufficient scale: for instance, above 10²² training FLOPs for the arithmetic and multi-task NLU tasks, and above 10²⁴ training FLOPs for the word-in-context tasks. Note that although the scale at which emergence occurs can differ across tasks and models, no model showed smooth improvement in behavior on any of these tasks. Dozens of other emergent prompted tasks are listed in our paper.
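One way to make the notion of a scale threshold concrete is sketched below. Given (training FLOPs, accuracy) pairs and a random-guessing baseline, it returns the smallest scale from which accuracy stays above chance. The function name `emergence_threshold`, the `margin` parameter, and every data point are hypothetical choices for this example; they are not results or methodology from the paper.

```python
def emergence_threshold(points, random_baseline, margin=0.05):
    """Return the smallest FLOPs value from which accuracy stays above chance.

    points: iterable of (training_flops, accuracy) pairs.
    margin: how far above the baseline counts as "above random" (an assumption).
    Returns None if accuracy never rises above the baseline for good.
    """
    threshold = None
    for flops, accuracy in sorted(points):
        if accuracy > random_baseline + margin:
            if threshold is None:
                threshold = flops  # first point of the current above-chance run
        else:
            threshold = None  # fell back to chance, so discard the earlier run
    return threshold


# Hypothetical scaling curve for a four-way multiple-choice task (chance = 0.25).
points = [(1e20, 0.25), (1e21, 0.26), (1e22, 0.24), (1e23, 0.41), (1e24, 0.63)]
threshold = emergence_threshold(points, random_baseline=0.25)
print(threshold)
```

A fixed margin is a crude test. With few evaluated model sizes the threshold is only known to lie between two adjacent points, so the returned value is best read as an upper bound on where the jump begins.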

Emergent Prompting Strategies

The second class of emergent abilities encompasses prompting strategies that augment the capabilities of language models. Prompting strategies are broad paradigms for prompting that can be applied to a range of different tasks. They are considered emergent when they fail for small models and can only be used by a sufficiently large model.

One example of an emergent prompting strategy is called “chain-of-thought prompting”, in which the model is prompted to generate a series of intermediate steps before giving the final answer. Chain-of-thought prompting enables language models to perform tasks requiring complex reasoning, such as a multi-step math word problem. Notably, models acquire the ability to do chain-of-thought reasoning without being explicitly trained to do so. An example of chain-of-thought prompting is shown in the figure below.

Chain-of-thought prompting enables sufficiently large models to solve multi-step reasoning problems.
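The difference between standard and chain-of-thought prompting can be sketched in a few lines. In the example below, the only change is whether each demonstration includes its intermediate steps before the final answer. The helper names, the `Q:`/`A:` layout, and the muffin word problem are assumptions invented for illustration; they are not taken from the paper or its figure.

```python
def format_demo(question, answer, rationale=None):
    """Format one demonstration, optionally with intermediate reasoning steps."""
    if rationale is None:
        return f"Q: {question}\nA: The answer is {answer}."
    return f"Q: {question}\nA: {rationale} The answer is {answer}."


def build_prompt(demos, query, chain_of_thought):
    """Build a prompt from (question, rationale, answer) demos and a new query."""
    blocks = [
        format_demo(question, answer, rationale if chain_of_thought else None)
        for question, rationale, answer in demos
    ]
    blocks.append(f"Q: {query}\nA:")  # left open for the model to complete
    return "\n\n".join(blocks)


# Hypothetical demonstration written for this sketch.
demos = [(
    "A baker has 3 trays with 4 muffins each and bakes 5 more. How many muffins are there?",
    "3 trays of 4 muffins is 3 * 4 = 12 muffins. Adding 5 more gives 12 + 5 = 17.",
    "17",
)]
query = "A shelf holds 6 boxes of 8 pens, and 10 pens are removed. How many pens remain?"

standard_prompt = build_prompt(demos, query, chain_of_thought=False)
cot_prompt = build_prompt(demos, query, chain_of_thought=True)
print(cot_prompt)
```

Both prompts end with the same unanswered question. With the chain-of-thought version, a sufficiently large model would be expected to imitate the demonstration and write out its own intermediate steps before the final answer.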

The empirical results of chain-of-thought prompting are shown below. For smaller models, applying chain-of-thought prompting does not outperform standard prompting, for example, when applied to GSM8K, a challenging benchmark of math word problems. However, for large models (10²⁴ FLOPs), chain-of-thought prompting substantially improves performance in our tests, reaching a 57% solve rate on GSM8K.

Chain-of-thought prompting is an emergent ability: it fails to improve performance for small language models, but substantially improves performance for large models. Here we illustrate the difference between standard and chain-of-thought prompting at different scales for two language models, LaMDA and PaLM.

Implications of Emergent Abilities

The existence of emergent abilities has a range of implications. For example, because emergent few-shot prompted abilities and strategies are not explicitly encoded in pre-training, researchers may not know the full scope of few-shot prompted abilities of current language models. Moreover, the emergence of new abilities as a function of model scale raises the question of whether further scaling will endow even larger models with new emergent abilities.

Identifying emergent abilities in large language models is a first step in understanding such phenomena and their potential impact on future model capabilities. Why does scaling unlock emergent abilities? Because computational resources are expensive, can emergent abilities be unlocked via other methods without increased scaling (e.g., better model architectures or training techniques)? Will new real-world applications of language models become unlocked when certain abilities emerge? Analyzing and understanding the behaviors of language models, including emergent behaviors that arise from scaling, is an important research question as the field of NLP continues to grow.

Acknowledgements

It was an honor and privilege to work with Rishi Bommasani, Colin Raffel, Barret Zoph, Sebastian Borgeaud, Dani Yogatama, Maarten Bosma, Denny Zhou, Donald Metzler, Ed H. Chi, Tatsunori Hashimoto, Oriol Vinyals, Percy Liang, Jeff Dean, and William Fedus.