OpenAI has been instrumental in developing revolutionary tools such as OpenAI Gym, designed for training reinforcement learning algorithms, and the GPT-n models. The spotlight is also on DALL-E, an AI model that crafts images from textual inputs. One such model that has garnered considerable attention is OpenAI’s ChatGPT, a shining exemplar in the realm of Large Language Models.

GPT-4: Prompt Engineering

ChatGPT has transformed the chatbot landscape, offering human-like responses to user inputs and expanding its applications across domains, from software development and testing to business communication, and even the creation of poetry.

In the hands of businesses and individuals, GPT-4, for instance, could serve as an inexhaustible knowledge reservoir, proficient in subjects ranging from Mathematics and Biology to Legal Studies. Such sophisticated and accessible AI models are poised to redefine the future of work, learning, and creativity.

Generative models like GPT-4 can produce new data based on existing inputs. This distinguishing attribute lets generative models undertake diverse tasks, including generating text, images, music, and video.

In the context of ChatGPT and OpenAI models, a prompt is the input that users provide to the models, usually in the form of text. Prompts serve as the primary means of controlling the output generated by these models, making them crucial for successful and relevant conversations.

The Impact of Prompt Quality

Using well-defined prompts is the key to engaging in useful and meaningful conversations with AI systems. Poorly defined prompts can result in output that is not helpful to the user and may even lead to misleading content.

To demonstrate the impact of prompt quality, let’s compare two different prompts given to ChatGPT. The first prompt, poorly defined and assuming prior knowledge, generates a potentially unhelpful response for the user. The second prompt, on the other hand, provides more context and asks for an example, ensuring a more relevant and understandable response.

Prompt 1: “Tell me about Convolutional Neural Networks.”

Response 1: “Convolutional Neural Networks (CNNs) are multi-layer perceptron networks that consist of fully connected layers and pooling layers. They are commonly used in image recognition tasks. The process involves applying filters to input images and downscaling them using pooling layers.”

Prompt 2: “I want to learn about Convolutional Neural Networks, but I have no prior knowledge of Artificial Neural Networks. Can you please provide a beginner-friendly explanation and an example of how CNNs are used in image recognition?”

Response 2: “Convolutional Neural Networks (CNNs) are a type of neural network primarily used for image recognition tasks. Unlike other neural networks, they leverage convolutional layers and pooling layers to process images. Imagine you have an image of a cat. CNNs can identify different features in the image, such as whiskers, ears, and tail, by applying filters and downsampling the image using pooling layers. This process makes CNNs highly effective for recognizing objects in images.”

By comparing the two responses, it is evident that a well-defined prompt leads to a more relevant and user-friendly response. Prompt design and engineering are growing disciplines that aim to optimize the output quality of AI models like ChatGPT.

In the following sections of this article, we will delve into advanced methodologies aimed at refining Large Language Models (LLMs), such as prompt engineering techniques and tactics. These include few-shot learning, ReAct, chain-of-thought, RAG, and more.

Advanced Engineering Techniques

Before we proceed, it’s important to understand a key issue with LLMs, referred to as ‘hallucination’. In the context of LLMs, ‘hallucination’ signifies the tendency of these models to generate outputs that might seem reasonable but are not rooted in factual reality or the given input context.

This problem was starkly highlighted in a recent court case in which a lawyer used ChatGPT for legal research. The AI tool, faltering due to its hallucination problem, cited non-existent legal cases. This misstep had significant repercussions, causing confusion and undermining credibility during the proceedings. The incident serves as a stark reminder of the urgent need to address the issue of ‘hallucination’ in AI systems.

Our exploration of prompt engineering techniques aims to improve these aspects of LLMs. By enhancing their efficiency and safety, we pave the way for innovative applications such as information extraction. Furthermore, it opens doors to seamlessly integrating LLMs with external tools and data sources, broadening the range of their potential uses.

Zero and Few-Shot Learning: Optimizing with Examples

Generative Pre-trained Transformer 3 (GPT-3) marked an important turning point in the development of Generative AI models, as it brought the concept of ‘few-shot learning’ to prominence. This method was a game-changer due to its capability of working effectively without the need for comprehensive fine-tuning. The GPT-3 framework is discussed in the paper “Language Models are Few-Shot Learners”, where the authors demonstrate how the model excels across diverse use cases without necessitating custom datasets or code.

Unlike fine-tuning, which demands continuous effort to solve varying use cases, few-shot models demonstrate easier adaptability to a broader array of applications. While fine-tuning might provide robust solutions in some cases, it can be expensive at scale, making the use of few-shot models a more practical approach, especially when integrated with prompt engineering.

Imagine you’re trying to translate English to French. In few-shot learning, you would provide GPT-3 with a few translation examples like “sea otter -> loutre de mer”. GPT-3, being the advanced model it is, is then able to continue providing accurate translations. In zero-shot learning, you wouldn’t provide any examples, and GPT-3 would still be able to translate English to French effectively.

The term ‘few-shot learning’ comes from the idea that the model is given a limited number of examples to ‘learn’ from. It’s important to note that ‘learn’ in this context doesn’t involve updating the model’s parameters or weights; rather, the examples in the prompt influence the model’s output at inference time.

Zero-shot learning takes this concept a step further. In zero-shot learning, no examples of task completion are provided to the model. The model is expected to perform well based on its initial training, making this method ideal for open-domain question-answering scenarios such as ChatGPT.

In many instances, a model proficient in zero-shot learning can perform well when provided with few-shot or even single-shot examples. This ability to switch between zero, single, and few-shot learning scenarios underlines the adaptability of large models, enhancing their potential applications across different domains.

Zero-shot learning methods are becoming increasingly prevalent. These methods are characterized by their capability to handle tasks or categories unseen during training. Here is a practical example of a Few-Shot Prompt:

"Translate the following English phrases to French:

'sea otter' translates to 'loutre de mer'
'sky' translates to 'ciel'
What does 'cloud' translate to in French?"

By providing the model with a few examples and then posing a question, we can effectively guide the model to generate the desired output. In this instance, GPT-3 would likely correctly translate ‘cloud’ to ‘nuage’ in French.
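A prompt like this can also be assembled programmatically. The following is a minimal sketch, not tied to any particular API: the function name `build_few_shot_prompt` and its parameters are assumptions made for illustration, and the resulting string would still need to be sent to a model of your choice.

```python
def build_few_shot_prompt(instruction, examples, query):
    """Assemble a few-shot prompt: instruction, worked examples, then the open question.

    `examples` is a list of (english, french) pairs. An empty list yields a
    zero-shot prompt, since no demonstrations are included.
    """
    lines = [instruction, ""]
    for source, target in examples:
        lines.append(f"'{source}' translates to '{target}'")
    lines.append(f"What does '{query}' translate to in French?")
    return "\n".join(lines)


# Hypothetical usage mirroring the translation prompt in the text.
prompt = build_few_shot_prompt(
    "Translate the following English phrases to French:",
    [("sea otter", "loutre de mer"), ("sky", "ciel")],
    "cloud",
)
print(prompt)
```

Keeping the examples as data rather than hard-coded text makes it easy to vary the number of shots and compare zero-shot, single-shot, and few-shot behavior on the same question.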

We will delve deeper into the various nuances of prompt engineering and its essential role in optimizing model performance during inference. We’ll also look at how it can be used to create cost-effective and scalable solutions across a broad array of use cases.

As we further explore the complexity of prompt engineering techniques in GPT models, it’s important to highlight our last post, ‘Essential Guide to Prompt Engineering in ChatGPT’. This guide provides insights into the strategies for instructing AI models effectively across a myriad of use cases.

In our previous discussions, we delved into fundamental prompt methods for large language models (LLMs) such as zero-shot and few-shot learning, as well as instruction prompting. Mastering these techniques is crucial for navigating the more complex challenges of prompt engineering that we’ll explore here.

Few-shot learning can be limited by the restricted context window of most LLMs. Moreover, without the appropriate safeguards, LLMs can be misled into delivering potentially harmful output. Plus, many models struggle with reasoning tasks or following multi-step instructions.

Given these constraints, the challenge lies in leveraging LLMs to tackle complex tasks. An obvious solution might be to develop more advanced LLMs or refine existing ones, but that could entail substantial effort. So, the question arises: how can we optimize current models for improved problem-solving?

Equally fascinating is the exploration of how this technique interfaces with creative applications in Unite AI’s ‘Mastering AI Art: A Concise Guide to Midjourney and Prompt Engineering’, which describes how the fusion of art and AI can result in awe-inspiring art.

Chain-of-thought Prompting

Chain-of-thought prompting leverages the inherent auto-regressive properties of large language models (LLMs), which excel at predicting the next word in a given sequence. Prompting a model to elucidate its thought process induces a more thorough, methodical generation of ideas, which tends to align closely with accurate information. This alignment stems from the model’s inclination to process and deliver information in a thoughtful and ordered manner, akin to a human expert walking a listener through a complex concept. A simple statement like “walk me through step by step how to…” is often enough to trigger this more verbose, detailed output.

Zero-shot Chain-of-thought Prompting

While conventional CoT prompting relies on worked demonstrations included in the prompt, an emerging area is zero-shot CoT prompting. This approach, introduced by Kojima et al. (2022), simply appends the phrase “Let’s think step by step” to the original prompt.
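As a minimal sketch of the idea, the helper below appends the trigger phrase to a question. The function name `to_zero_shot_cot`, the `Q:`/`A:` framing, and the sample question are assumptions made for illustration; the only element taken from the text is the trigger phrase itself.

```python
COT_TRIGGER = "Let's think step by step."


def to_zero_shot_cot(question: str) -> str:
    """Turn a plain question into a zero-shot chain-of-thought prompt.

    No demonstrations are added; the trigger phrase alone nudges the model
    to write out intermediate reasoning before its final answer.
    """
    return f"Q: {question.strip()}\nA: {COT_TRIGGER}"


# Hypothetical question used only to show the resulting prompt shape.
print(to_zero_shot_cot("A shop sells pens in packs of 12. How many pens are in 7 packs?"))
```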

Let’s create an advanced prompt where ChatGPT is tasked with summarizing key takeaways from AI and NLP research papers.

In this demonstration, we will use the model’s ability to understand and summarize complex information from academic texts. Using the few-shot learning approach, let’s teach ChatGPT to summarize key findings from AI and NLP research papers:

1. Paper Title: "Attention Is All You Need"
Key Takeaway: Introduced the transformer model, emphasizing the importance of attention mechanisms over recurrent layers for sequence transduction tasks.

2. Paper Title: "BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding"
Key Takeaway: Introduced BERT, showcasing the efficacy of pre-training deep bidirectional models, thereby achieving state-of-the-art results on various NLP tasks.

Now, with the context of these examples, summarize the key findings from the following paper:

Paper Title: "Prompt Engineering in Large Language Models: An Examination"

This prompt not only maintains a clear chain of thought but also uses a few-shot learning approach to guide the model. It stays within the AI and NLP domains, tasking ChatGPT with a complex operation related to prompt engineering: summarizing research papers.

ReAct Prompt

ReAct, or “Reason and Act”, was introduced by researchers from Princeton and Google in the paper “ReAct: Synergizing Reasoning and Acting in Language Models”. It changed how language models interact with a task by prompting the model to generate both verbal reasoning traces and task-specific actions in an interleaved way.

Consider a human chef in the kitchen: they not only perform a series of actions (chopping vegetables, boiling water, stirring ingredients) but also engage in verbal reasoning or inner speech (“now that the vegetables are chopped, I should put the pot on the stove”). This ongoing mental dialogue helps in strategizing the process, adapting to sudden changes (“I’m out of olive oil, I’ll use butter instead”), and remembering the sequence of tasks. ReAct mimics this human ability, enabling the model to quickly learn new tasks and make robust decisions, just as a human would under new or uncertain circumstances.

ReAct can tackle hallucination, a common issue with Chain-of-Thought (CoT) systems. CoT, although an effective technique, lacks the capacity to interact with the external world, which can lead to fact hallucination and error propagation. ReAct compensates for this by interfacing with external sources of information. This interaction allows the system not only to validate its reasoning but also to update its knowledge based on the latest information from the external world.

The fundamental working of ReAct can be explained through an example from HotpotQA, a task requiring high-order reasoning. On receiving a question, the ReAct model breaks the question down into manageable parts and creates a plan of action. The model generates a reasoning trace (thought) and identifies a relevant action. It may decide to look up information about the Apple Remote on an external source, like Wikipedia (action), and then updates its understanding based on the obtained information (observation). Through multiple thought-action-observation steps, ReAct can retrieve information to support its reasoning while refining what it needs to retrieve next.

Note:

HotpotQA is a dataset, derived from Wikipedia, composed of 113k question-answer pairs designed to train AI systems in complex reasoning, as questions require reasoning over multiple documents to answer. CommonsenseQA 2.0, on the other hand, was constructed through gamification, includes 14,343 yes/no questions, and is designed to challenge AI’s understanding of common sense, as the questions are intentionally crafted to mislead AI models.

The process might look something like this:

- Thought: “I need to search for the Apple Remote and its compatible devices.”

- Action: Searches “Apple Remote compatible devices” on an external source.

- Observation: Obtains a list of devices compatible with the Apple Remote from the search results.

- Thought: “Based on the search results, several devices, apart from the Apple Remote, can control the program it was originally designed to interact with.”

The result is a dynamic, reasoning-based process that can evolve based on the information it interacts with, leading to more accurate and reliable responses.
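The thought-action-observation cycle described above can be sketched as a small control loop. This is an illustrative skeleton under stated assumptions, not the paper’s implementation: `scripted_model` stands in for a real LLM call, `search` stands in for an external source such as Wikipedia, and the knowledge-base text is a placeholder rather than real retrieved content.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical stand-in for an external source such as Wikipedia.
KNOWLEDGE_BASE: Dict[str, str] = {
    "Apple Remote": "placeholder passage about the Apple Remote",
}


def search(query: str) -> str:
    """Toy tool: look the query up in the in-memory knowledge base."""
    return KNOWLEDGE_BASE.get(query, "no result found")


def scripted_model(transcript: List[str]) -> Tuple[str, str, str]:
    """Stub for an LLM call; returns (thought, action name, action input)."""
    if not any(line.startswith("Observation:") for line in transcript):
        return ("I need to search for the Apple Remote.", "search", "Apple Remote")
    return ("I have enough information to answer.", "finish", "answer based on the observation")


def react_loop(
    question: str,
    model: Callable[[List[str]], Tuple[str, str, str]],
    tools: Dict[str, Callable[[str], str]],
    max_steps: int = 5,
) -> List[str]:
    """Alternate thoughts, actions, and observations until the model finishes."""
    transcript = [f"Question: {question}"]
    for _ in range(max_steps):
        thought, action, action_input = model(transcript)
        transcript.append(f"Thought: {thought}")
        transcript.append(f"Action: {action}[{action_input}]")
        if action == "finish":
            break
        # Feed the tool result back so the next thought can build on it.
        transcript.append(f"Observation: {tools[action](action_input)}")
    return transcript


trace = react_loop("Which devices work with the Apple Remote?", scripted_model, {"search": search})
print("\n".join(trace))
```

The `max_steps` cap is a design choice in this sketch: it keeps a model that never emits a finishing action from looping indefinitely.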

Comparative visualization of four prompting methods (Standard, Chain-of-Thought, Act-Only, and ReAct) in solving HotpotQA and AlfWorld (https://arxiv.org/pdf/2210.03629.pdf)

Designing ReAct agents is a specialized task, given their ability to achieve intricate objectives. For instance, a conversational agent, built on the base ReAct model, incorporates conversational memory to provide richer interactions. However, the complexity of this task is reduced by tools such as LangChain, which has become a popular choice for designing these agents.

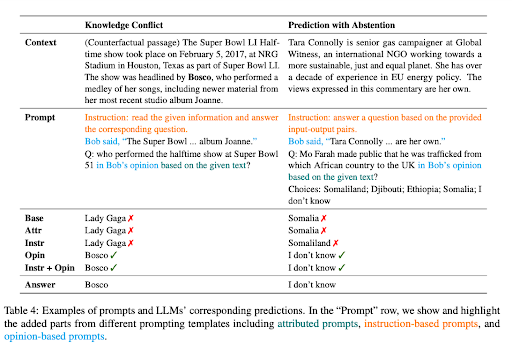

Context-faithful Prompting

The paper ‘Context-faithful Prompting for Large Language Models’ underscores that while LLMs have shown substantial success in knowledge-driven NLP tasks, their excessive reliance on parametric knowledge can lead them astray in context-sensitive tasks. For example, when a language model is trained on outdated facts, it can produce incorrect answers if it overlooks contextual clues.

This problem is evident in instances of knowledge conflict, where the context contains facts differing from the LLM’s pre-existing knowledge. Consider an instance where a Large Language Model (LLM), trained on data from before the 2022 World Cup, is given a context indicating that Argentina won the tournament. However, the LLM, relying on its pretrained knowledge, continues to assert that the previous winner, i.e., France, the team that won the 2018 World Cup, is still the reigning champion. This demonstrates a classic case of ‘knowledge conflict’.

In essence, knowledge conflict in an LLM arises when new information provided in the context contradicts the pre-existing knowledge the model has been trained on. The model’s tendency to lean on its prior training rather than the newly provided context can result in incorrect outputs. Hallucination in LLMs, on the other hand, is the generation of responses that may seem plausible but are not rooted in the model’s training data or the provided context.

Another issue arises when the provided context doesn’t contain enough information to answer a question accurately, a situation known as prediction with abstention. For instance, if an LLM is asked about the founder of Microsoft based on a context that does not provide this information, it should ideally abstain from guessing.

To improve the contextual faithfulness of LLMs in these scenarios, the researchers proposed a range of prompting strategies. These strategies aim to make the LLMs’ responses more attuned to the context rather than relying on their encoded knowledge.

One such strategy is to frame prompts as opinion-based questions, where the context is interpreted as a narrator’s statement, and the question pertains to this narrator’s opinion. This approach refocuses the LLM’s attention on the presented context rather than on its pre-existing knowledge.

Adding counterfactual demonstrations to prompts has also been identified as an effective way to increase faithfulness in cases of knowledge conflict. These demonstrations present scenarios with false facts, which guide the model to pay closer attention to the context to provide accurate responses.
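Both strategies can be sketched as simple prompt templates. The code below is an illustration under stated assumptions rather than the exact templates from the paper: the narrator name “Bob”, the `Q:`/`A:` layout, and both function names are hypothetical, and the demonstration deliberately contains a false statement, as a counterfactual demonstration is meant to.

```python
def opinion_based_prompt(context, question, narrator="Bob"):
    """Reframe a context and question as a question about a narrator's opinion."""
    question = question.rstrip("?")
    return f'{narrator} said, "{context}"\nQ: {question} in {narrator}\'s opinion?\nA:'


def with_demonstrations(demos, final_prompt):
    """Prepend solved demonstrations, each a (context, question, answer) triple."""
    blocks = [opinion_based_prompt(c, q) + " " + a for c, q, a in demos]
    blocks.append(final_prompt)
    return "\n\n".join(blocks)


# Counterfactual demonstration: the context is intentionally false, and the
# expected answer follows the context rather than real-world knowledge.
demos = [("The Eiffel Tower is located in Rome.", "Where is the Eiffel Tower located?", "Rome")]

target = opinion_based_prompt(
    "Argentina won the 2022 World Cup.",
    "Who is the reigning World Cup champion?",
)
print(with_demonstrations(demos, target))
```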

Instruction fine-tuning

Instruction fine-tuning is a supervised learning phase that capitalizes on providing the model with specific instructions, for instance, “Explain the distinction between a sunrise and a sunset.” The instruction is paired with an appropriate answer, something along the lines of, “A sunrise refers to the moment the sun appears over the horizon in the morning, while a sunset marks the point when the sun disappears below the horizon in the evening.” Through this method, the model essentially learns how to adhere to and execute instructions.

This approach significantly influences the process of prompting LLMs, leading to a radical shift in the prompting style. An instruction fine-tuned LLM permits immediate execution of zero-shot tasks, providing seamless task performance. If the LLM is yet to be fine-tuned, a few-shot learning approach may be required, incorporating some examples into your prompt to guide the model toward the desired response.
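To make the data side of this concrete, here is a minimal sketch of how instruction-response pairs might be stored and rendered into training text. The field names, the `### Instruction:` / `### Response:` template, and the function name `format_example` are assumptions chosen for illustration; real projects define their own formats.

```python
import json


def format_example(instruction, response):
    """Render one instruction-response pair as a single training string."""
    return f"### Instruction:\n{instruction}\n\n### Response:\n{response}"


# One record, using the sunrise/sunset pair from the text.
records = [
    {
        "instruction": "Explain the distinction between a sunrise and a sunset.",
        "response": (
            "A sunrise refers to the moment the sun appears over the horizon in the "
            "morning, while a sunset marks the point when the sun disappears below "
            "the horizon in the evening."
        ),
    }
]

# Serialize to JSON Lines (one record per line), then read it back and render.
jsonl = "\n".join(json.dumps(record) for record in records)
training_texts = [format_example(**json.loads(line)) for line in jsonl.splitlines()]
print(training_texts[0])
```

At inference time, an instruction-tuned model would then be given only the instruction part of such a template and expected to produce the response.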

The paper ‘Instruction Tuning with GPT-4’ discusses the attempt to use GPT-4 to generate instruction-following data for fine-tuning LLMs. The authors used a rich dataset comprising 52,000 unique instruction-following entries in both English and Chinese.

The dataset plays a pivotal role in instruction tuning LLaMA models, an open-source series of LLMs, resulting in enhanced zero-shot performance on new tasks. Noteworthy projects such as Stanford Alpaca have effectively employed Self-Instruct tuning, an efficient method of aligning LLMs with human intent, leveraging data generated by advanced instruction-tuned teacher models.

The primary aim of instruction tuning research is to boost the zero and few-shot generalization abilities of LLMs. Further data and model scaling can provide valuable insights. With the current GPT-4 data size at 52K and the base LLaMA model size at 7 billion parameters, there is enormous potential to collect more GPT-4 instruction-following data and combine it with other data sources, leading to the training of larger LLaMA models for superior performance.

STaR: Bootstrapping Reasoning With Reasoning

The potential of LLMs is particularly visible in complex reasoning tasks such as mathematics or commonsense question-answering. However, the process of inducing a language model to generate rationales (a series of step-by-step justifications, or “chain-of-thought”) has its set of challenges. It often requires the construction of large rationale datasets or a sacrifice in accuracy due to the reliance on only few-shot inference.

“Self-Taught Reasoner” (STaR) offers an innovative solution to these challenges. It uses a simple loop to continuously improve a model’s reasoning capability. This iterative process starts with generating rationales to answer many questions using a few rationale examples. If a generated answer is incorrect, the model tries again to generate a rationale, this time given the correct answer. The model is then fine-tuned on all the rationales that resulted in correct answers, and the process repeats.

STaR methodology, demonstrating its fine-tuning loop and a sample rationale generation on the CommonsenseQA dataset (https://arxiv.org/pdf/2203.14465.pdf)

To illustrate this with a practical example, consider the question “What can be used to carry a small dog?” with answer choices ranging from a swimming pool to a basket. The STaR model generates a rationale, identifying that the answer must be something capable of carrying a small dog and landing on the conclusion that a basket, designed to hold things, is the correct answer.

STaR’s approach is unique in that it leverages the language model’s pre-existing reasoning ability. It employs a process of self-generation and refinement of rationales, iteratively bootstrapping the model’s reasoning capabilities. However, STaR’s loop has its limitations. The model may fail to solve new problems in the training set because it receives no direct training signal for problems it fails to solve. To address this issue, STaR introduces rationalization. For each problem the model fails to answer correctly, it generates a new rationale by providing the model with the correct answer, which allows the model to reason backward.
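The loop, including the rationalization step, can be sketched as follows. This is a toy illustration under stated assumptions, not the authors’ code: `generate` and `fine_tune` are hypothetical callables standing in for model inference and training, and the toy generator below merely memorizes answers so that the control flow can be run end to end.

```python
def collect_rationales(dataset, generate):
    """Keep only the rationales that lead to the correct answer."""
    kept = []
    for question, gold in dataset:
        rationale, answer = generate(question, None)
        if answer != gold:
            # Rationalization: retry with the correct answer supplied as a hint.
            rationale, answer = generate(question, gold)
        if answer == gold:
            kept.append((question, rationale, answer))
    return kept


def star(dataset, generate, fine_tune, iterations=2):
    """Repeat: generate rationales, filter by correctness, fine-tune on the kept ones."""
    for _ in range(iterations):
        kept = collect_rationales(dataset, generate)
        generate = fine_tune(kept)
    return generate


# Toy stand-ins so the sketch runs; a real system would call an LLM here.
def make_toy_generator(known):
    def generate(question, hint):
        answer = hint if hint is not None else known.get(question, "unknown")
        return (f"toy rationale leading to {answer}", answer)
    return generate


def toy_fine_tune(kept):
    # "Training" here just memorizes the answers that had correct rationales.
    return make_toy_generator({question: answer for question, _, answer in kept})


dataset = [("What can be used to carry a small dog?", "basket")]
model = star(dataset, make_toy_generator({}), toy_fine_tune)
print(model("What can be used to carry a small dog?", None))
```

In this toy run the initial generator fails on the question, the hinted retry succeeds, and the generator returned after fine-tuning answers correctly without a hint.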

STaR, therefore, stands as a scalable bootstrapping method that allows models to learn to generate their own rationales while also learning to solve increasingly difficult problems. The application of STaR has shown promising results in tasks involving arithmetic, math word problems, and commonsense reasoning. On CommonsenseQA, STaR improved over both a few-shot baseline and a baseline fine-tuned to directly predict answers, and performed comparably to a model that is 30× larger.

Tagged Context Prompts

The concept of ‘Tagged Context Prompts’ revolves around providing the AI model with an additional layer of context by tagging certain information within the input. These tags essentially act as signposts for the AI, guiding it on how to interpret the context accurately and generate a response that is both relevant and factual.

Imagine you are having a conversation with a friend about a certain topic, for example ‘chess’. You make a statement and then tag it with a reference, such as ‘(source: Wikipedia)’. Now, your friend, who in this case is the AI model, knows exactly where your information is coming from. This approach aims to make the AI’s responses more reliable by reducing the risk of hallucinations, or the generation of false facts.

A unique aspect of tagged context prompts is their potential to improve the ‘contextual intelligence’ of AI models. For instance, the paper demonstrates this using a diverse set of questions extracted from multiple sources, like summarized Wikipedia articles on various subjects and sections from a recently published book. The questions are tagged, providing the AI model with more context about the source of the information.

This extra layer of context can prove highly useful when it comes to generating responses that are not only accurate but also adhere to the context provided, making the AI’s output more reliable and trustworthy.
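A tagged prompt of this kind can be sketched in a few lines. The tag format below simply follows the ‘(source: Wikipedia)’ example from the text; the function name `build_tagged_prompt`, the closing instruction wording, and the sample passage are assumptions made for illustration and are not taken from the paper.

```python
def build_tagged_prompt(passages, question):
    """Attach a source tag to each context passage, then ask the question.

    `passages` is a list of (text, source) pairs.
    """
    tagged = [f"{text} (source: {source})" for text, source in passages]
    return (
        "Context:\n"
        + "\n".join(tagged)
        + f"\n\nQuestion: {question}\n"
        + "Answer using only the tagged context and cite the source you relied on."
    )


# Hypothetical usage with a single tagged statement about chess.
prompt = build_tagged_prompt(
    [("Chess is played on a board of 64 squares.", "Wikipedia")],
    "How many squares does a chessboard have?",
)
print(prompt)
```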

Conclusion: A Look into Promising Techniques and Future Directions

OpenAI’s ChatGPT showcases the uncharted potential of Large Language Models (LLMs) in tackling complex tasks with remarkable efficiency. Advanced techniques such as few-shot learning, ReAct prompting, chain-of-thought, and STaR allow us to harness this potential across a plethora of applications. As we dig deeper into the nuances of these methodologies, we discover how they are shaping the landscape of AI, offering richer and safer interactions between humans and machines.

Despite challenges such as knowledge conflict, over-reliance on parametric knowledge, and the potential for hallucination, these AI models, with the right prompt engineering, have proven to be transformative tools. Instruction fine-tuning, context-faithful prompting, and integration with external data sources further amplify their capability to reason, learn, and adapt.