In the first article of this series, we discussed communal computing devices and the problems they create, or, more precisely, the problems that arise because we don’t really understand what “communal” means. Communal devices are intended to be used by groups of people in homes and offices. Examples include popular home assistants and smart displays like the Amazon Echo, Google Home, Apple HomePod, and many others. If we don’t create these devices with communities of people in mind, we will continue to build the wrong ones.

Ever since the concept of a “user” was invented (which was probably later than you think), we’ve assumed that devices are “owned” by a single user. Someone buys the device and sets up the account; it’s their device, their account. When we’re building shared devices with a user model, that model quickly runs into limitations. What happens when you want your home assistant to play music for a dinner party, but your preferences have been skewed by your children’s listening habits? We, as users, have certain expectations for what a device should do. But we, as technologists, have typically ignored our own expectations when designing and building those devices.

This expectation isn’t a new one either. The telephone in the kitchen was for everyone’s use. After the release of the iPad in 2010, Craig Hockenberry discussed the great value of communal computing but also the concerns:

“When you pass it around, you’re giving everyone who touches it the opportunity to mess with your private life, whether intentionally or not. That makes me uneasy.”

Communal computing requires a new mindset that takes into account users’ expectations. If the devices aren’t designed with those expectations in mind, they’re destined for the landfill. Users will eventually experience “weirdness” and “annoyance” that grows into distrust of the device itself. As technologists, we often call these weirdnesses “edge cases.” That’s precisely where we’re wrong: they’re not edge cases; they’re at the core of how people want to use these devices.

In the first article, we listed five core questions we should ask about communal devices:

- Identity: Do we know all of the people who are using the device?

- Privacy: Are we exposing (or hiding) the right content for all of the people with access?

- Security: Are we allowing all of the people using the device to do or see what they should, and are we protecting the content from people who shouldn’t?

- Experience: What is the contextually appropriate display or next action?

- Ownership: Who owns all of the data and services attached to the device that multiple people are using?

In this article, we’ll take a deeper look at these questions, to see how the problems manifest and how to understand them.

Identity

All of the problems we’ve listed start with the idea that there is one registered and known person who should use the device. That model doesn’t fit reality: the identity of a communal device isn’t a single person, but everyone who can interact with it. This could be anyone able to tap the screen, make a voice command, use a remote, or simply be sensed by it. To understand this communal model and the problems it poses, start with the person who buys and sets up the device. It is associated with that individual’s account, like a personal Amazon account with its order history and shopping list. Then it gets difficult. Who doesn’t, can’t, or shouldn’t have full access to an Amazon account? Do you want everyone who comes into your house to be able to add something to your shopping list?

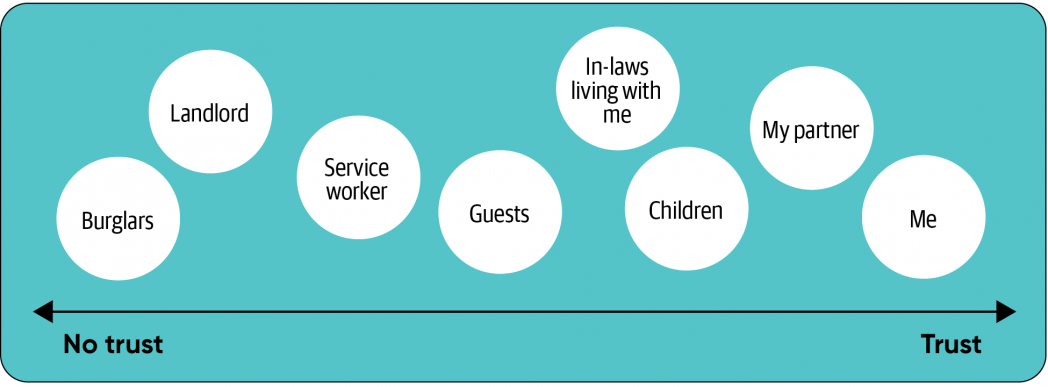

If you think about the spectrum of people who could be in your house, they range from people whom you trust, to people whom you don’t really trust but who should be there, to those whom you shouldn’t trust at all.

In addition to individuals, we need to consider the groups that each person could be part of. These group memberships are called “pseudo-identities”; they are facets of a person’s full identity. They are usually defined by how the person has associated themself with a group of other people. My life at work, at home, in a high school friends group, and as a sports fan show different parts of my identity. When I’m with other people who share the same pseudo-identity, we can share information. When there are people from one group in front of a device, I may avoid showing content that is associated with another group (or another personal pseudo-identity). This can sound abstract, but it isn’t; if you’re with friends in a sports bar, you probably want notifications about the teams you follow. You probably don’t want news about work, unless it’s an emergency.
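The sports bar example can be sketched in a few lines of code. This is only an illustration under assumptions: the `Notification` class, the pseudo-identity labels, and the `emergency` flag are hypothetical names invented here, not part of any real assistant platform.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Notification:
    text: str
    pseudo_identity: str  # hypothetical label such as "work" or "sports_fan"
    emergency: bool = False


def visible_notifications(notifications, active_identity):
    """Keep notifications for the active pseudo-identity, plus any emergency."""
    return [
        n for n in notifications
        if n.pseudo_identity == active_identity or n.emergency
    ]


inbox = [
    Notification("Your team scored", "sports_fan"),
    Notification("Weekly status report is due", "work"),
    Notification("Production outage, call in now", "work", emergency=True),
]

# At the sports bar, the active pseudo-identity is "sports_fan".
shown = visible_notifications(inbox, active_identity="sports_fan")
for n in shown:
    print(n.text)
```

The hard part is not the filter itself but deciding which pseudo-identity is active, which depends on who else is present and where the device sits.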

There are important reasons why we show a particular facet of our identity in a particular context. When designing an experience, you need to consider the identity context and where the experience will take place. Most recently this has come up with working from home. Many people talk about “bringing your whole self to work,” but don’t realize that “your whole self” isn’t always appropriate. Remote work changes when and where I should interact with work. For a smart screen in my kitchen, it is appropriate to have content that is related to my home and family. Is it appropriate to have all of my work notifications and meetings there? Could it be a problem for children to have the ability to join my work calls? What does my IT group require as far as security of work devices versus personal home devices?

With these devices we may need to switch to a different pseudo-identity to get something done. I may need to be reminded of a work meeting. When I get a notification from a close friend, I need to decide whether it is appropriate to respond based on the other people around me.

The pandemic has broken down the barriers between home and work. The natural context switch, from being at work and worrying about work things to going home and worrying about home things, no longer happens on its own. People need to make a conscious effort to “turn off work” and to change the context. Just because it is the middle of the workday doesn’t always mean I want to be bothered by work. I may want to change contexts to take a break. Such context shifts add nuance to the way the current pseudo-identity should be considered, and to the overarching context you need to detect.

Next, we need to consider identities as groups that I belong to. I’m part of my family, and my family would potentially want to talk with other families. I live in a house that is on my street alongside other neighbors. I’m part of an organization that I identify as my work. These are all pseudo-identities we should consider, based on where the device is placed and in relation to other equally important identities.

The crux of the problem with communal devices is the multiple identities that are or may be using the device. This requires a greater understanding of who is using the device, where, and why. We need to consider the types of groups that are part of the home and office.

Privacy

As we consider the identities of all people with access to the device, and the identity of the place the device is to be part of, we start to consider what privacy expectations people may have given the context in which the device is used.

Privacy is hard to understand. The framework I’ve found most helpful is Contextual Integrity, which was introduced by Helen Nissenbaum in the book Privacy in Context. Contextual Integrity describes four key aspects of privacy:

- Privacy is provided by appropriate flows of information.

- Appropriate information flows are those that conform to contextual information norms.

- Contextual informational norms refer to five independent parameters: data subject, sender, recipient, information type, and transmission principle.

- Conceptions of privacy are based on ethical concerns that evolve over time.

What is most important about Contextual Integrity is that privacy is not about hiding information away from the public but about giving people a way to control the flow of their own information. The context in which information is shared determines what is appropriate.

This flow either feels appropriate, or not, based on key characteristics of the information (from Wikipedia):

- The data subject: Who or what is this about?

- The sender of the data: Who is sending it?

- The recipient of the data: Who will eventually see or get the data?

- The information type: What type of information is this (e.g. a photo, text)?

- The transmission principle: In what set of norms is this being shared (e.g. school, medical, personal communication)?

We rarely acknowledge how a subtle change in one of these parameters could be a violation of privacy. It may be completely acceptable for my friend to have a weird photo of me, but once it gets posted on a company intranet site it violates how I want information (a photo) to flow. The recipient of the data has changed to something I no longer find acceptable. But I might not care whether a complete stranger (like a burglar) sees the photo, as long as it never gets back to someone I know.
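To make the five parameters concrete, here is a minimal sketch that records an information flow and reports which parameters differ from the flow I originally expected. The `Flow` class and `changed_parameters` function are illustrative names made up for this example; Contextual Integrity is a framework, not an API.

```python
from dataclasses import dataclass, fields, replace


@dataclass(frozen=True)
class Flow:
    data_subject: str
    sender: str
    recipient: str
    information_type: str
    transmission_principle: str


def changed_parameters(expected: Flow, actual: Flow):
    """Return the names of the parameters where the actual flow departs from the expected one."""
    return [
        f.name for f in fields(expected)
        if getattr(expected, f.name) != getattr(actual, f.name)
    ]


# The flow I am comfortable with: a weird photo of me, shared with a friend.
expected = Flow("me", "me", "friend", "photo", "personal communication")

# The same photo ends up on a company intranet: only the recipient changes.
posted = replace(expected, recipient="company intranet")

print(changed_parameters(expected, posted))  # ['recipient']
```

A changed parameter is only a signal, not a verdict. As the stranger example shows, some changes of recipient are acceptable, so a fuller model would need a set of acceptable values per context rather than a single expected flow.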

For communal use cases, the sender or receiver of information is often a group. There may be multiple people in the room during a video call, not just the person you are calling. People can walk in and out. I might be happy with some people in my home seeing a particular photo, but find it embarrassing if it is shown to guests at a dinner party.

We must also consider what happens when other people’s content is shown to those who shouldn’t see it. This content could be photos or notifications from people outside the communal space that could be seen by anyone in front of the device. Smartphones can hide message contents when you aren’t near your phone for this exact reason.

The services themselves can expand the “receivers” of information in ways that create uncomfortable situations. In Privacy in Context, Nissenbaum talks about the privacy implications of Google Street View when it places photos of people’s houses on Google Maps. When a house was only visible to people who walked down the street, that was one thing, but when anyone in the world can access a picture of a house, that changes the parameters in a way that causes concern. Most recently, IBM used Flickr photos that were shared under a Creative Commons license to train facial recognition algorithms. While this didn’t require any change to the terms of service, it was a surprise to people and may be in violation of the Creative Commons license. In the end, IBM took the dataset down.

Privacy considerations for communal devices should focus on who is gaining access to information and whether it is appropriate based on people’s expectations. Without using a framework like Contextual Integrity we will be stuck talking about generalized rules for data sharing, and there will always be edge cases that violate someone’s privacy.

A note about children

Children make identity and privacy especially tricky. About 40% of all households have a child. Children shouldn’t be an afterthought. If you aren’t compliant with local laws you can get in a lot of trouble. In 2019, YouTube had to settle with the FTC for a $170 million fine for selling ads targeting children. It gets complicated because the “age of consent” depends on the region as well: COPPA in the US is for people under 13 years old, CCPA in California is for people under 16, and GDPR overall is under 16 years old but each member state can set its own. The moment you acknowledge children are using your platforms, you need to accommodate them.
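One way to keep these regional differences from being scattered through a codebase is a single lookup table. The sketch below uses only the thresholds stated above; the regime keys, the function name, and the `member_state_age` override are assumptions for illustration, and the numbers should be checked against current law rather than taken from this example.

```python
from typing import Optional

# Thresholds as stated in the text above; verify before relying on them.
CONSENT_AGE = {
    "us_coppa": 13,
    "california_ccpa": 16,
    "eu_gdpr_default": 16,
}


def is_treated_as_child(age: int, regime: str,
                        member_state_age: Optional[int] = None) -> bool:
    """Return True if a person of this age falls under the regime's threshold."""
    threshold = CONSENT_AGE[regime]
    # GDPR lets each member state set its own age, so allow an explicit override.
    if regime == "eu_gdpr_default" and member_state_age is not None:
        threshold = member_state_age
    return age < threshold


print(is_treated_as_child(14, "us_coppa"))         # False
print(is_treated_as_child(14, "california_ccpa"))  # True
```

The harder problem for a communal device is upstream of this check: it usually does not know the age of the person in front of it.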

For communal devices, there are many use cases for children. Once they realize they can play whatever music they want (including tracks of fart sounds) on a shared device, they will do it. Children focus on exploration over the task and will end up discovering far more about the device than parents might. Adjusting your practices after building a device is a recipe for failure. You will find that the paradigms you choose for other parties won’t align with the expectations for children, and modifying your software to accommodate children is difficult or impossible. It’s important to account for children from the beginning.

Security

To get to a home assistant, you usually need to pass through a home’s outer door. There is usually a physical limitation by way of a lock. There may be alarm systems. Finally, there are social norms: you don’t just walk into someone else’s house without knocking or being invited.

Once you are past all of these locks, alarms, and norms, anyone can access the communal device. Few things within a home are restricted, with the possible exception of a safe with important documents. When a communal device requires authentication, it is usually subverted in some way for convenience: for example, a password might be taped to it, or a password may never have been set.

The concept of Zero Trust Networks speaks to this problem. It comes down to a key question: is the risk associated with an action greater than the trust we have that the person performing the action is who they say they are?

Passwords, passcodes, or mobile device authentication become nuisances; these supposed secrets are frequently shared between everyone who has access to the device. Passwords might be written down for people who can’t remember them, making them visible to less trusted people visiting your household. Have we not learned anything since the movie WarGames?

When we consider the risk associated with an action, we need to understand its privacy implications. Would the action expose someone’s information without their knowledge? Would it allow a person to pretend to be someone else? Could another party easily tell that the device was being used by an imposter?

There is a tradeoff between trust and risk. The device needs to calculate whether we know who the person is and whether the person wants the information to be shown. That needs to be weighed against the potential risk or harm if an inappropriate person is in front of the device.

A few examples of this tradeoff:

| Feature | Risk and trust calculation | Possible issues |
| --- | --- | --- |
| Showing a photo when the device detects someone in the room | Photo content sensitivity, who is in the room | Showing an inappropriate photo to a complete stranger |
| Starting a video call | Person’s account being used for the call, the actual person starting the call | When the other side picks up it may not be who they thought it would be |
| Playing a personal song playlist | Personal recommendations being impacted | Incorrect future recommendations |
| Automatically ordering something based on a voice command | Convenience of ordering, approval of the shopping account’s owner | Shipping an item that shouldn’t have been ordered |
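The trust and risk question can be sketched as a simple comparison. Everything numeric here is an assumption: the risk scores and the confidence value are made-up figures on a 0.0 to 1.0 scale, and `Action` and `decide` are hypothetical names rather than any vendor’s API. A real device would need far richer inputs.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Action:
    name: str
    risk: float  # hypothetical 0.0-1.0 estimate of harm if the wrong person triggers it


def decide(action: Action, identity_confidence: float) -> str:
    """Allow when trust in the person's identity covers the action's risk."""
    if identity_confidence >= action.risk:
        return "allow"
    return "ask_for_confirmation"


play_playlist = Action("play personal playlist", risk=0.2)
place_order = Action("order by voice", risk=0.8)

confidence = 0.6  # e.g. a voice-profile match score; the value is invented

print(decide(play_playlist, confidence))  # allow
print(decide(place_order, confidence))    # ask_for_confirmation
```

Falling back to a confirmation step rather than a flat refusal is one design choice among several; it adds friction only where the potential harm is high.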

This gets even trickier when people no longer in the home can access the devices remotely. There have been cases of harassment, intimidation, and domestic abuse by people whose access should have been revoked: for example, an ex-partner turning off the heating system. When should someone be able to access communal devices remotely? When should their access be controllable from the devices themselves? How should people be reminded to update their access control lists? How does basic security maintenance happen inside a communal space?

See how much work this takes in a recent account of pro bono security work for a harassed mother and her son. Or how a YouTuber was blackmailed, surveilled, and harassed by her smart home. Apple even has a manual for this type of situation.

At home, where there’s no corporate IT group to create policies and automation to keep things secure, it’s next to impossible to manage all of these security issues. Even some corporations have trouble with it. We need to figure out how users will maintain and configure a communal device over time. Configuration for devices in the home and office can be fraught, because needs vary widely and change over time.

For example, what happens when someone leaves the home and is no longer part of it? We will need to remove their access and may even find it necessary to block them from certain services. This is highlighted by the cases of harassment through spouses who still control the communal devices. Ongoing maintenance of a particular device could also be triggered by a change in the community’s needs. A home device may be used just to play music or check the weather at first. But when a new baby comes home, being able to do video calling with close relatives may become a higher priority.

End users are usually very bad at changing configuration after it is set. They may not even know that they can configure something in the first place. This is why people have made a business out of setting up home stereo and video systems. People just don’t understand the technologies they are putting in their houses. Does that mean we need some type of handy-person who does home device setup and management? When more complicated routines are required to meet the needs, how does someone allow for changes without writing code, if they are allowed to?

Communal devices need new paradigms of security that go beyond the standard login. The world inside a home is protected by a barrier like a locked door; the capabilities of communal devices should respect that. This means both removing friction in some cases and increasing it in others.

A note about biometrics

(Source: Google Face Match video, https://youtu.be/ODy_xJHW6CI?t=26)

Biometric authentication for voice and face recognition can help us get a better understanding of who is using a device. Examples of biometric authentication include FaceID for the iPhone and voice profiles for Amazon Alexa. There is a push for regulation of facial recognition technologies, but opt-in for authentication purposes tends to be carved out.

However, biometrics aren’t without problems. In addition to issues with skin tone, gender bias, and local accents, biometrics assumes that everyone is willing to have a biometric profile on the device, and that they would be legally allowed to (for example, children may not be allowed to consent to a biometric profile). It also assumes this technology is secure. Google FaceMatch makes it very clear it is only a technology for personalization, rather than authentication. I can only guess they have legalese to avoid liability when an unauthorized person spoofs someone’s face, say by taking a photo off the wall and showing it to the device.

What do we mean by “personalization”? When you walk into a room and FaceMatch identifies your face, the Google Home Hub dings, shows your face icon, then shows your calendar (if it is connected) and a feed of personalized cards. Apple’s FaceID uses many levels of presentation attack detection (also known as “anti-spoofing”): it verifies your eyes are open and you are looking at the screen, and it uses a depth sensor to make sure it isn’t “seeing” a photo. The phone can then show hidden notification content or open the phone to the home screen. This measurement of trust and risk benefits from understanding who could be in front of the device. We can’t forget that the machine learning that is doing biometrics is not a deterministic calculation; there is always some degree of uncertainty.

Social and information norms define what we consider acceptable, who we trust, and how much. As trust goes up, we can take more risks in the way we handle information. However, it’s difficult to connect trust with risk without understanding people’s expectations. I have access to my partner’s iPhone and know the passcode. It would be a violation of a norm if I walked over and unlocked it without being asked, and doing so would lead to reduced trust between us.

As we can see, biometrics does offer some benefits but won’t be the panacea for the unique uses of communal devices. Biometrics will allow those willing to opt in to the collection of their biometric profile to gain personalized access with low friction, but it will never be usable for everyone with physical access.

Experiences

People use a communal device for short experiences (checking the weather), ambient experiences (listening to music or glancing at a photo), and joint experiences (multiple people watching a movie). The device needs to be aware of norms within the space and between the multiple people in the space. Social norms are rules by which people decide how to act in a particular context or space. In the home, there are norms about what people should and should not do. If you are a guest, you try to see if people take their shoes off at the door; you don’t rearrange things on a bookshelf; and so on.

Most software is built to work for as many people as possible; this is called generalization. Norms stand in the way of generalization. Today’s technology isn’t good enough to adapt to every possible situation. One strategy is to simplify the software’s functionality and let the humans enforce norms. For example, when multiple people talk to an Echo at the same time, Alexa will either not understand or it will take action on the last command. Multi-turn conversations between multiple people are still in their infancy. This is fine when there are understood norms, for example, between my partner and me. But it doesn’t work so well when you and a child are both trying to shout commands.

Norms are interesting because they tend to be learned and negotiated over time, but are invisible. Experiences that are built for communal use need to be aware of these invisible norms through cues that can be detected from people’s actions and words. This gets especially tricky because a conversation between two people could include information subject to different expectations (in a Contextual Integrity sense) about how that information is used. With enough data, models can be created to “read between the lines” in both helpful and dangerous ways.

Video games already cater to multiple people’s experiences. With the Nintendo Switch or any other gaming system, several people can play together in a joint experience. However, the rules governing these experiences are never applied to, say, Netflix. The assumption is always that one person holds the remote. How might these experiences be improved if software could accept input from multiple sources (remote controls, voice, etc.) to build a selection of movies that is appropriate for everyone watching?

Communal experience problems highlight inequalities in households. With women doing more household coordination than ever, there is a need to rebalance the tasks for households. Most of the time these coordination tasks are relegated to personal devices, often the wife’s mobile phone, even though they involve the entire family (though there is a digital divide outside the US). Without moving these experiences into a place that everyone can participate in, we will perpetuate these inequalities.

So far, technology has been great at intermediating people for coordination through systems like text messaging, social networks, and collaborative documents. We don’t build interaction paradigms that allow multiple people to engage at the same time in their communal spaces. To do this we need to address the fact that the norms that dictate what is appropriate behavior are invisible and pervasive in the spaces where these technologies are deployed.

Ownership

Many of these devices are not really owned by the people who buy them. As part of the current trend towards subscription-based business models, the device won’t function if you don’t subscribe to a service. Those services have license agreements that specify what you can and cannot do (which you can read if you have a few hours to spare and can understand them).

For example, this has been an issue for fans of Amazon’s Blink camera. The home automation industry is fragmented: there are many vendors, each with its own application to control its particular devices. But most people don’t want to use different apps to control their lighting, their television, their security cameras, and their locks. Therefore, people have started to build controllers that span the different ecosystems. Doing so has caused Blink users to get their accounts suspended.

What’s even worse is that these license agreements can change whenever the company wants. Licenses are frequently modified with nothing more than a notification, after which something that was previously acceptable is now forbidden. In 2020, Wink suddenly applied a monthly service charge; if you didn’t pay, the device would stop working. Also in 2020, Sonos caused a stir by saying they were going to “recycle” (disable) old devices. They eventually changed their policy.

The issue isn’t just what you can do with your devices; it’s also what happens to the data they create. Amazon’s Ring partnership with one in ten US police departments troubles many privacy groups because it creates a vast surveillance program. What if you don’t want to be a part of the police state? Make sure you check the right box and read your terms of service. If you’re designing a device, you need to require users to opt in to data sharing (especially as regions adopt GDPR and CCPA-like regulation).

While techniques like federated learning are on the horizon, to avoid latency issues and mass data collection, it remains to be seen whether those techniques are satisfactory for companies that collect data. Is there a benefit to both organizations and their customers to limit or obfuscate the transmission of data away from the device?

Ownership is particularly tricky for communal devices. Consumers who put something in their home have expectations about it, and those expectations collide directly with the way rent-to-use services are pitched. Until we acknowledge that hardware put in a home is different from a cloud service, we will never get it right.

Lots of problems, now what?

Now that we have dived into the various problems that rear their heads with communal devices, what do we do about them? In the next article we discuss a way to consider the map of the communal space. This helps build a better understanding of how the communal device fits in the context of the space and services that exist already.

We will also provide a list of dos and don’ts for leaders, developers, and designers to consider when building a communal device.