Ever since ChatGPT exploded onto the tech scene in November of last year, it's been helping people write all kinds of material, generate code, and find information. It and other large language models (LLMs) have facilitated tasks from fielding customer service calls to taking fast food orders. Given how useful LLMs have been for humans in the short time they've been around, how might a ChatGPT for robots affect their ability to learn and do new things? Researchers at Google DeepMind decided to find out and published their findings in a blog post and paper released last week.

They call their system RT-2. It's short for robotics transformer 2, and it's the successor to robotics transformer 1, which the company released at the end of last year. RT-1 was based on a small language and vision program and specifically trained to do many tasks. The software was used in Alphabet X's Everyday Robots, enabling them to do over 700 different tasks with a 97 percent success rate. But when prompted to do new tasks they weren't trained for, robots using RT-1 were successful only 32 percent of the time.

RT-2 almost doubles this rate, successfully performing new tasks 62 percent of the time it's asked to. The researchers call RT-2 a vision-language-action (VLA) model. It uses text and images it sees online to learn new skills. That's not as simple as it sounds; it requires the software to first "understand" a concept, then apply that understanding to a command or set of instructions, then carry out actions that satisfy those instructions.

One example the paper's authors give is disposing of trash. In previous models, the robot's software would first have to be trained to identify trash. For example, if there's a peeled banana on a table with the peel next to it, the bot would be shown that the peel is trash while the banana isn't. It would then be taught how to pick up the peel, move it to a trash can, and deposit it there.

RT-2 works a little differently, though. Since the model takes in loads of information and data from the internet, it has a general understanding of what trash is, and though it's not trained to throw trash away, it can piece together the steps to complete this task.

The LLMs the researchers used to train RT-2 are PaLI-X (a vision and language model with 55 billion parameters) and PaLM-E (what Google calls an embodied multimodal language model, developed specifically for robots, with 12 billion parameters). "Parameter" refers to a value a machine learning model learns from its training data. In the case of LLMs, the parameters model the relationships between words in a sentence and weigh how likely it is that a given word will be preceded or followed by another word.
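To make "how likely a given word is to be followed by another" concrete, here is a deliberately tiny sketch: a bigram model that simply counts word pairs in a toy corpus. This is an illustrative simplification, not how PaLI-X or PaLM-E work; real LLMs learn billions of neural-network parameters instead of a count table, and the corpus and function names below are hypothetical.

```python
from collections import Counter, defaultdict


def train_bigram_model(sentences):
    """Count how often each word is immediately followed by each other word.

    A toy stand-in for the statistics an LLM learns; the "parameters" here
    are just these counts.
    """
    counts = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.lower().split()
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    return counts


def next_word_probability(counts, prev, nxt):
    """Estimate P(nxt | prev) from the bigram counts (0.0 for unseen words)."""
    followers = counts.get(prev, Counter())
    total = sum(followers.values())
    if total == 0:
        return 0.0
    return followers[nxt] / total


# Hypothetical toy corpus, echoing the trash example above.
corpus = [
    "throw the peel in the trash",
    "put the banana on the table",
    "throw the can in the trash",
]
model = train_bigram_model(corpus)
print(next_word_probability(model, "the", "trash"))  # prints 0.3333333333333333
```

"the" is followed by six different words across the corpus, two of which are "trash", so the model assigns that pair a probability of 2/6. An LLM captures far richer patterns, across long contexts rather than adjacent word pairs, but the underlying idea of scoring likely continuations is the same.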

By finding the relationships and patterns between words in a giant dataset, the models learn from their own inferences. They can eventually figure out how different concepts relate to each other and discern context. In RT-2's case, it translates that knowledge into generalized instructions for robotic actions.

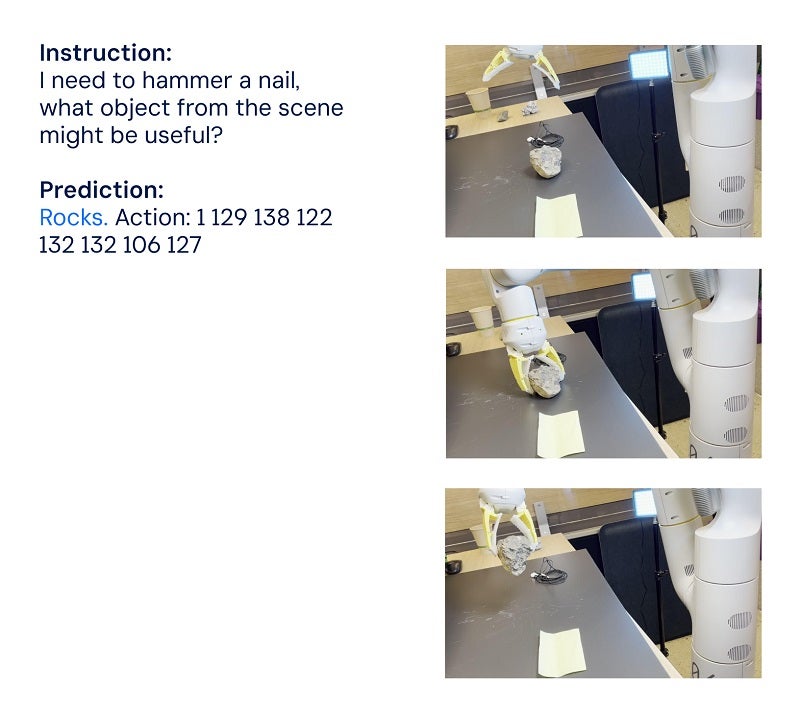

These actions are represented for the robot as tokens, which are usually used to represent natural language text in the form of word fragments. In this case, the tokens are parts of an action, and the software strings multiple tokens together to perform an action. This structure also enables the software to perform chain-of-thought reasoning, meaning it can respond to questions or prompts that require some degree of reasoning.
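The article doesn't spell out how an action becomes tokens. One plausible scheme, sketched below under stated assumptions, is to discretize each dimension of a robot action (arm movement, rotation, gripper) into a fixed number of uniform bins and write each bin index as a text token, so an action looks like a short "sentence" of numbers a language model can output. The RT-2 paper describes an approach along these lines, but `NUM_BINS`, the seven dimensions, and the value ranges in `ACTION_RANGES` are illustrative assumptions, and details such as an episode-termination token are omitted.

```python
NUM_BINS = 256  # assumption: number of discrete bins per action dimension

# Hypothetical (min, max) range for each action dimension, for illustration only.
ACTION_RANGES = [
    (-0.1, 0.1),  # change in gripper x position (m)
    (-0.1, 0.1),  # change in y position (m)
    (-0.1, 0.1),  # change in z position (m)
    (-0.5, 0.5),  # change in roll (rad)
    (-0.5, 0.5),  # change in pitch (rad)
    (-0.5, 0.5),  # change in yaw (rad)
    (0.0, 1.0),   # gripper extension (0 = closed, 1 = open)
]


def action_to_tokens(action):
    """Turn a continuous action vector into a space-separated string of bin indices."""
    tokens = []
    for value, (low, high) in zip(action, ACTION_RANGES):
        clipped = min(max(value, low), high)  # keep the value inside its range
        fraction = (clipped - low) / (high - low)
        # The top edge of the range would map to NUM_BINS, so clamp to the last bin.
        index = min(int(fraction * NUM_BINS), NUM_BINS - 1)
        tokens.append(str(index))
    return " ".join(tokens)


def tokens_to_action(token_string):
    """Decode a token string back to an action, using the center of each bin."""
    action = []
    for token, (low, high) in zip(token_string.split(), ACTION_RANGES):
        bin_width = (high - low) / NUM_BINS
        action.append(low + (int(token) + 0.5) * bin_width)
    return action


# Move the gripper up slightly, rotate it a little, and open it fully.
action = [0.0, 0.0, 0.05, 0.0, 0.0, 0.25, 1.0]
tokens = action_to_tokens(action)
print(tokens)
print(tokens_to_action(tokens))
```

Because every action is just a string of tokens, the same model that reads text and images can "write" a robot command the way it would write a sentence; the cost of this design is that decoded actions are only accurate to within one bin width.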

Examples the team gives include choosing an object to use as a hammer when there's no hammer available (the robot chooses a rock) and choosing the best drink for a tired person (the robot chooses an energy drink).

"RT-2 shows improved generalization capabilities and semantic and visual understanding beyond the robotic data it was exposed to," the researchers wrote in a Google blog post. "This includes interpreting new commands and responding to user commands by performing rudimentary reasoning, such as reasoning about object categories or high-level descriptions."

The dream of general-purpose robots that can help humans with whatever may come up (whether in a home, a commercial setting, or an industrial setting) won't be achievable until robots can learn on the go. What seems like the most basic instinct to us is, for robots, a complex combination of understanding context, being able to reason through it, and taking actions to solve problems that weren't anticipated. Programming them to react appropriately to every possible unplanned scenario is impossible, so they need to be able to generalize and learn from experience, just as humans do.

RT-2 is a step in this direction. The researchers do acknowledge, though, that while RT-2 can generalize semantic and visual concepts, it's not yet able to learn new actions on its own. Rather, it applies the actions it already knows to new scenarios. Perhaps RT-3 or 4 will be able to take these skills to the next level. In the meantime, as the team concludes in their blog post, "While there is still a tremendous amount of work to be done to enable helpful robots in human-centered environments, RT-2 shows us an exciting future for robotics just within grasp."

Image Credit: Google DeepMind