For example, TaylorMade Golf Company turned to Microsoft Syntex for a comprehensive document management system to organize and secure emails, attachments and other documents for intellectual property and patent filings. At the time, company lawyers manually managed this content, spending hours filing and moving documents to be shared and processed later.

With Microsoft Syntex, these documents are automatically classified, tagged and filtered in a way that’s more secure and makes them easy to find through search instead of needing to dig through a traditional file and folder system. TaylorMade is also exploring ways to use Microsoft Syntex to automatically process orders, receipts and other transactional documents for the accounts payable and finance teams.

Other customers are using Microsoft Syntex for contract management and assembly, noted Teper. While every contract may have unique elements, they’re built from common clauses around financial terms, change control, timeline and so forth. Rather than write these common clauses from scratch each time, people can use Syntex to assemble them from various documents and then introduce changes.

“They need AI and machine learning to spot, ‘Hey, this paragraph is very different from our standard terms. This could use some extra oversight,’” he said.

“If you’re trying to read a 100-page contract and look for the thing that’s significantly changed, that’s a lot of work versus the AI helping with that,” he added. “And then there’s the workflow around those contracts: Who approves them? Where are they stored? How do you find them later on? There’s a big part of this that’s metadata.”
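To make the idea of spotting a paragraph that departs from standard terms concrete, here is a minimal sketch in Python. It does not reflect how Microsoft Syntex works internally; it simply compares each clause against a hypothetical library of standard wording using plain string similarity from the standard library. The clause names, sample texts and the 0.8 threshold are all assumptions chosen for illustration.

```python
from difflib import SequenceMatcher
from typing import Dict, List

# Hypothetical standard clauses; a real library would come from approved templates.
STANDARD_CLAUSES = {
    "payment": "Invoices are payable within 30 days of receipt.",
    "change_control": "Changes to scope require written approval from both parties.",
}


def similarity(a: str, b: str) -> float:
    """Return a 0..1 similarity ratio between two clause texts, ignoring case."""
    return SequenceMatcher(None, a.lower(), b.lower()).ratio()


def flag_unusual_clauses(contract: Dict[str, str], threshold: float = 0.8) -> List[str]:
    """List clause names whose wording differs notably from the standard text."""
    flagged = []
    for name, text in contract.items():
        standard = STANDARD_CLAUSES.get(name)
        # Clauses with no standard counterpart are flagged for review as well.
        if standard is None or similarity(text, standard) < threshold:
            flagged.append(name)
    return flagged
```

A production system would likely rely on semantic models rather than character-level similarity, but the workflow is the same: score each clause against a known baseline and route the outliers to a human reviewer.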

When DALL∙E 2 gets personal

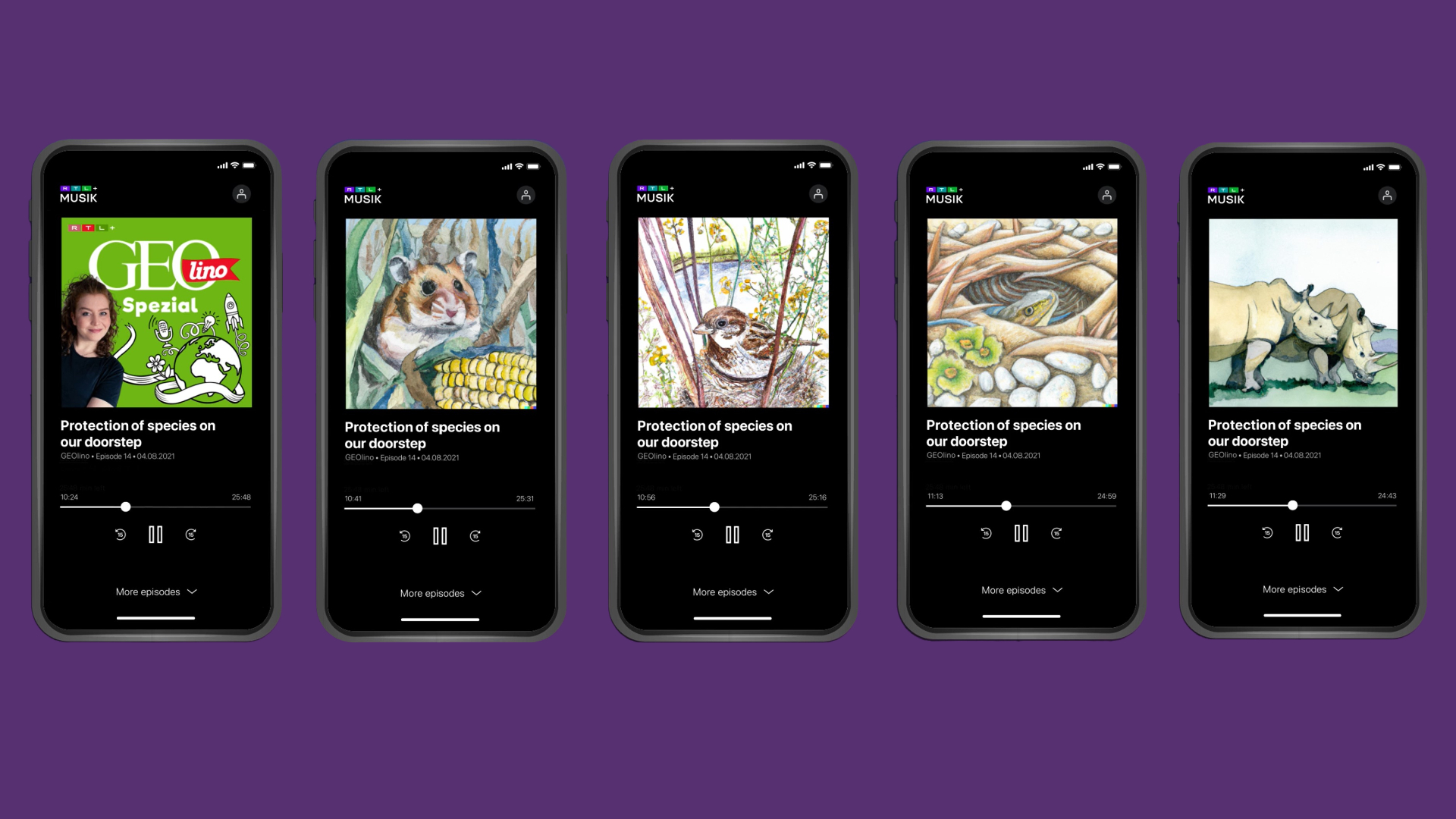

The availability of DALL∙E 2 in Azure OpenAI Service has sparked a series of explorations at RTL Deutschland, Germany’s largest privately held cross-media company, about how to generate personalized images based on customers’ interests. For example, in RTL’s data, research and AI competence center, data scientists are testing various strategies to enhance the user experience through generative imagery.

RTL Deutschland’s streaming service RTL+ is expanding to offer on-demand access to millions of videos, music albums, podcasts, audiobooks and e-magazines. The platform relies heavily on images to grab people’s attention, said Marc Egger, senior vice president of data products and technology for the RTL data team.

“Even if you have the perfect recommendation, you still don’t know whether the user will click on it because the user is using visual cues to decide whether he or she is interested in consuming something. So artwork is really important, and you have to have the right artwork for the right person,” he said.

Imagine a romcom movie about a professional soccer player who gets transferred to Paris and falls in love with a French sportswriter. A sports fan might be more inclined to check out the movie if there’s an image of a soccer game. Someone who loves romance novels or travel might be more interested in an image of the couple kissing under the Eiffel Tower.

Combining the power of DALL∙E 2 with metadata about what kind of content a user has interacted with in the past makes it possible to offer personalized imagery on a previously inconceivable scale, Egger said.

“If you have millions of users and millions of assets, you have the problem that you simply can’t scale it – the workforce doesn’t exist,” he said. “You would never have enough graphic designers to create all the personalized images you want. So, this is an enabling technology for doing things you would not otherwise be able to do.”
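As a rough illustration of how viewing metadata might steer image generation, the Python sketch below turns a user’s history into a text prompt that could be handed to an image model. It is not RTL’s pipeline and makes no call to any image service; the interest tags, the scene mapping and the function names are hypothetical and exist only to show the idea using the romcom example above.

```python
from collections import Counter
from typing import Dict, List

# Hypothetical mapping from a user's dominant interest to a visual angle.
SCENE_BY_INTEREST = {
    "sports": "a dramatic moment in a soccer match",
    "romance": "the couple kissing under the Eiffel Tower",
}
# Fallback scene for users with no history or an unmapped interest.
DEFAULT_SCENE = "the two main characters on a Paris street"


def dominant_interest(history: List[str]) -> str:
    """Return the most frequent genre tag in a user's viewing history."""
    if not history:
        return ""
    return Counter(history).most_common(1)[0][0]


def build_prompt(title_meta: Dict[str, str], history: List[str]) -> str:
    """Compose a text prompt for an image model from title and user metadata."""
    scene = SCENE_BY_INTEREST.get(dominant_interest(history), DEFAULT_SCENE)
    return (
        f"Poster artwork for a {title_meta['genre']} film set in "
        f"{title_meta['setting']}, showing {scene}"
    )
```

Because the prompt is assembled from metadata rather than written by hand, the same title can yield a different image for each audience segment without a designer in the loop, which is the scaling argument Egger describes.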

Egger’s team is also considering how to use DALL∙E 2 in Azure OpenAI Service to create visuals for content that currently lacks imagery, such as podcast episodes and scenes in audiobooks. For instance, metadata from a podcast episode could be used to generate a unique image to accompany it, rather than repeating the same generic podcast image over and over.

Along similar lines, a person who is listening to an audiobook on their phone would typically look at the same book cover art for each chapter. DALL∙E 2 could be used to generate a unique image to accompany each scene in each chapter.

Using DALL∙E 2 through Azure OpenAI Service, Egger added, provides access to other Azure services and tools in one place, which allows his team to work efficiently and seamlessly. “As with all other software-as-a-service products, we can be sure that if we need massive amounts of imagery created by DALL∙E, we are not worried about having it online.”

The appropriate and responsible use of DALL∙E 2

No AI technology has elicited as much excitement as systems such as DALL∙E 2 that can generate images from natural language descriptions, according to Sarah Bird, a Microsoft principal group project manager for Azure AI.

“People love images, and for someone like me who is not visually artistic at all, I’m able to make something much more beautiful than I would ever be able to using other visual tools,” she said of DALL∙E 2. “It’s giving humans a new tool to express themselves creatively and communicate in compelling and fun and engaging ways.”

Her team focuses on the development of tools and techniques that guide people toward the appropriate and responsible use of AI tools such as DALL∙E 2 in Azure AI and that limit their use in ways that could cause harm.

To help prevent DALL∙E 2 from delivering inappropriate outputs in Azure OpenAI Service, OpenAI removed the most explicit sexual and violent content from the dataset used to train the model, and Azure AI deployed filters to reject prompts that violate content policy.

In addition, the team has integrated techniques that prevent DALL∙E 2 from creating images of celebrities as well as objects that are commonly used to try to trick the system into generating sexual or violent content. On the output side, the team has added models that remove AI-generated images that appear to contain adult content, gore and other types of inappropriate content.