This has been a wild year for AI. If you’ve spent much time online, you’ve probably run into images generated by AI systems like DALL-E 2 or Stable Diffusion, or jokes, essays, or other text written by ChatGPT, the latest incarnation of OpenAI’s large language model GPT-3.

Sometimes it’s obvious when a picture or a piece of text has been created by an AI. But increasingly, the output these models generate can easily fool us into thinking it was made by a human. And large language models in particular are confident bullshitters: they create text that sounds correct but in fact may be full of falsehoods.

While that doesn’t matter if it’s just a bit of fun, it can have serious consequences if AI models are used to offer unfiltered health advice or provide other forms of important information. AI systems could also make it stupidly easy to produce reams of misinformation, abuse, and spam, distorting the information we consume and even our sense of reality. It could be particularly worrying around elections, for example.

The proliferation of these easily accessible large language models raises an important question: How will we know whether what we read online is written by a human or a machine? I’ve just published a story looking into the tools we currently have to spot AI-generated text. Spoiler alert: Today’s detection toolkit is woefully inadequate against ChatGPT.

But there’s a more serious long-term implication. We may be witnessing, in real time, the birth of a snowball of bullshit.

Large language models are trained on data sets that are built by scraping the internet for text, including all the toxic, silly, false, malicious things humans have written online. The finished AI models regurgitate these falsehoods as fact, and their output is spread everywhere online. Tech companies scrape the internet again, scooping up AI-written text that they use to train bigger, more convincing models, which humans can use to generate even more nonsense before it is scraped again and again, ad nauseam.

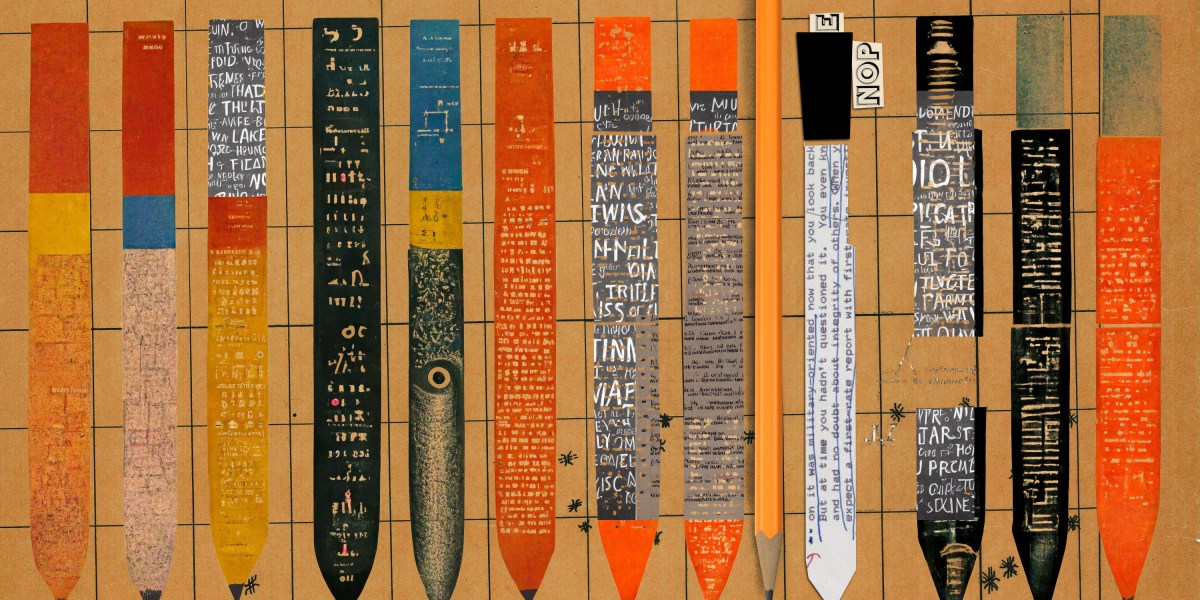

This problem, AI feeding on itself and producing increasingly polluted output, extends to images. “The internet is now forever contaminated with images made by AI,” Mike Cook, an AI researcher at King’s College London, told my colleague Will Douglas Heaven in his new piece on the future of generative AI models.

“The images that we made in 2022 will be a part of any model that is made from now on.”