This post is the foreword written by Brad Smith for Microsoft’s report Governing AI: A Blueprint for the Future. The first part of the report details five ways governments should consider policies, laws, and regulations around AI. The second part focuses on Microsoft’s internal commitment to ethical AI, showing how the company is both operationalizing and building a culture of responsible AI.

“Don’t ask what computers can do, ask what they should do.”

That’s the title of the chapter on AI and ethics in a book I co-authored in 2019. At the time, we wrote that, “This may be one of the defining questions of our generation.” Four years later, the question has seized center stage not just in the world’s capitals, but around many dinner tables.

As people have used or heard about the power of OpenAI’s GPT-4 foundation model, they have often been surprised or even astounded. Many have been enthused or even excited. Some have been concerned or even frightened. What has become clear to almost everyone is something we noted four years ago – we are the first generation in the history of humanity to create machines that can make decisions that previously could only be made by people.

Countries around the world are asking common questions. How can we use this new technology to solve our problems? How can we avoid or manage new problems it might create? How can we control technology that is so powerful?

These questions call not only for broad and thoughtful conversation, but decisive and effective action. This paper offers some of our ideas and suggestions as a company.

These suggestions build on the lessons we’ve been learning based on the work we’ve been doing for several years. Microsoft CEO Satya Nadella set us on a clear course when he wrote in 2016 that, “Perhaps the most productive debate we can have isn’t one of good versus evil: The debate should be about the values instilled in the people and institutions creating this technology.”

Since that time, we’ve defined, published, and implemented ethical principles to guide our work. And we’ve built out constantly improving engineering and governance systems to put these principles into practice. Today, we have nearly 350 people working on responsible AI at Microsoft, helping us implement best practices for building safe, secure, and transparent AI systems designed to benefit society.

New opportunities to improve the human condition

The resulting advances in our approach have given us the capability and confidence to see ever-expanding ways for AI to improve people’s lives. We’ve seen AI help save individuals’ eyesight, make progress on new cures for cancer, generate new insights about proteins, and provide predictions to protect people from hazardous weather. Other innovations are fending off cyberattacks and helping to protect fundamental human rights, even in nations afflicted by foreign invasion or civil war.

Everyday activities will benefit as well. By acting as a copilot in people’s lives, the power of foundation models like GPT-4 is turning search into a more powerful tool for research and improving productivity for people at work. And, for any parent who has struggled to remember how to help their 13-year-old child through an algebra homework assignment, AI-based assistance is a helpful tutor.

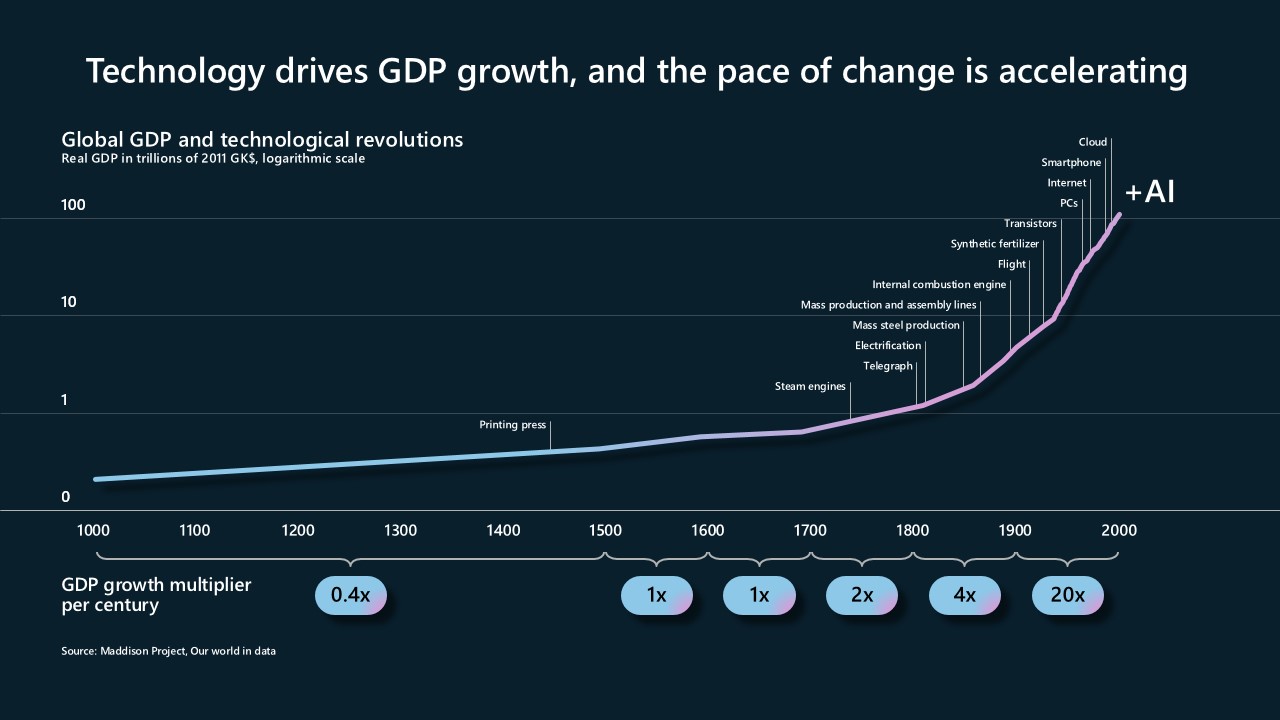

In so many ways, AI offers perhaps even more potential for the good of humanity than any invention that has preceded it. Since the invention of the printing press with movable type in the 1400s, human prosperity has been growing at an accelerating rate. Inventions like the steam engine, electricity, the automobile, the airplane, computing, and the internet have provided many of the building blocks for modern civilization. And, like the printing press itself, AI offers a new tool to genuinely help advance human learning and thought.

Guardrails for the future

Another conclusion is equally important: It’s not enough to focus only on the many opportunities to use AI to improve people’s lives. This is perhaps one of the most important lessons from the role of social media. Little more than a decade ago, technologists and political commentators alike gushed about the role of social media in spreading democracy during the Arab Spring. Yet, five years after that, we learned that social media, like so many other technologies before it, would become both a weapon and a tool – in this case aimed at democracy itself.

Today we are 10 years older and wiser, and we need to put that wisdom to work. We need to think early on and in a clear-eyed way about the problems that could lie ahead. As technology moves forward, it’s just as important to ensure proper control over AI as it is to pursue its benefits. We are committed and determined as a company to develop and deploy AI in a safe and responsible way. We also recognize, however, that the guardrails needed for AI require a broadly shared sense of responsibility and should not be left to technology companies alone.

When we at Microsoft adopted our six ethical principles for AI in 2018, we noted that one principle was the bedrock for everything else – accountability. This is the fundamental need: to ensure that machines remain subject to effective oversight by people, and the people who design and operate machines remain accountable to everyone else. In short, we must always ensure that AI remains under human control. This must be a first-order priority for technology companies and governments alike.

This connects directly with another essential concept. In a democratic society, one of our foundational principles is that no person is above the law. No government is above the law. No company is above the law, and no product or technology should be above the law. This leads to a critical conclusion: People who design and operate AI systems cannot be accountable unless their decisions and actions are subject to the rule of law.

In many ways, this is at the heart of the unfolding AI policy and regulatory debate. How do governments best ensure that AI is subject to the rule of law? In short, what form should new law, regulation, and policy take?

A five-point blueprint for the public governance of AI

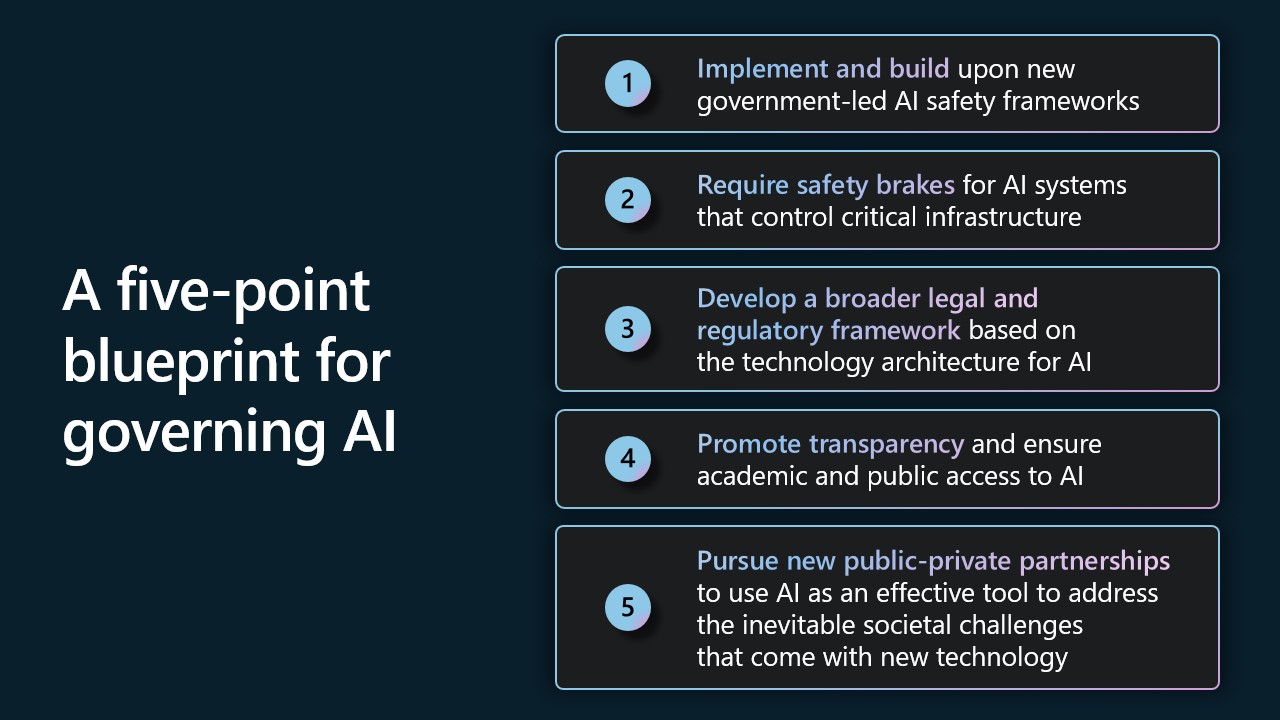

Section One of this paper offers a five-point blueprint to address several current and emerging AI issues through public policy, law, and regulation. We offer this recognizing that every part of this blueprint will benefit from broader discussion and require deeper development. But we hope this can contribute constructively to the work ahead.

First, implement and build upon new government-led AI safety frameworks. The best way to succeed is often to build on the successes and good ideas of others, especially when one wants to move quickly. In this instance, there is an important opportunity to build on work completed just four months ago by the U.S. National Institute of Standards and Technology, or NIST. Part of the Department of Commerce, NIST has completed and launched a new AI Risk Management Framework.

We offer four concrete suggestions to implement and build upon this framework, including commitments Microsoft is making in response to a recent White House meeting with leading AI companies. We also believe the administration and other governments can accelerate momentum through procurement rules based on this framework.

Second, require effective safety brakes for AI systems that control critical infrastructure. In some quarters, thoughtful individuals increasingly are asking whether we can satisfactorily control AI as it becomes more powerful. Concerns are sometimes posed regarding AI control of critical infrastructure like the electrical grid, water system, and city traffic flows.

This is the right time to discuss this question. This blueprint proposes new safety requirements that, in effect, would create safety brakes for AI systems that control the operation of designated critical infrastructure. These fail-safe systems would be part of a comprehensive approach to system safety that would keep effective human oversight, resilience, and robustness top of mind. In spirit, they would be similar to the braking systems engineers have long built into other technologies such as elevators, school buses, and high-speed trains, to safely manage not just everyday scenarios, but emergencies as well.

In this approach, the government would define the class of high-risk AI systems that control critical infrastructure and warrant such safety measures as part of a comprehensive approach to system management. New laws would require operators of these systems to build safety brakes into high-risk AI systems by design. The government would then ensure that operators test high-risk systems regularly to ensure that the system safety measures are effective. And AI systems that control the operation of designated critical infrastructure would be deployed only in licensed AI datacenters that would ensure a second layer of protection through the ability to apply these safety brakes, thereby ensuring effective human control.

Third, develop a broad legal and regulatory framework based on the technology architecture for AI. We believe there will need to be a legal and regulatory architecture for AI that reflects the technology architecture for AI itself. In short, the law will need to place various regulatory responsibilities upon different actors based upon their role in managing different aspects of AI technology.

For this reason, this blueprint includes information about some of the critical pieces that go into building and using new generative AI models. Using this as context, it proposes that different laws place specific regulatory responsibilities on the organizations exercising certain responsibilities at three layers of the technology stack: the applications layer, the model layer, and the infrastructure layer.

This should first apply existing legal protections at the applications layer to the use of AI. This is the layer where the safety and rights of people will most be impacted, especially because the impact of AI can vary markedly in different technology scenarios. In many areas, we don’t need new laws and regulations. We instead need to apply and enforce existing laws and regulations, helping agencies and courts develop the expertise needed to adapt to new AI scenarios.

There will then be a need to develop new law and regulations for highly capable AI foundation models, best implemented by a new government agency. This will impact two layers of the technology stack. The first will require new regulations and licensing for these models themselves. And the second will involve obligations for the AI infrastructure operators on which these models are developed and deployed. The blueprint that follows offers suggested goals and approaches for each of these layers.

In doing so, this blueprint builds in part on a principle developed in recent decades in banking to protect against money laundering and criminal or terrorist use of financial services. The “Know Your Customer” – or KYC – principle requires that financial institutions verify customer identities, establish risk profiles, and monitor transactions to help detect suspicious activity. It would make sense to take this principle and apply a KY3C approach that creates in the AI context certain obligations to know one’s cloud, one’s customers, and one’s content.

In the first instance, the developers of designated, powerful AI models first “know the cloud” on which their models are developed and deployed. In addition, such as for scenarios that involve sensitive uses, the company that has a direct relationship with a customer – whether it be the model developer, application provider, or cloud operator on which the model is operating – should “know the customers” that are accessing it.

Also, the public should be empowered to “know the content” that AI is creating through the use of a label or other mark informing people when something like a video or audio file has been produced by an AI model rather than a human being. This labeling obligation should also protect the public from the alteration of original content and the creation of “deep fakes.” This will require the development of new laws, and there will be many important questions and details to address. But the health of democracy and future of civic discourse will benefit from thoughtful measures to deter the use of new technology to deceive or defraud the public.

Fourth, promote transparency and ensure academic and nonprofit access to AI. We believe a critical public goal is to advance transparency and broaden access to AI resources. While there are some important tensions between transparency and the need for security, there exist many opportunities to make AI systems more transparent in a responsible way. That’s why Microsoft is committing to an annual AI transparency report and other steps to expand transparency for our AI services.

We also believe it is critical to expand access to AI resources for academic research and the nonprofit community. Basic research, especially at universities, has been of fundamental importance to the economic and strategic success of the United States since the 1940s. But unless academic researchers can obtain access to substantially more computing resources, there is a real risk that scientific and technological inquiry will suffer, including relating to AI itself. Our blueprint calls for new steps, including steps we will take across Microsoft, to address these priorities.

Fifth, pursue new public-private partnerships to use AI as an effective tool to address the inevitable societal challenges that come with new technology. One lesson from recent years is what democratic societies can accomplish when they harness the power of technology and bring the public and private sectors together. It’s a lesson we need to build upon to address the impact of AI on society.

We will all benefit from a strong dose of clear-eyed optimism. AI is an extraordinary tool. But, like other technologies, it too can become a powerful weapon, and there will be some around the world who will seek to use it that way. But we should take some heart from the cyber front and the last year-and-a-half in the war in Ukraine. What we found is that when the public and private sectors work together, when like-minded allies come together, and when we develop technology and use it as a shield, it’s more powerful than any sword on the planet.

Important work is needed now to use AI to protect democracy and fundamental rights, provide broad access to the AI skills that will promote inclusive growth, and use the power of AI to advance the planet’s sustainability needs. Perhaps more than anything, a wave of new AI technology provides an occasion for thinking big and acting boldly. In each area, the key to success will be to develop concrete initiatives and bring governments, respected companies, and energetic NGOs together to advance them. We offer some initial ideas in this report, and we look forward to doing much more in the months and years ahead.

Governing AI within Microsoft

Ultimately, every organization that creates or uses advanced AI systems will need to develop and implement its own governance systems. Section Two of this paper describes the AI governance system within Microsoft – where we began, where we are today, and how we are moving into the future.

As this section recognizes, the development of a new governance system for new technology is a journey in and of itself. A decade ago, this field barely existed. Today, Microsoft has almost 350 employees specializing in it, and we are investing in our next fiscal year to grow this further.

As described in this section, over the past six years we have built out a more comprehensive AI governance structure and system across Microsoft. We didn’t start from scratch, borrowing instead from best practices for the protection of cybersecurity, privacy, and digital safety. This is all part of the company’s comprehensive enterprise risk management (ERM) system, which has become a critical part of the management of corporations and many other organizations in the world today.

When it comes to AI, we first developed ethical principles and then had to translate these into more specific corporate policies. We’re now on version 2 of the corporate standard that embodies these principles and defines more precise practices for our engineering teams to follow. We’ve implemented the standard through training, tooling, and testing systems that continue to mature rapidly. This is supported by additional governance processes that include monitoring, auditing, and compliance measures.

As with everything in life, one learns from experience. When it comes to AI governance, some of our most important learning has come from the detailed work required to review specific sensitive AI use cases. In 2019, we founded a sensitive use review program to subject our most sensitive and novel AI use cases to rigorous, specialized review that results in tailored guidance. Since that time, we have completed roughly 600 sensitive use case reviews. The pace of this activity has quickened to match the pace of AI advances, with almost 150 such reviews taking place in the last 11 months.

All of this builds on the work we have done and will continue to do to advance responsible AI through company culture. That means hiring new and diverse talent to grow our responsible AI ecosystem and investing in the talent we already have at Microsoft to develop skills and empower them to think broadly about the potential impact of AI systems on individuals and society. It also means that much more than in the past, the frontier of technology requires a multidisciplinary approach that combines great engineers with talented professionals from across the liberal arts.

All this is offered in this paper in the spirit that we’re on a collective journey to forge a responsible future for artificial intelligence. We can all learn from each other. And no matter how good we may think something is today, we will all need to keep getting better.

As technological change accelerates, the work to govern AI responsibly must keep pace with it. With the right commitments and investments, we believe it can.