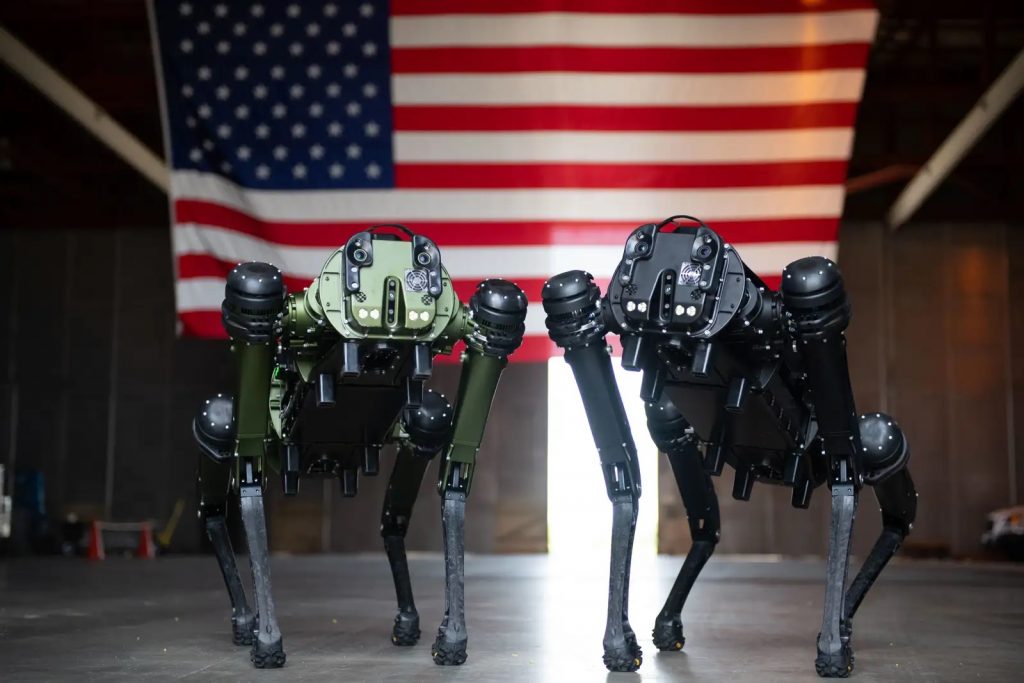

Ghost Robotics Vision 60 Q-UGV. US Space Force photo by Senior Airman Samuel Becker

By Toby Walsh (Professor of AI at UNSW, Research Group Leader, UNSW Sydney)

You might think Hollywood is good at predicting the future. Indeed, Robert Wallace, head of the CIA's Office of Technical Service and the US equivalent of MI6's fictional Q, has recounted how Russian spies would watch the latest Bond movie to see what technologies might be coming their way.

Hollywood's continuing obsession with killer robots might therefore be of significant concern. The newest such movie is Apple TV's forthcoming sex robot courtroom drama Dolly.

I never thought I'd write the phrase "sex robot courtroom drama", but there you go. Based on a 2011 short story by Elizabeth Bear, the plot concerns a billionaire killed by a sex robot that then asks for a lawyer to defend its murderous actions.

The real killer robots

Dolly is the latest in a long line of movies featuring killer robots, including HAL in Kubrick's 2001: A Space Odyssey and Arnold Schwarzenegger's T-800 robot in the Terminator series. Indeed, conflict between robots and humans was at the centre of the very first feature-length science fiction film, Fritz Lang's 1927 classic Metropolis.

But all these movies get it wrong. Killer robots won't be sentient humanoid robots with evil intent. This might make for a dramatic storyline and a box office success, but such technologies are many decades, if not centuries, away.

Indeed, contrary to recent fears, robots may never be sentient.

It's much simpler technologies we should be worrying about. And these technologies are starting to turn up on the battlefield today in places like Ukraine and Nagorno-Karabakh.

Warfare transformed

Movies that feature much simpler armed drones, like Angel Has Fallen (2019) and Eye in the Sky (2015), paint perhaps the most accurate picture of the real future of killer robots.

On the nightly TV news, we see how modern warfare is being transformed by ever more autonomous drones, tanks, ships and submarines. These robots are only a little more sophisticated than those you can buy in your local hobby store.

And increasingly, the decisions to identify, track and destroy targets are being handed over to their algorithms.

This is taking the world to a dangerous place, with a host of moral, legal and technical problems. Such weapons will, for example, further upset our troubled geopolitical situation. We already see Turkey emerging as a major drone power.

And such weapons cross a moral red line into a terrible and terrifying world where unaccountable machines decide who lives and who dies.

Robot manufacturers are, however, starting to push back against this future.

A pledge not to weaponise

Last week, six leading robotics companies pledged they would never weaponise their robot platforms. The companies include Boston Dynamics, which makes the Atlas humanoid robot, which can perform an impressive backflip, and the Spot robot dog, which looks like it's straight out of the Black Mirror TV series.

This isn't the first time robotics companies have spoken out about this worrying future. Five years ago, I organised an open letter signed by Elon Musk and more than 100 founders of other AI and robot companies calling for the United Nations to regulate the use of killer robots. The letter even knocked the Pope into third place for a global disarmament award.

However, the fact that leading robotics companies are pledging not to weaponise their robot platforms is more virtue signalling than anything else.

We have, for example, already seen third parties mount guns on clones of Boston Dynamics' Spot robot dog. And such modified robots have proven effective in action. Iran's top nuclear scientist was assassinated by Israeli agents using a robot machine gun in 2020.

Collective action to safeguard our future

The only way we can safeguard against this terrifying future is if nations collectively take action, as they have with chemical weapons, biological weapons and even nuclear weapons.

Such regulation won't be perfect, just as the regulation of chemical weapons isn't perfect. But it will prevent arms companies from openly selling such weapons, and thus limit their proliferation.

It is therefore far more significant than any pledge from robotics companies that the UN Human Rights Council recently decided, unanimously, to explore the human rights implications of new and emerging technologies like autonomous weapons.

Several dozen nations have already called for the UN to regulate killer robots. The European Parliament, the African Union, the UN Secretary-General, Nobel peace laureates, church leaders, politicians and thousands of AI and robotics researchers like myself have all called for regulation.

Australia is not a country that has, so far, supported these calls. But if you want to avoid this Hollywood future, you may want to take it up with your political representative next time you see them.


Toby Walsh does not work for, consult, own shares in or receive funding from any company or organisation that would benefit from this article, and has disclosed no relevant affiliations beyond their academic appointment.

This article appeared in The Conversation.

tags: c-Military-Defense

The Conversation is an independent source of news and views, sourced from the academic and research community and delivered direct to the public.
