After telegraphing the move in media appearances, OpenAI has launched a tool that attempts to distinguish between human-written and AI-generated text, like the text produced by the company’s own ChatGPT and GPT-3 models. The classifier isn’t particularly accurate (it correctly identifies only around 26% of AI-written text, OpenAI notes), but OpenAI argues that, when used in tandem with other methods, it could help prevent AI text generators from being abused.

“The classifier aims to help mitigate false claims that AI-generated text was written by a human. However, it still has a number of limitations, so it should be used as a complement to other methods of determining the source of text instead of being the primary decision-making tool,” an OpenAI spokesperson told TechCrunch via email. “We’re making this initial classifier available to get feedback on whether tools like this are useful, and hope to share improved methods in the future.”

As the fervor around generative AI, particularly text-generating AI, grows, critics have called on the creators of these tools to take steps to mitigate their potentially harmful effects. Some of the largest school districts in the U.S. have banned ChatGPT on their networks and devices, fearing the impact on student learning and doubting the accuracy of the content that the tool produces. And sites including Stack Overflow have banned users from sharing content generated by ChatGPT, saying that the AI makes it too easy for users to flood discussion threads with dubious answers.

OpenAI’s classifier, aptly called the OpenAI AI Text Classifier, is intriguing architecturally. Like ChatGPT, it is an AI language model trained on many, many examples of publicly available text from the web. But unlike ChatGPT, it’s fine-tuned to predict how likely it is that a piece of text was generated by AI, and not just by ChatGPT but by any text-generating AI model.

More specifically, OpenAI trained the OpenAI AI Text Classifier on text from 34 text-generating systems from five different organizations, including OpenAI itself. This text was paired with similar (but not exactly similar) human-written text from Wikipedia, websites extracted from links shared on Reddit and a set of “human demonstrations” collected for a previous OpenAI text-generating system. (OpenAI admits in a support document, however, that it might have inadvertently misclassified some AI-written text as human-written “given the proliferation of AI-generated content on the internet.”)

Importantly, the OpenAI AI Text Classifier won’t work on just any text. It needs a minimum of 1,000 characters, or about 150 to 250 words. It doesn’t detect plagiarism, an especially unfortunate limitation considering that text-generating AI has been shown to regurgitate the text on which it was trained. And OpenAI says that it’s more likely to get things wrong on text written by children or in a language other than English, owing to its English-forward data set.

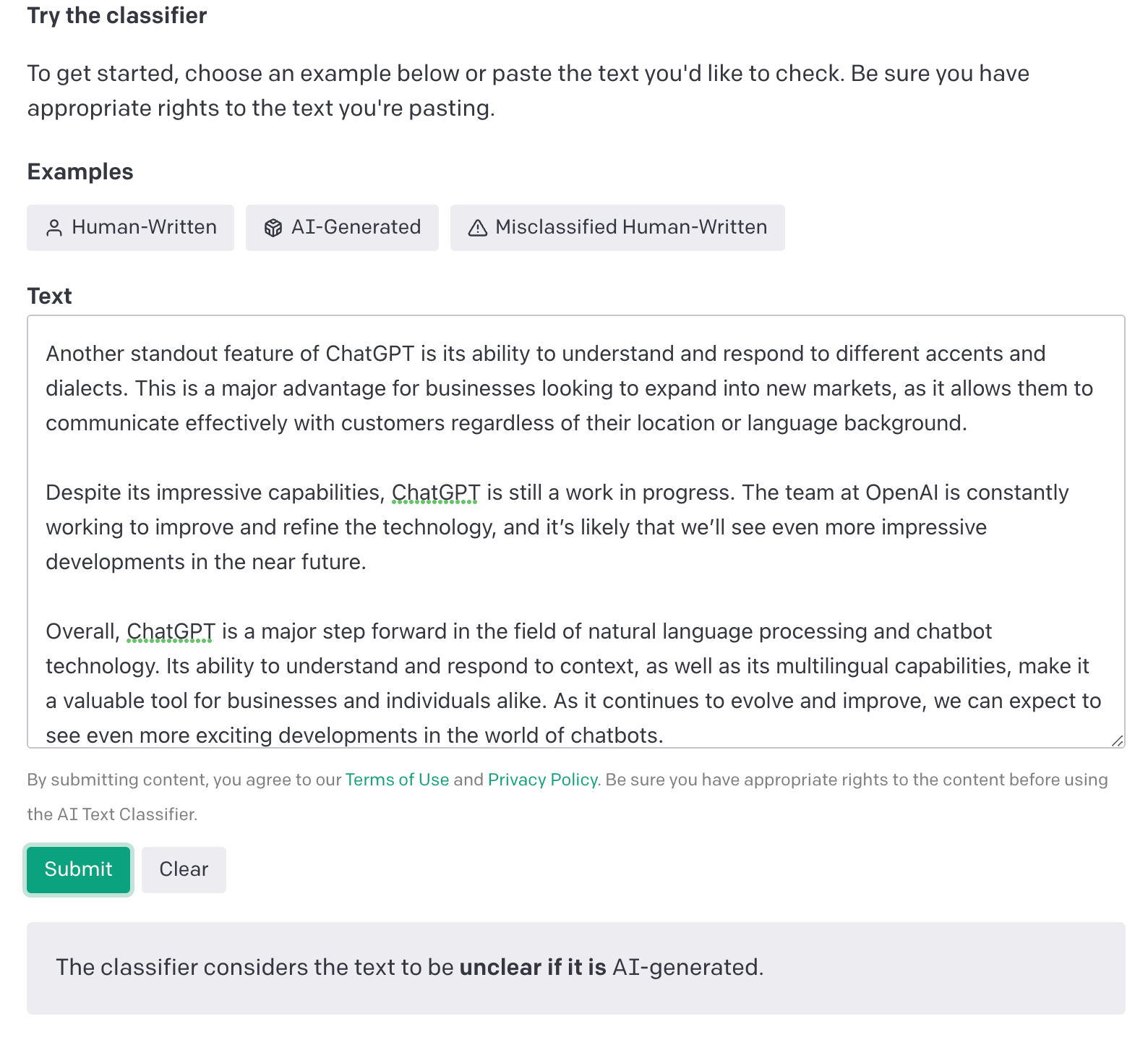

The detector hedges its answer a bit when evaluating whether a given piece of text is AI-generated. Depending on its confidence level, it’ll label text as “very unlikely” AI-generated (less than a 10% chance), “unlikely” AI-generated (between a 10% and 45% chance), “unclear if it is” AI-generated (a 45% to 90% chance), “possibly” AI-generated (a 90% to 98% chance) or “likely” AI-generated (an over 98% chance).
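To make the banding concrete, here is a minimal Python sketch of how a confidence score could be mapped to those five labels. It is not OpenAI’s code: the function name `label_for` is hypothetical, the label wording follows OpenAI’s tool, and the handling of values that fall exactly on an inner boundary (10%, 45%, 90%) is an assumption, since only “less than 10%” and “over 98%” are spelled out.

```python
def label_for(probability: float) -> str:
    """Map a probability that text is AI-generated (0.0 to 1.0) to a label.

    Hypothetical helper for illustration only. Inner boundary values are
    assigned to the higher band, which is an assumption.
    """
    if not 0.0 <= probability <= 1.0:
        raise ValueError("probability must be between 0 and 1")
    if probability < 0.10:       # "less than a 10% chance"
        return "very unlikely"
    if probability < 0.45:       # 10% up to 45%
        return "unlikely"
    if probability < 0.90:       # 45% up to 90%
        return "unclear if it is"
    if probability <= 0.98:      # 90% up to and including 98%
        return "possibly"
    return "likely"              # "an over 98% chance"


if __name__ == "__main__":
    for p in (0.05, 0.30, 0.70, 0.95, 0.99):
        print(f"{p:.2f} -> {label_for(p)} AI-generated")
```

Note how wide the middle band is: on this scheme, anything from a 45% to a 90% chance lands in “unclear if it is,” which is where the hedging comes from.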

Out of curiosity, I fed some text through the classifier to see how it might manage. While it confidently and correctly predicted that several paragraphs from a TechCrunch article about Meta’s Horizon Worlds and a snippet from an OpenAI support page weren’t AI-generated, the classifier had a tougher time with article-length text from ChatGPT, ultimately failing to classify it altogether. It did, however, successfully spot ChatGPT output from a Gizmodo piece about (what else?) ChatGPT.

According to OpenAI, the classifier incorrectly labels human-written text as AI-written 9% of the time. That mistake didn’t happen in my testing, but I chalk that up to the small sample size.
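What those error rates mean in practice depends on how much of the text being checked is actually AI-written. The sketch below works through the arithmetic with Bayes’ rule. It reads the roughly 26% figure as the share of AI-written text the classifier catches, which is how OpenAI describes it, and uses the 9% false-positive rate quoted above. The function name `flag_precision` and the example shares of AI-written text (50% and 10%) are assumptions chosen purely for illustration.

```python
def flag_precision(true_positive_rate: float,
                   false_positive_rate: float,
                   ai_share: float) -> float:
    """Fraction of flagged texts that really are AI-written.

    ai_share is the assumed fraction of all checked texts that are
    AI-written. It is an illustrative input, not a reported figure.
    """
    true_flags = true_positive_rate * ai_share
    false_flags = false_positive_rate * (1.0 - ai_share)
    if true_flags + false_flags == 0:
        raise ValueError("no texts would be flagged with these inputs")
    return true_flags / (true_flags + false_flags)


if __name__ == "__main__":
    # 26% and 9% are the rates OpenAI reports; the shares are hypothetical.
    for share in (0.5, 0.1):
        p = flag_precision(0.26, 0.09, share)
        print(f"AI share {share:.0%}: {p:.1%} of flagged texts are AI-written")
```

Under these assumptions, the rarer AI-written text is in the pool being checked, the larger the share of flags that land on human writers, which is one reason OpenAI frames the tool as a complement to other methods.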

Image Credits: OpenAI

On a practical level, I found the classifier not particularly useful for evaluating shorter pieces of writing. A thousand characters is a difficult threshold to reach in the realm of messages such as emails (at least the ones I get on a regular basis). And the limitations give pause: OpenAI emphasizes that the classifier can be evaded by modifying some words or clauses in generated text.

That’s not to suggest the classifier is useless. Far from it. But it certainly won’t stop committed fraudsters (or students, for that matter) in its current state.

The question is, will other tools? Something of a cottage industry has sprung up to meet the demand for AI-generated text detectors. GPTZero, developed by a Princeton University student, uses criteria including “perplexity” (the complexity of text) and “burstiness” (the variations of sentences) to detect whether text might be AI-written. Plagiarism detector Turnitin is developing its own AI-generated text detector. Beyond those, a Google search yields at least a half-dozen other apps that claim to be able to separate the AI-generated wheat from the human-generated chaff, to torture the metaphor.
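For a rough sense of what those two criteria measure, here is a toy Python sketch. It is not how the Princeton student’s tool or any other detector computes its scores: burstiness is approximated as the spread of sentence lengths, and perplexity is computed against a simple word-frequency model built from a reference text, whereas a real detector would presumably rely on a far stronger language model. All function names are hypothetical.

```python
import math
import re
import statistics
from collections import Counter


def words(text: str) -> list[str]:
    """Lowercase word tokens; a deliberately crude tokenizer."""
    return re.findall(r"[a-z']+", text.lower())


def burstiness(text: str) -> float:
    """Standard deviation of sentence lengths, measured in words."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    lengths = [len(words(s)) for s in sentences]
    return statistics.pstdev(lengths) if lengths else 0.0


def unigram_perplexity(text: str, reference: str) -> float:
    """Perplexity of text under an add-one-smoothed unigram model
    estimated from reference. Higher means more surprising wording."""
    counts = Counter(words(reference))
    total = sum(counts.values())
    vocab = len(counts) + 1  # one extra slot for unseen words
    tokens = words(text)
    if not tokens:
        raise ValueError("text contains no words")
    log_prob = sum(math.log((counts[t] + 1) / (total + vocab)) for t in tokens)
    return math.exp(-log_prob / len(tokens))


if __name__ == "__main__":
    reference = "the cat sat on the mat"
    print(burstiness("Short one. This sentence is quite a bit longer than that."))
    print(unigram_perplexity("the cat", reference))
    print(unigram_perplexity("zebra quark", reference))
```

The intuition detectors lean on is that human writing tends to mix long and short sentences and to use less predictable wording, so low values on both measures are treated as a hint, not proof, of machine generation.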

It’ll likely become a cat-and-mouse game. As text-generating AI improves, so will the detectors, a never-ending back-and-forth similar to that between cybercriminals and security researchers. And as OpenAI writes, while the classifiers might help in certain circumstances, they’ll never be a reliable sole piece of evidence in deciding whether text was AI-generated.

That’s all to say that there’s no silver bullet to solve the problems AI-generated text poses. Quite likely, there never will be.