Introduction

I’m joyful to report a brand new main launch of lime has landed on CRAN. lime is

an R port of the Python library of the identical title by Marco Ribeiro that permits

the person to pry open black field machine studying fashions and clarify their

outcomes on a per-observation foundation. It really works by modelling the result of the

black field within the native neighborhood across the statement to clarify and utilizing

this native mannequin to clarify why (not how) the black field did what it did. For

extra details about the speculation of lime I’ll direct you to the article

introducing the methodology.

New options

The meat of this launch facilities round two new options which are considerably

linked: Native help for keras fashions and help for explaining picture fashions.

keras and pictures

J.J. Allaire was variety sufficient to namedrop lime throughout his keynote introduction

of the tensorflow and keras packages and I felt compelled to help them

natively. As keras is by far the preferred technique to interface with tensorflow

it’s first in line for build-in help. The addition of keras implies that

lime now straight helps fashions from the next packages:

When you’re engaged on one thing too obscure or innovative to not have the ability to use

these packages it’s nonetheless potential to make your mannequin lime compliant by

offering predict_model() and model_type() strategies for it.

keras fashions are used identical to every other mannequin, by passing it into the lime()

perform together with the coaching information in an effort to create an explainer object.

As a result of we’re quickly going to speak about picture fashions, we’ll be utilizing one of many

pre-trained ImageNet fashions that’s obtainable from keras itself:

Mannequin

______________________________________________________________________________________________

Layer (kind) Output Form Param #

==============================================================================================

input_1 (InputLayer) (None, 224, 224, 3) 0

______________________________________________________________________________________________

block1_conv1 (Conv2D) (None, 224, 224, 64) 1792

______________________________________________________________________________________________

block1_conv2 (Conv2D) (None, 224, 224, 64) 36928

______________________________________________________________________________________________

block1_pool (MaxPooling2D) (None, 112, 112, 64) 0

______________________________________________________________________________________________

block2_conv1 (Conv2D) (None, 112, 112, 128) 73856

______________________________________________________________________________________________

block2_conv2 (Conv2D) (None, 112, 112, 128) 147584

______________________________________________________________________________________________

block2_pool (MaxPooling2D) (None, 56, 56, 128) 0

______________________________________________________________________________________________

block3_conv1 (Conv2D) (None, 56, 56, 256) 295168

______________________________________________________________________________________________

block3_conv2 (Conv2D) (None, 56, 56, 256) 590080

______________________________________________________________________________________________

block3_conv3 (Conv2D) (None, 56, 56, 256) 590080

______________________________________________________________________________________________

block3_pool (MaxPooling2D) (None, 28, 28, 256) 0

______________________________________________________________________________________________

block4_conv1 (Conv2D) (None, 28, 28, 512) 1180160

______________________________________________________________________________________________

block4_conv2 (Conv2D) (None, 28, 28, 512) 2359808

______________________________________________________________________________________________

block4_conv3 (Conv2D) (None, 28, 28, 512) 2359808

______________________________________________________________________________________________

block4_pool (MaxPooling2D) (None, 14, 14, 512) 0

______________________________________________________________________________________________

block5_conv1 (Conv2D) (None, 14, 14, 512) 2359808

______________________________________________________________________________________________

block5_conv2 (Conv2D) (None, 14, 14, 512) 2359808

______________________________________________________________________________________________

block5_conv3 (Conv2D) (None, 14, 14, 512) 2359808

______________________________________________________________________________________________

block5_pool (MaxPooling2D) (None, 7, 7, 512) 0

______________________________________________________________________________________________

flatten (Flatten) (None, 25088) 0

______________________________________________________________________________________________

fc1 (Dense) (None, 4096) 102764544

______________________________________________________________________________________________

fc2 (Dense) (None, 4096) 16781312

______________________________________________________________________________________________

predictions (Dense) (None, 1000) 4097000

==============================================================================================

Whole params: 138,357,544

Trainable params: 138,357,544

Non-trainable params: 0

______________________________________________________________________________________________

The vgg16 mannequin is a picture classification mannequin that has been construct as a part of

the ImageNet competitors the place the aim is to categorise photos into 1000

classes with the best accuracy. As we are able to see it’s pretty difficult.

As a way to create an explainer we might want to go within the coaching information as

properly. For picture information the coaching information is actually solely used to inform lime that we

are coping with a picture mannequin, so any picture will suffice. The format for the

coaching information is solely the trail to the photographs, and since the web runs on

kitten photos we’ll use one in every of these:

As with textual content fashions the explainer might want to know how you can put together the enter

information for the mannequin. For keras fashions this implies formatting the picture information as

tensors. Fortunately keras comes with quite a lot of instruments for reshaping picture information:

image_prep <- perform(x) {

arrays <- lapply(x, perform(path) {

img <- image_load(path, target_size = c(224,224))

x <- image_to_array(img)

x <- array_reshape(x, c(1, dim(x)))

x <- imagenet_preprocess_input(x)

})

do.name(abind::abind, c(arrays, record(alongside = 1)))

}

explainer <- lime(img_path, mannequin, image_prep)We now have an explainer mannequin for understanding how the vgg16 neural community

makes its predictions. Earlier than we go alongside, lets see what the mannequin consider our

kitten:

res <- predict(mannequin, image_prep(img_path))

imagenet_decode_predictions(res)[[1]]

class_name class_description rating

1 n02124075 Egyptian_cat 0.48913878

2 n02123045 tabby 0.15177219

3 n02123159 tiger_cat 0.10270492

4 n02127052 lynx 0.02638111

5 n03793489 mouse 0.00852214So, it’s fairly certain about the entire cat factor. The explanation we have to use

imagenet_decode_predictions() is that the output of a keras mannequin is all the time

only a anonymous tensor:

[1] 1 1000NULLWe’re used to classifiers figuring out the category labels, however this isn’t the case

for keras. Motivated by this, lime now have a technique to outline/overwrite the

class labels of a mannequin, utilizing the as_classifier() perform. Let’s redo our

explainer:

model_labels <- readRDS(system.file('extdata', 'imagenet_labels.rds', bundle = 'lime'))

explainer <- lime(img_path, as_classifier(mannequin, model_labels), image_prep)There’s additionally an

as_regressor()perform which tellslime, no doubt,

that the mannequin is a regression mannequin. Most fashions might be introspected to see

which kind of mannequin they’re, however neural networks doesn’t actually care.lime

guesses the mannequin kind from the activation used within the final layer (linear

activation == regression), but when that heuristic fails then

as_regressor()/as_classifier()can be utilized.

We at the moment are able to poke into the mannequin and discover out what makes it assume our

picture is of an Egyptian cat. However… first I’ll have to speak about yet one more

idea: superpixels (I promise I’ll get to the reason half in a bit).

As a way to create significant permutations of our picture (bear in mind, that is the

central concept in lime), we have now to outline how to take action. The permutations wants

to be substantial sufficient to have an effect on the picture, however not a lot that

the mannequin fully fails to recognise the content material in each case – additional,

they need to result in an interpretable outcome. The idea of superpixels lends

itself properly to those constraints. Briefly, a superpixel is a patch of an space

with excessive homogeneity, and superpixel segmentation is a clustering of picture

pixels into a variety of superpixels. By segmenting the picture to clarify into

superpixels we are able to flip space of contextual similarity on and off through the

permutations and discover out if that space is necessary. It’s nonetheless essential to

experiment a bit because the optimum variety of superpixels rely on the content material of

the picture. Bear in mind, we’d like them to be massive sufficient to have an effect however not

so massive that the category chance turns into successfully binary. lime comes

with a perform to evaluate the superpixel segmentation earlier than starting the

rationalization and it is strongly recommended to play with it a bit — with time you’ll

probably get a really feel for the fitting values:

# default

plot_superpixels(img_path)

# Altering some settings

plot_superpixels(img_path, n_superpixels = 200, weight = 40)

The default is ready to a reasonably low variety of superpixels — if the topic of

curiosity is comparatively small it could be needed to extend the variety of

superpixels in order that the complete topic doesn’t find yourself in a single, or a number of

superpixels. The weight parameter will let you make the segments extra

compact by weighting spatial distance increased than color distance. For this

instance we’ll stick to the defaults.

Bear in mind that explaining picture

fashions is way heavier than tabular or textual content information. In impact it should create 1000

new photos per rationalization (default permutation measurement for photos) and run these

by the mannequin. As picture classification fashions are sometimes fairly heavy, this

will end in computation time measured in minutes. The permutation is batched

(default to 10 permutations per batch), so that you shouldn’t be afraid of operating

out of RAM or hard-drive house.

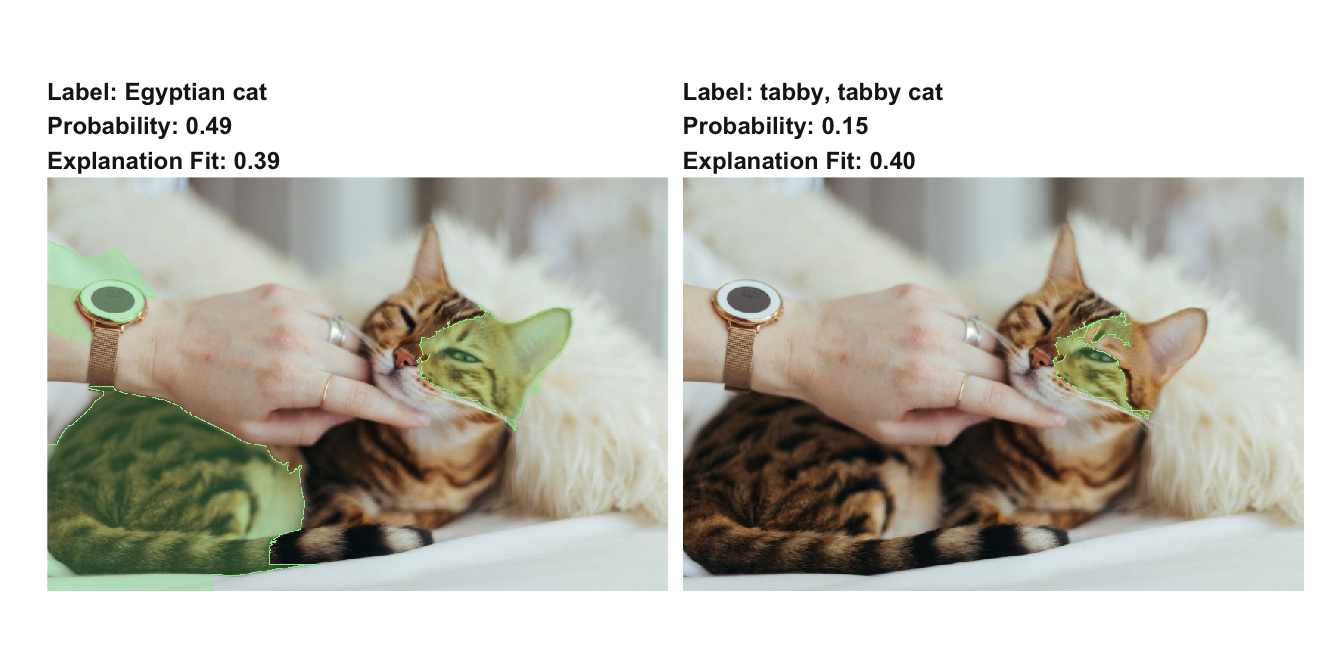

rationalization <- clarify(img_path, explainer, n_labels = 2, n_features = 20)The output of a picture rationalization is a knowledge body of the identical format as that

from tabular and textual content information. Every function might be a superpixel and the pixel

vary of the superpixel might be used as its description. Normally the reason

will solely make sense within the context of the picture itself, so the brand new model of

lime additionally comes with a plot_image_explanation() perform to do exactly that.

Let’s see what our rationalization have to inform us:

plot_image_explanation(rationalization)

We are able to see that the mannequin, for each the foremost predicted courses, focuses on the

cat, which is good since they’re each totally different cat breeds. The plot perform

acquired a number of totally different features that can assist you tweak the visible, and it filters low

scoring superpixels away by default. An alternate view that places extra focus

on the related superpixels, however removes the context might be seen through the use of

show = 'block':

plot_image_explanation(rationalization, show = 'block', threshold = 0.01)

Whereas not as frequent with picture explanations it’s also potential to have a look at the

areas of a picture that contradicts the category:

plot_image_explanation(rationalization, threshold = 0, show_negative = TRUE, fill_alpha = 0.6)

As every rationalization takes longer time to create and must be tweaked on a

per-image foundation, picture explanations should not one thing that you just’ll create in

massive batches as you would possibly do with tabular and textual content information. Nonetheless, a number of

explanations would possibly let you perceive your mannequin higher and be used for

speaking the workings of your mannequin. Additional, because the time-limiting issue

in picture explanations are the picture classifier and never lime itself, it’s sure

to enhance as picture classifiers turns into extra performant.

Seize again

Other than keras and picture help, a slew of different options and enhancements

have been added. Right here’s a fast overview:

- All rationalization plots now embody the match of the ridge regression used to make

the reason. This makes it simple to evaluate how good the assumptions about

native linearity are saved. - When explaining tabular information the default distance measure is now

'gower'

from thegowerbundle.gowermakes it potential to measure distances

between heterogeneous information with out changing all options to numeric and

experimenting with totally different exponential kernels. - When explaining tabular information numerical options will not be sampled from

a standard distribution throughout permutations, however from a kernel density outlined

by the coaching information. This could make sure that the permutations are extra

consultant of the anticipated enter.

Wrapping up

This launch represents an necessary milestone for lime in R. With the

addition of picture explanations the lime bundle is now on par or above its

Python relative, feature-wise. Additional growth will concentrate on bettering the

efficiency of the mannequin, e.g. by including parallelisation or bettering the native

mannequin definition, in addition to exploring various rationalization varieties resembling

anchor.

Pleased Explaining!