If a robot is going to be grasping delicate objects, then that bot had better know what those objects are, so it can handle them accordingly. A new robotic hand allows it to do so, by sensing the shape of the object along the length of its three digits.

Developed by a team of scientists at MIT, the experimental device is known as the GelSight EndoFlex. And true to its name, it incorporates the university’s GelSight technology, which had previously only been utilized in the fingertip pads of robotic hands.

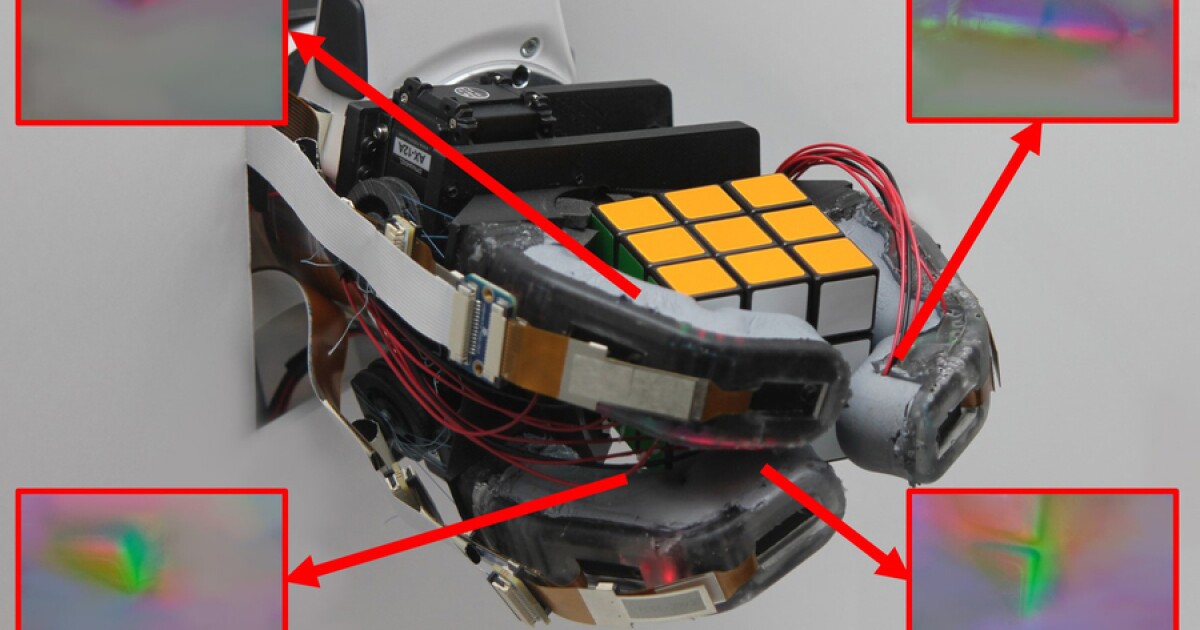

The EndoFlex’s three mechanical digits are arranged in a Y shape: there are two “fingers” at the top, with an opposable “thumb” at the bottom. Each one consists of an articulated hard polymer skeleton, encased within a soft and flexible outer layer. The GelSight sensors themselves, two per digit, are located on the underside of the top and middle sections of those digits.

Each sensor incorporates a slab of clear, synthetic rubber that’s coated on one side with a layer of metallic paint, which serves as the finger’s skin. When the paint is pressed against a surface, it deforms to the shape of that surface. Looking through the opposite, unpainted side of the rubber, a tiny integrated camera (with help from three colored LEDs) can image the minute contours of the surface pressing up into the paint.

Special algorithms on a linked computer turn those contours into 3D images which capture details less than one micrometer in depth and approximately two micrometers in width. The paint is necessary in order to standardize the optical qualities of the surface, so that the system isn't confused by multiple colors or materials.
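The article does not say which algorithms GelSight uses, but the idea of recovering shape from one camera and three colored lights can be illustrated with classic photometric stereo. The sketch below is an assumption-laden illustration, not MIT's method: it supposes that each color channel of the image is lit by exactly one LED, that the painted surface reflects light evenly in all directions (Lambertian), and that the LED directions are known. The function name `normals_from_rgb` and its parameters are hypothetical.

```python
import numpy as np

def normals_from_rgb(image, light_dirs):
    """Estimate per-pixel surface normals from one three-channel image.

    image:      (H, W, 3) float array; channel i is assumed lit only by LED i.
    light_dirs: (3, 3) array; row i is the unit vector pointing toward LED i.
    Returns an (H, W, 3) array of unit surface normals.
    """
    h, w, _ = image.shape
    # Stack every pixel's three intensities as one column: shape (3, H*W).
    intensities = image.reshape(-1, 3).T
    # Lambertian model: intensity_i = albedo * dot(light_dir_i, normal).
    # Solving the 3x3 system gives albedo * normal for every pixel.
    scaled_normals = np.linalg.solve(light_dirs, intensities)
    lengths = np.linalg.norm(scaled_normals, axis=0)
    lengths[lengths == 0] = 1.0  # leave unlit pixels as zero vectors
    return (scaled_normals / lengths).T.reshape(h, w, 3)
```

The uniform metallic paint is what makes an assumption like this plausible: with a single known surface finish, differences in brightness can be attributed to surface tilt rather than to the color or material of the object underneath.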

In the case of the EndoFlex, by combining images from six such sensors at once (two on each of the three digits), it's possible to create a three-dimensional model of the item being grasped. Machine-learning-based software is then able to identify what object that model represents, after the hand has grasped the object just one time. The system has an accuracy rate of about 85% in its present form, although that number should improve as the technology is developed further.
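How the six sensor readings are fused is not described in the article. One common way to combine several local 3D measurements, shown here purely as a sketch, is to move each sensor's points into a shared hand coordinate frame using that sensor's known pose, then stack them into a single point cloud. The function `merge_sensor_points` and the idea that each sensor yields a set of 3D points with a 4x4 pose matrix are assumptions made for illustration.

```python
import numpy as np

def merge_sensor_points(sensor_points, sensor_poses):
    """Combine per-sensor 3D points into one cloud in the hand frame.

    sensor_points: list of (N_i, 3) arrays, each in its own sensor's frame.
    sensor_poses:  list of (4, 4) homogeneous transforms mapping each
                   sensor frame to the hand frame (assumed known).
    Returns a (sum of N_i, 3) array of points in the hand frame.
    """
    merged = []
    for points, pose in zip(sensor_points, sensor_poses):
        rotation = pose[:3, :3]
        translation = pose[:3, 3]
        # Rotate each row vector, then shift it by the sensor's position.
        merged.append(points @ rotation.T + translation)
    return np.vstack(merged)
```

A classifier would then take the merged model as input; the article gives no details on that model beyond its reported accuracy of about 85%.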

“Having both soft and rigid elements is very important in any hand, but so is being able to perform great sensing over a really large area, especially if we want to consider doing very complicated manipulation tasks like what our own hands can do,” said mechanical engineering graduate student Sandra Liu, who co-led the research along with undergraduate student Leonardo Zamora Yañez and Prof. Edward Adelson.

“Our goal with this work was to combine all the things that make our human hands so good into a robotic finger that can do tasks other robotic fingers can’t currently do.”

Source: MIT