Upon looking at photographs and drawing on their past experiences, humans can often perceive depth in pictures that are, themselves, perfectly flat. However, getting computers to do the same thing has proved quite challenging.

The problem is difficult for several reasons, one being that information is inevitably lost when a scene that takes place in three dimensions is reduced to a two-dimensional (2D) representation. There are some well-established strategies for recovering 3D information from multiple 2D images, but each has limitations. A new approach called “virtual correspondence,” which was developed by researchers at MIT and other institutions, can get around some of these shortcomings and succeed in cases where conventional methodology falters.

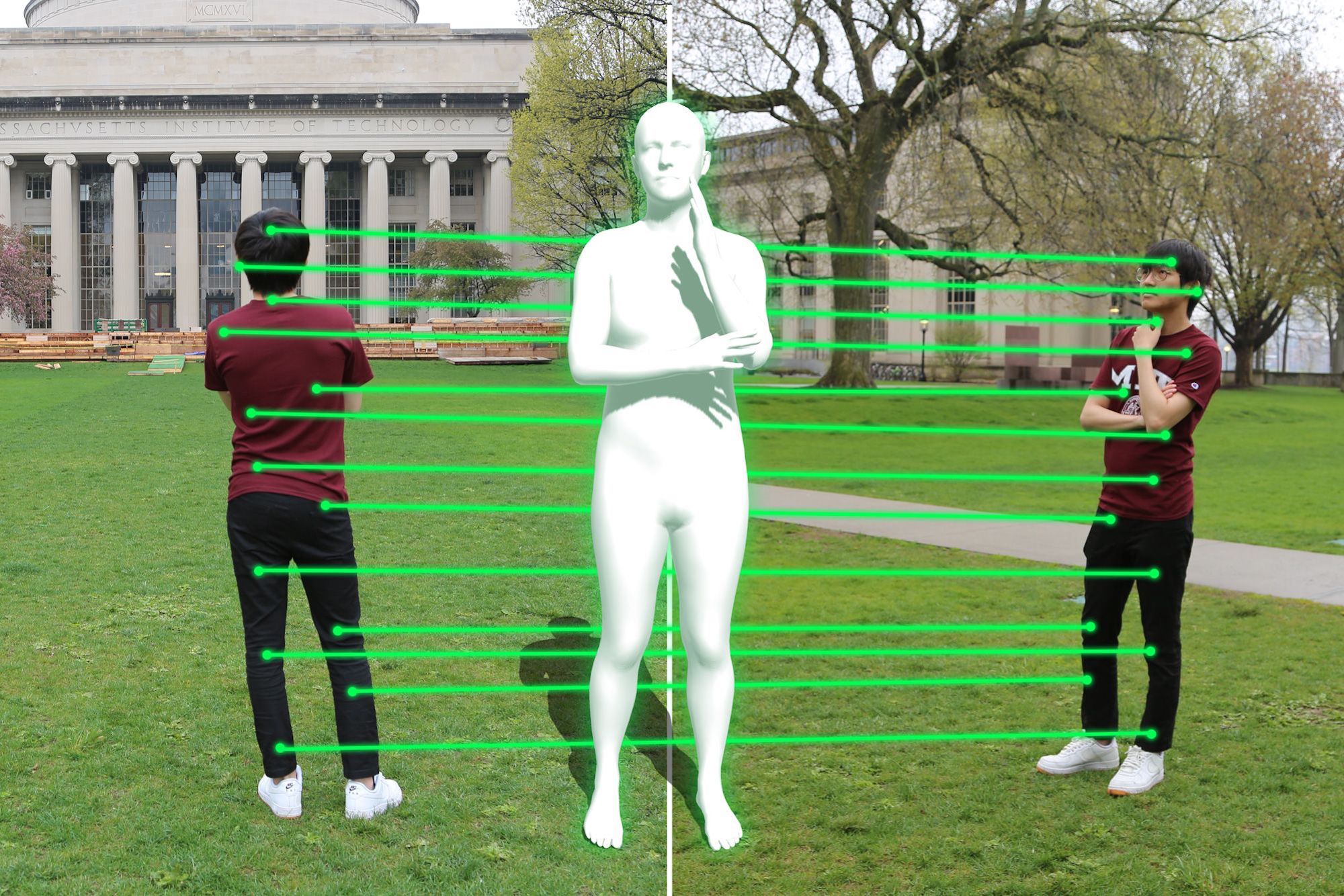

Existing methods that reconstruct 3D scenes from 2D images rely on images that contain some of the same features. Virtual correspondence is a method of 3D reconstruction that works even with images taken from extremely different views that do not show the same features.

The standard approach, called “structure from motion,” is modeled on a key aspect of human vision. Because our eyes are separated from each other, they each offer slightly different views of an object. A triangle can be formed whose sides consist of the line segment connecting the two eyes, plus the line segments connecting each eye to a common point on the object in question. Knowing the angles in the triangle and the distance between the eyes, it’s possible to determine the distance to that point using elementary geometry, although the human visual system, of course, can make rough judgments about distance without having to go through arduous trigonometric calculations. This same basic idea, triangulation or parallax views, has been exploited by astronomers for centuries to calculate the distance to faraway stars.
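As a rough illustration of the geometry described above, the following Python sketch computes how far a point lies from the line joining two viewpoints, given the separation between them and the angle each line of sight makes with that baseline. The function name and parameters are hypothetical, chosen only for this example, and the calculation is the textbook law of sines rather than anything specific to the research described here.

```python
import math

def triangulate_distance(baseline, angle_left, angle_right):
    """Perpendicular distance from the baseline to the observed point.

    baseline    -- distance between the two viewpoints (any length unit)
    angle_left  -- angle in radians between the baseline and the left sight line
    angle_right -- angle in radians between the baseline and the right sight line
    """
    # The three interior angles of a triangle sum to pi.
    apex = math.pi - angle_left - angle_right
    if apex <= 0:
        raise ValueError("sight lines do not converge in front of the viewer")
    # Law of sines gives the side lengths; projecting one side onto the
    # perpendicular of the baseline gives the height of the triangle.
    return baseline * math.sin(angle_left) * math.sin(angle_right) / math.sin(apex)
```

With a baseline of 2 units and both sight lines at 45 degrees, the triangle is a right isosceles one and the function returns a distance of 1 unit (up to floating-point rounding).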

Triangulation is a key element of structure from motion. Suppose you have two pictures of an object (a sculpted figure of a rabbit, for instance), one taken from the left side of the figure and the other from the right. The first step would be to find points or pixels on the rabbit’s surface that both images share. A researcher could go from there to determine the “poses” of the two cameras: the positions where the photos were taken from and the direction each camera was facing. Knowing the distance between the cameras and the way they were oriented, one could then triangulate to work out the distance to a selected point on the rabbit. And if enough common points are identified, it might be possible to obtain a detailed sense of the object’s (or “rabbit’s”) overall shape.
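The final triangulation step can be sketched in a few lines of Python, assuming the camera poses have already been recovered. Each camera contributes a ray: its position plus the direction toward the shared point. Because real measurements are noisy, the two rays rarely meet exactly, so this sketch uses the common midpoint method and returns the point halfway between the rays where they pass closest to each other. The function name and the tuple-based vectors are assumptions made for illustration; production systems typically use more elaborate solvers.

```python
def triangulate_midpoint(c1, d1, c2, d2):
    """Estimate a 3D point from two camera rays (midpoint method).

    c1, c2 -- camera centers as (x, y, z) tuples
    d1, d2 -- ray directions from each camera toward the matched point
    """
    def dot(u, v):
        return sum(a * b for a, b in zip(u, v))

    w = tuple(a - b for a, b in zip(c1, c2))
    a, b, c = dot(d1, d1), dot(d1, d2), dot(d2, d2)
    d, e = dot(d1, w), dot(d2, w)
    denom = a * c - b * b
    if abs(denom) < 1e-12:
        raise ValueError("rays are parallel; depth cannot be triangulated")
    # Parameters of the closest points along each ray.
    s = (b * e - c * d) / denom
    t = (a * e - b * d) / denom
    p1 = tuple(o + s * k for o, k in zip(c1, d1))
    p2 = tuple(o + t * k for o, k in zip(c2, d2))
    # Midpoint of the shortest segment joining the two rays.
    return tuple((u + v) / 2 for u, v in zip(p1, p2))
```

For two cameras at (-1, 0, 0) and (1, 0, 0) whose rays both point at (0, 0, 5), the rays intersect exactly and the function recovers that point.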

Considerable progress has been made with this technique, comments Wei-Chiu Ma, a PhD student in MIT’s Department of Electrical Engineering and Computer Science (EECS), “and people are now matching pixels with greater and greater accuracy. So long as we can observe the same point, or points, across different images, we can use existing algorithms to determine the relative positions between cameras.” But the approach only works if the two images have a large overlap. If the input images have very different viewpoints, and hence contain few, if any, points in common, he adds, “the system may fail.”

During summer 2020, Ma came up with a novel way of doing things that could greatly expand the reach of structure from motion. MIT was closed at the time due to the pandemic, and Ma was home in Taiwan, relaxing on the couch. While looking at the palm of his hand and his fingertips in particular, it occurred to him that he could clearly picture his fingernails, even though they were not visible to him.

That was the inspiration for the notion of virtual correspondence, which Ma has subsequently pursued with his advisor, Antonio Torralba, an EECS professor and investigator at the Computer Science and Artificial Intelligence Laboratory, along with Anqi Joyce Yang and Raquel Urtasun of the University of Toronto and Shenlong Wang of the University of Illinois. “We want to incorporate human knowledge and reasoning into our existing 3D algorithms,” Ma says, the same reasoning that enabled him to look at his fingertips and conjure up fingernails on the other side, the side he could not see.

Structure from motion works when two images have points in common, because that means a triangle can always be drawn connecting the cameras to the common point, and depth information can thereby be gleaned from that. Virtual correspondence offers a way to carry things further. Suppose, once again, that one photo is taken from the left side of a rabbit and another photo is taken from the right side. The first photo might reveal a spot on the rabbit’s left leg. But since light travels in a straight line, one could use general knowledge of the rabbit’s anatomy to know where a light ray going from the camera to the leg would emerge on the rabbit’s other side. That point may be visible in the other image (taken from the right-hand side) and, if so, it could be used via triangulation to compute distances in the third dimension.

Virtual correspondence, in other words, allows one to take a point from the first image on the rabbit’s left flank and connect it with a point on the rabbit’s unseen right flank. “The advantage here is that you don’t need overlapping images to proceed,” Ma notes. “By looking through the object and coming out the other end, this technique provides points in common to work with that weren’t initially available.” And in that way, the constraints imposed on the conventional method can be circumvented.
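The idea of following a ray through an object can be illustrated with a deliberately simplified toy model. The Python sketch below is not the researchers’ method: it assumes the object is a perfect sphere with a known center and radius, a stand-in for the learned shape knowledge the article describes, and every function name is hypothetical. It finds where a camera ray enters the sphere (the point the first camera sees) and where it exits (the hidden point), then checks which of those points faces a second camera.

```python
import math

def ray_sphere_hits(origin, direction, center, radius):
    """Return (entry, exit) points where a ray crosses a sphere, or None."""
    norm = math.sqrt(sum(x * x for x in direction))
    d = [x / norm for x in direction]              # unit ray direction
    oc = [o - c for o, c in zip(origin, center)]
    b = sum(x * y for x, y in zip(oc, d))
    c = sum(x * x for x in oc) - radius ** 2
    disc = b * b - c
    if disc < 0:
        return None                                # ray misses the sphere
    root = math.sqrt(disc)
    t_in, t_out = -b - root, -b + root
    if t_in < 0:
        return None                                # sphere behind or around the camera
    entry = tuple(o + t_in * x for o, x in zip(origin, d))
    exit_point = tuple(o + t_out * x for o, x in zip(origin, d))
    return entry, exit_point

def faces_camera(point, center, camera):
    """True if the sphere's outward normal at `point` points toward `camera`."""
    normal = [p - c for p, c in zip(point, center)]
    to_camera = [k - p for k, p in zip(camera, point)]
    return sum(n * t for n, t in zip(normal, to_camera)) > 0
```

With a unit sphere at the origin, a left camera at (-5, 0, 0) looking along the x-axis, and a right camera at (5, 0, 0), the ray enters at (-1, 0, 0) and exits at (1, 0, 0). Only the exit point faces the right camera, so in this toy setup it is the point that could serve as a shared correspondence between two otherwise non-overlapping views.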

One might ask how much prior knowledge is needed for this to work, because if you had to know the shape of everything in the image from the outset, no calculations would be required. The trick that Ma and his colleagues employ is to use certain familiar objects in an image, such as the human form, to serve as a kind of “anchor,” and they’ve devised methods for using our knowledge of the human shape to help pin down the camera poses and, in some cases, infer depth within the image. In addition, Ma explains, “the prior knowledge and common sense that is built into our algorithms is first captured and encoded by neural networks.”

The team’s ultimate goal is far more ambitious, Ma says. “We want to make computers that can understand the three-dimensional world just like humans do.” That objective is still far from realization, he acknowledges. “But to go beyond where we are today, and build a system that acts like humans, we need a more challenging setting. In other words, we need to develop computers that can not only interpret still images but can also understand short video clips and eventually full-length movies.”

A scene in the film “Good Will Hunting” demonstrates what he has in mind. The audience sees Matt Damon and Robin Williams from behind, sitting on a bench that overlooks a pond in Boston’s Public Garden. The next shot, taken from the opposite side, offers frontal (though fully clothed) views of Damon and Williams with an entirely different background. Everyone watching the movie immediately knows they’re watching the same two people, even though the two shots have nothing in common. Computers can’t make that conceptual leap yet, but Ma and his colleagues are working hard to make these machines more adept and, at least when it comes to vision, more like us.

The team’s work will be presented next week at the Conference on Computer Vision and Pattern Recognition.