Stability.AI, the company that developed Stable Diffusion, launched a new version of the AI model in late November. A spokesperson says that the original model was released with a safety filter, which Lensa does not appear to have used, as it would remove these outputs. One way Stable Diffusion 2.0 filters content is by removing images that are repeated often. The more often something is repeated, such as Asian women in sexually graphic scenes, the stronger the association becomes in the AI model.

Caliskan has studied CLIP (Contrastive Language-Image Pretraining), a system that helps Stable Diffusion generate images. CLIP learns to match images in a data set to descriptive text prompts. Caliskan found that it was full of problematic gender and racial biases.

“Women are associated with sexual content, whereas men are associated with professional, career-related content in any important domain such as medicine, science, business, and so on,” Caliskan says.

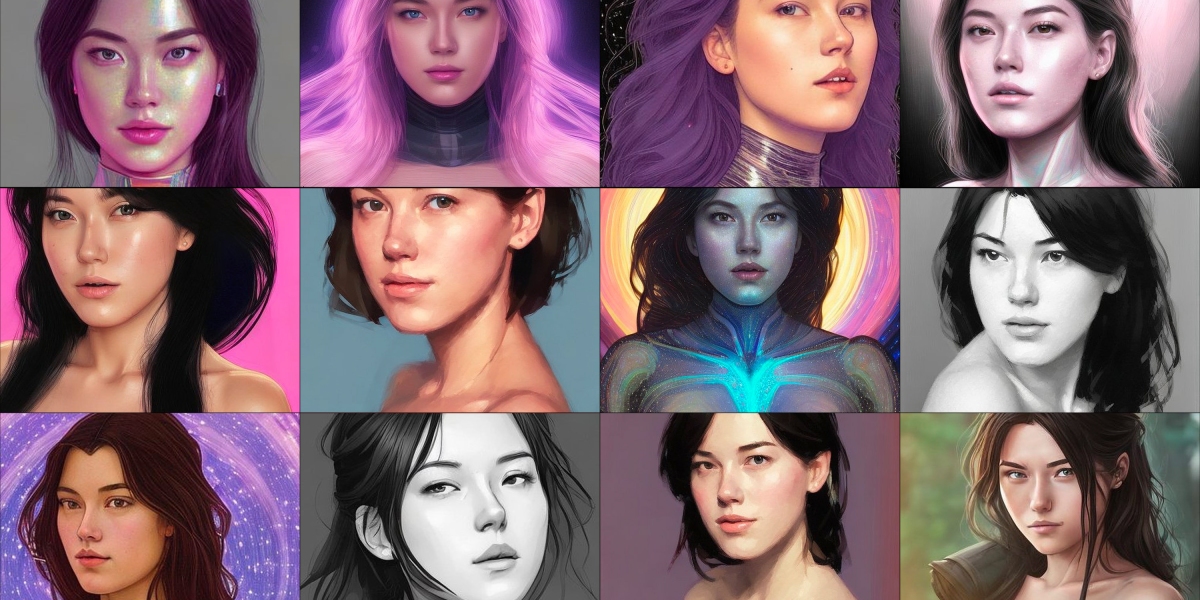

Funnily enough, my Lensa avatars were more realistic when my pictures went through male content filters. I got avatars of myself wearing clothes (!) and in neutral poses. In several images, I was wearing a white coat that appeared to belong to either a chef or a doctor.

But it’s not just the training data that is to blame. The companies developing these models and apps make active choices about how they use the data, says Ryan Steed, a PhD student at Carnegie Mellon University who has studied biases in image-generation algorithms.

“Someone has to choose the training data, decide to build the model, decide to take certain steps to mitigate those biases or not,” he says.

The app’s developers have made a choice that male avatars get to appear in space suits, while female avatars get cosmic G-strings and fairy wings.

A spokesperson for Prisma Labs says that “sporadic sexualization” of photos happens to people of all genders, but in different ways.