This story originally appeared in The Algorithm, our weekly newsletter on AI. To get stories like this in your inbox first, sign up here.

My social media feeds this week have been dominated by two hot topics: OpenAI’s latest chatbot, ChatGPT, and the viral AI avatar app Lensa. I love playing around with new technology, so I gave Lensa a go.

I was hoping to get results similar to my colleagues at MIT Technology Review. The app generated realistic and flattering avatars for them: think astronauts, warriors, and electronic music album covers.

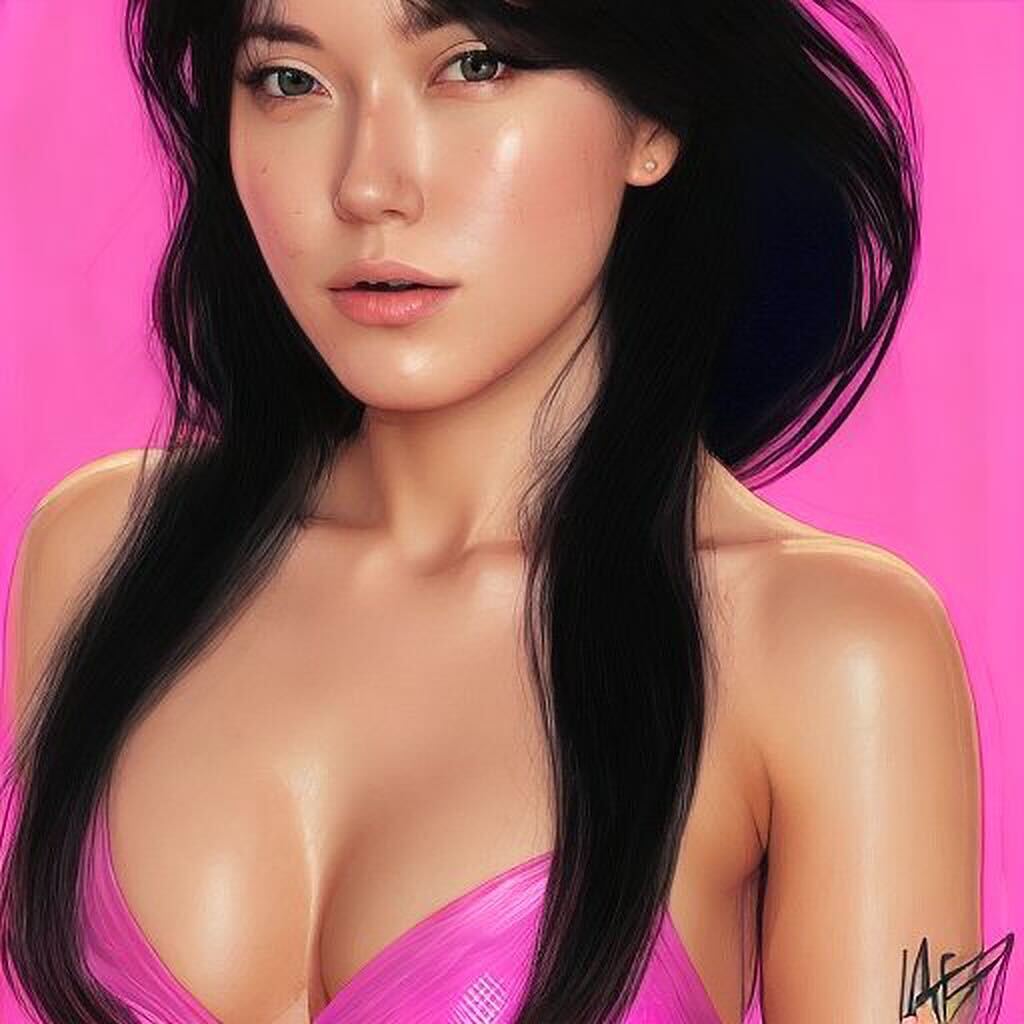

Instead, I got tons of nudes. Of the 100 avatars I generated, 16 were topless, and another 14 had put me in extremely skimpy clothes and overtly sexualized poses. You can read my story here.

Lensa creates its avatars using Stable Diffusion, an open-source AI model that generates images based on text prompts. Stable Diffusion is trained on LAION-5B, a massive open-source data set that was compiled by scraping images from the internet.

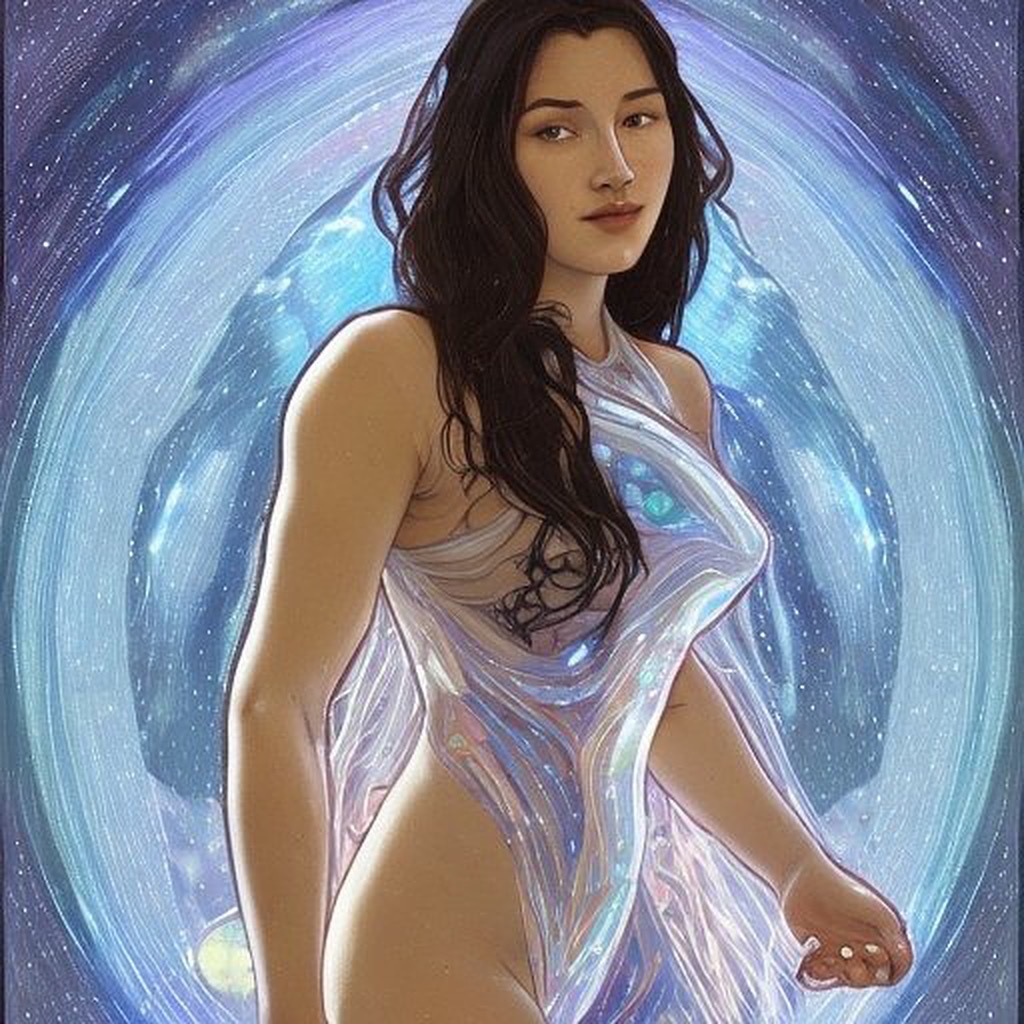

And because the internet is overflowing with images of naked or barely dressed women, and with pictures reflecting sexist and racist stereotypes, the data set is also skewed toward these kinds of images.

As an Asian woman, I thought I’d seen it all. I’ve felt icky after realizing a former date only dated Asian women. I’ve been in fights with men who think Asian women make great housewives. I’ve heard crude comments about my genitals. I’ve been mixed up with the other Asian person in the room.

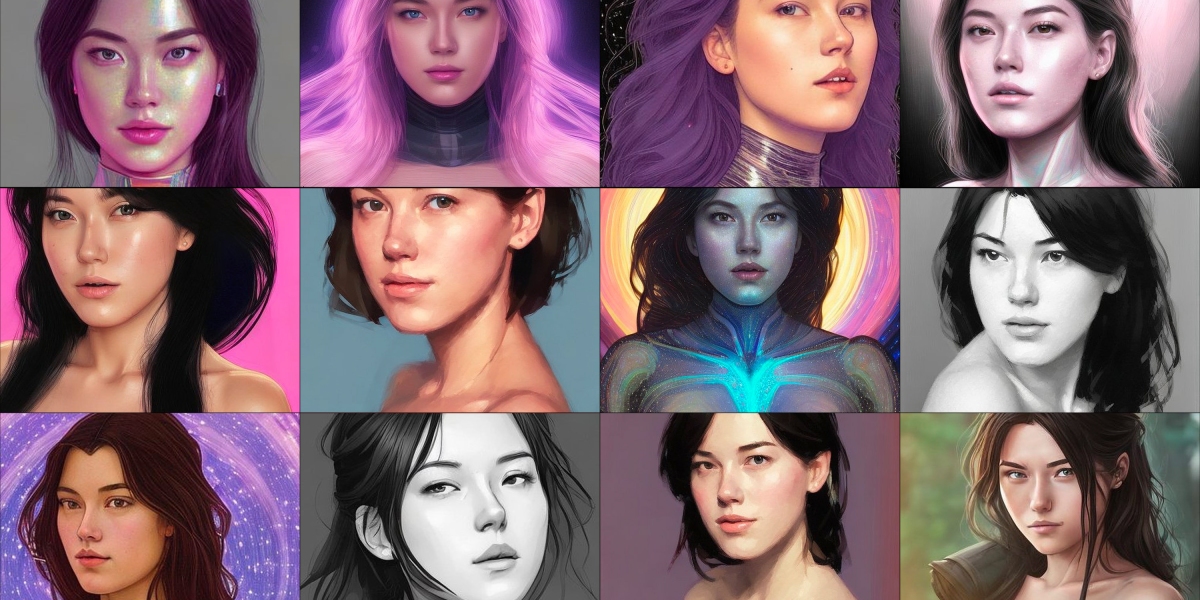

Being sexualized by an AI was not something I expected, although it isn’t surprising. Frankly, it was crushingly disappointing. My colleagues and friends got the privilege of being stylized into artful representations of themselves. They were recognizable in their avatars! I was not. I got images of generic Asian women clearly modeled on anime characters or video games.

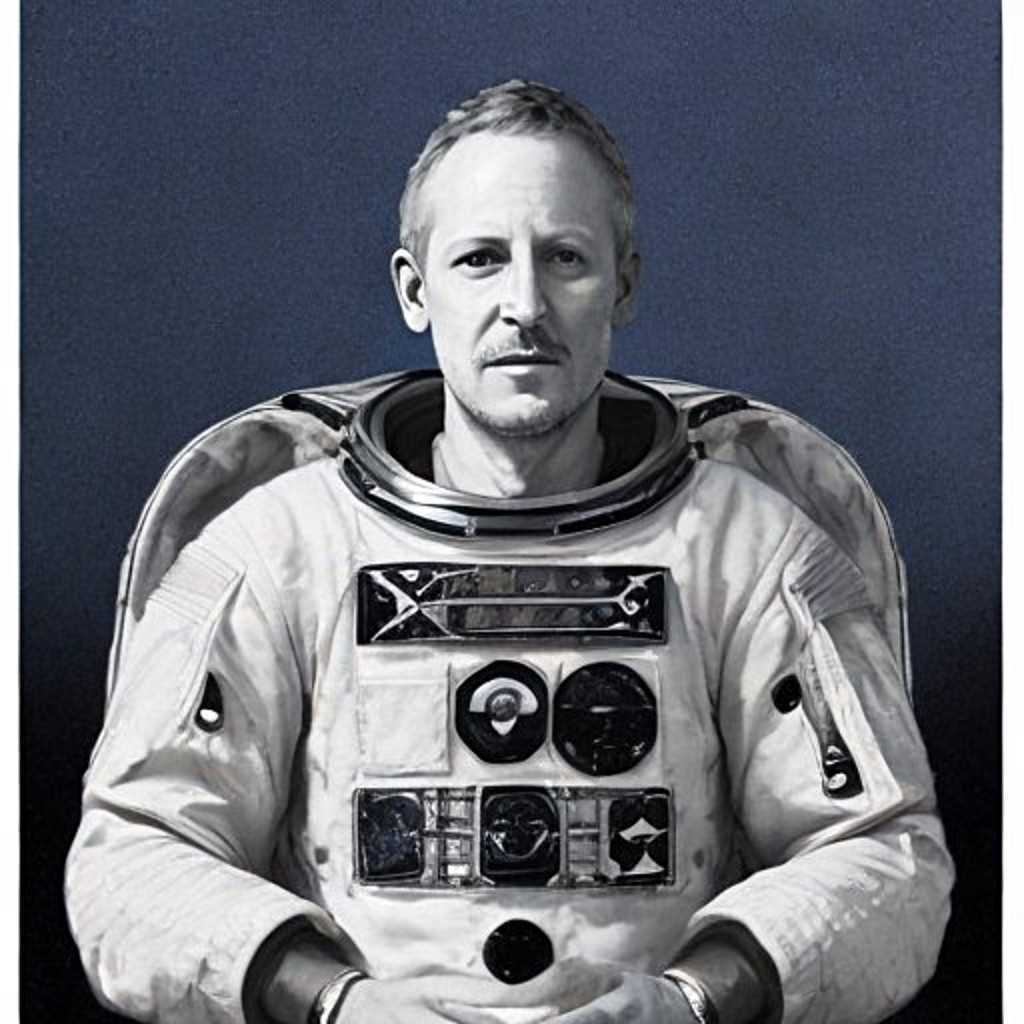

Funnily enough, I found more realistic portrayals of myself when I told the app I was male. This probably applied a different set of prompts to the images. The differences are stark. In the images generated using male filters, I have clothes on, I look assertive, and, most important, I can recognize myself in the pictures.

“Women are associated with sexual content, whereas men are associated with professional, career-related content in any important domain such as medicine, science, business, and so on,” says Aylin Caliskan, an assistant professor at the University of Washington who studies biases and representation in AI systems.

This sort of stereotyping can be easily spotted with a new tool built by Sasha Luccioni, a researcher at the AI startup Hugging Face, that lets anyone explore the different biases in Stable Diffusion.

The tool shows how the AI model offers pictures of white men as doctors, architects, and designers, while women are depicted as hairdressers and maids.

But it’s not just the training data that is to blame. The companies developing these models and apps make active choices about how they use the data, says Ryan Steed, a PhD student at Carnegie Mellon University who has studied biases in image-generation algorithms.

“Someone has to choose the training data, decide to build the model, decide to take certain steps to mitigate those biases or not,” he says.

Prisma Labs, the company behind Lensa, says all genders face “sporadic sexualization.” But to me, that’s not good enough. Somebody made the conscious decision to apply certain color schemes and scenarios and to highlight certain body parts.

In the short term, some obvious harms could result from these decisions, such as easy access to deepfake generators that create nonconsensual nude images of women or children.

But Aylin Caliskan sees even bigger longer-term problems ahead. As AI-generated images with their embedded biases flood the internet, they will eventually become training data for future AI models. “Are we going to create a future where we keep amplifying these biases and marginalizing populations?” she says.

That’s a truly scary thought, and I for one hope we give these issues due time and consideration before the problem gets even bigger and more embedded.

Deeper Learning

How US police use counterterrorism money to buy spy tech

Grant money meant to help cities prepare for terror attacks is being spent on “massive purchases of surveillance technology” for US police departments, a new report by the advocacy organizations Action Center on Race and Economy (ACRE), LittleSis, MediaJustice, and the Immigrant Defense Project shows.

Shopping for AI-powered spy tech: For example, the Los Angeles Police Department used funding intended for counterterrorism to buy automated license plate readers worth at least $1.27 million, radio equipment worth upwards of $24 million, Palantir data fusion platforms (often used for AI-powered predictive policing), and social media surveillance software.

Why this matters: For various reasons, a lot of problematic tech ends up in high-stakes sectors such as policing with little to no oversight. For instance, the facial recognition company Clearview AI offers “free trials” of its tech to police departments, which lets them use it without a purchasing agreement or budget approval. Federal grants for counterterrorism don’t require as much public transparency and oversight. The report’s findings are yet another example of a growing pattern in which citizens are increasingly kept in the dark about police tech procurement. Read more from Tate Ryan-Mosley here.

Bits and Bytes

ChatGPT, Galactica, and the progress trap

AI researchers Abeba Birhane and Deborah Raji write that the “lackadaisical approaches to model release” (as seen with Meta’s Galactica) and the extremely defensive response to critical feedback constitute a “deeply concerning” trend in AI right now. They argue that when models don’t “meet the expectations of those most likely to be harmed by them,” then “their products are not ready to serve these communities and do not deserve widespread release.” (Wired)

The new chatbots could change the world. Can you trust them?

People have been blown away by how coherent ChatGPT is. The trouble is, a significant amount of what it spews is nonsense. Large language models are no more than confident bullshitters, and we’d be wise to approach them with that in mind. (The New York Times)

Stumbling with their words, some people let AI do the talking

Despite the tech’s flaws, some people, such as those with learning difficulties, are still finding large language models useful as a way to help express themselves. (The Washington Post)

EU countries’ stance on AI rules draws criticism from lawmakers and activists

The EU’s AI law, the AI Act, is edging closer to being finalized. EU countries have approved their position on what the regulation should look like, but critics say many important issues, such as the use of facial recognition by companies in public places, were not addressed, and many safeguards were watered down. (Reuters)

Investors seek to profit from generative-AI startups

It’s not just you. Venture capitalists also think generative-AI startups such as Stability.AI, which created the popular text-to-image model Stable Diffusion, are the hottest things in tech right now. And they’re throwing stacks of money at them. (The Financial Times)