Researchers in South Korea have developed an ultra-small, ultra-thin LiDAR device that splits a single laser beam into 10,000 points covering an unprecedented 180-degree field of view. It can 3D depth-map an entire hemisphere of vision in a single shot.

Autonomous cars and robots need to be able to perceive the world around them extremely accurately if they're going to be safe and useful in real-world conditions. In humans and other autonomous biological entities, this requires a range of different senses and some pretty extraordinary real-time data processing, and the same will likely be true for our technological offspring.

LiDAR (short for Light Detection and Ranging) has been around since the 1960s, and it's now a well-established rangefinding technology that's particularly useful for building 3D point-cloud representations of a given space. It works a bit like sonar, but instead of sound pulses, LiDAR devices send out short pulses of laser light and then measure the light that's reflected or backscattered when those pulses hit an object.

The time between the outgoing light pulse and the returned pulse, multiplied by the speed of light and divided by two, gives the distance between the LiDAR unit and a given point in space. If you measure a bunch of points repeatedly over time, you get a 3D model of that space, with information about distance, shape and relative speed. This can be used alongside data streams from multi-point cameras, ultrasonic sensors and other systems to flesh out an autonomous system's understanding of its environment.
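The range calculation just described is simple enough to sketch directly. The Python below is a minimal illustration of the time-of-flight formula, not code from any real LiDAR unit; the function names are hypothetical. It also shows the inverse, which makes clear how fine the timing has to be: light covers a metre and back in under 7 nanoseconds.

```python
SPEED_OF_LIGHT = 299_792_458.0  # metres per second (exact by definition)


def tof_distance(round_trip_s: float) -> float:
    """One-way distance from a pulse's measured round-trip time.
    The pulse travels out and back, so the path is halved."""
    return SPEED_OF_LIGHT * round_trip_s / 2.0


def round_trip_time(distance_m: float) -> float:
    """Inverse: how long a pulse takes to reach a target and return."""
    return 2.0 * distance_m / SPEED_OF_LIGHT


# A pulse that comes back after about 6.67 ns hit something roughly 1 m away
print(f"{tof_distance(6.671e-9):.3f} m")  # prints 1.000 m
```

Repeating this for many beam directions and stacking the results is what produces the point cloud.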

According to researchers at the Pohang University of Science and Technology (POSTECH) in South Korea, one of the key problems with existing LiDAR technology is its field of view. If you want to image a wide area from a single point, the only way to do it is to mechanically rotate your LiDAR device, or rotate a mirror to direct the beam. This kind of gear can be bulky, power-hungry and fragile. It tends to wear out fairly quickly, and the speed of rotation limits how often you can measure each point, reducing the frame rate of your 3D data.
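To see why rotation speed caps the frame rate, here is a rough sketch of a hypothetical single-beam spinning LiDAR with a fixed pulse rate. Each direction is revisited only once per revolution, so the frame rate equals the spin rate, and the fixed pulse budget gets spread around 360 degrees. The 20,000 pulses per second and the rpm values are arbitrary assumptions, and real units often use several beams at once.

```python
def rotating_scan(pulse_rate_hz: float, rev_per_s: float) -> tuple[float, float]:
    """Return (frame_rate_hz, horizontal_step_deg) for a hypothetical
    single-beam spinning LiDAR firing pulse_rate_hz pulses per second."""
    points_per_rev = pulse_rate_hz / rev_per_s  # pulses spent on one full turn
    frame_rate = rev_per_s                      # each direction seen once per turn
    step_deg = 360.0 / points_per_rev           # angular gap between neighbouring points
    return frame_rate, step_deg


for rpm in (300, 600, 1200):
    fps, step = rotating_scan(pulse_rate_hz=20_000, rev_per_s=rpm / 60)
    print(f"{rpm:>5} rpm -> {fps:5.1f} frames/s, {step:.3f} deg between points")
```

Spinning faster raises the frame rate but, for the same pulse budget, leaves wider gaps between points, and the mechanism itself sets an upper limit on how fast it can spin.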

Solid-state LiDAR systems, on the other hand, use no physical moving parts. According to the researchers, some of them (like the depth sensors Apple uses to make sure you can't fool an iPhone's Face ID unlock system by holding up a flat photo of the owner's face) project an array of dots all at once, then look for distortion in the dots and their patterns to work out shape and distance information. But the field of view and resolution are limited, and the team says they're still relatively large devices.
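Dot-projection sensors of this kind generally rely on triangulation: a camera sitting a small baseline away from the projector sees each dot shifted sideways by an amount that depends on how far away the surface is. The sketch below uses the standard relation depth = focal length × baseline / disparity. It illustrates the general principle only; the article doesn't describe Apple's or POSTECH's actual processing, and the focal length and baseline values are hypothetical.

```python
def depth_from_disparity(focal_px: float, baseline_m: float, disparity_px: float) -> float:
    """Triangulated depth of one projected dot. Nearer surfaces shift the dot
    further in the camera image, so depth is inversely proportional to the
    shift (disparity), measured in pixels."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive (zero means the dot is at infinity)")
    return focal_px * baseline_m / disparity_px


# Hypothetical camera: 600 px focal length, 2 cm projector-camera baseline
for d in (24.0, 12.0, 6.0):
    print(f"disparity {d:4.1f} px -> depth {depth_from_disparity(600, 0.02, d):.2f} m")
```

Because depth goes as one over disparity, a one-pixel error matters far more for distant dots than for nearby ones, which is one reason these sensors work best at short range.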

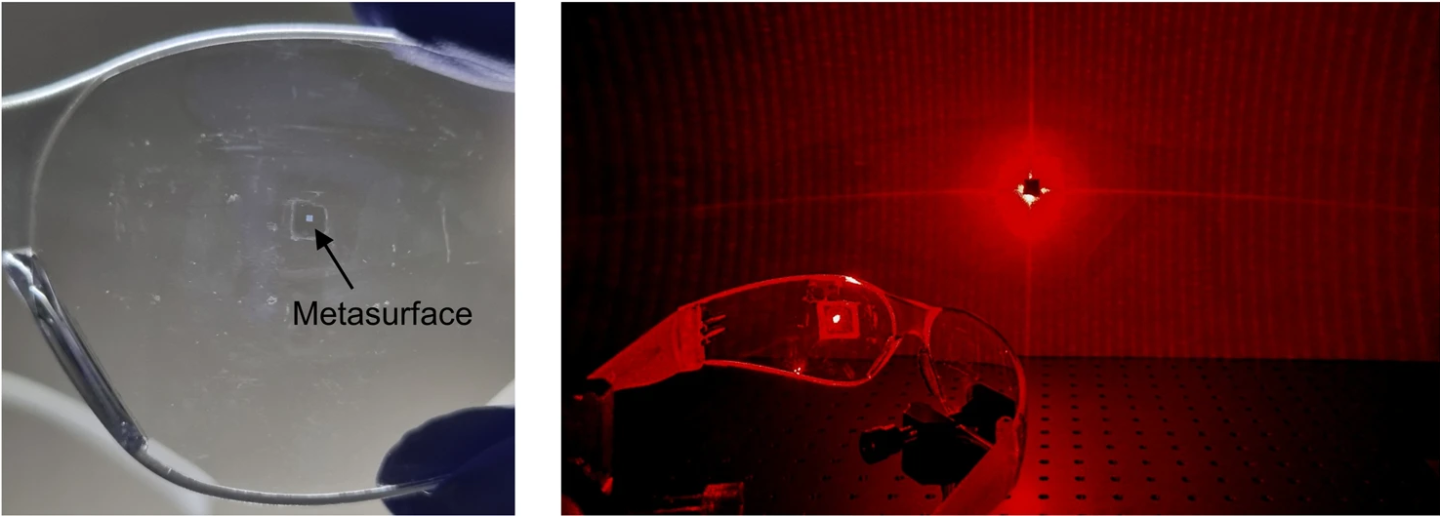

The Pohang team decided to aim for the tiniest possible depth-sensing system with the widest possible field of view, using the extraordinary light-bending abilities of metasurfaces. These 2D nanostructures, one thousandth the width of a human hair, can effectively be thought of as ultra-flat lenses, built from arrays of tiny, precisely shaped individual nanopillar elements. Incoming light is split into multiple directions as it passes through a metasurface, and with the right nanopillar array design, portions of that light can be diffracted to an angle of nearly 90 degrees. A completely flat ultra-fisheye, if you like.
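The basic geometry behind bending light to steep angles is captured by the grating equation: for a periodic structure with period Λ lit head-on, the m-th diffraction order leaves at sin θ = mλ/Λ. The sketch below applies that textbook relation to a simple 1D grating. It is not a model of the POSTECH nanopillar design, and the 940 nm wavelength and the periods are assumed values chosen for illustration.

```python
import math


def diffraction_angles(wavelength_nm: float, period_nm: float) -> dict[int, float]:
    """Angles (degrees) of the propagating orders of a 1-D grating at normal
    incidence, from sin(theta_m) = m * wavelength / period. Orders with
    |m * wavelength / period| > 1 don't propagate and are left out."""
    m_max = int(period_nm // wavelength_nm)
    return {m: math.degrees(math.asin(m * wavelength_nm / period_nm))
            for m in range(-m_max, m_max + 1)}


# Assumed 940 nm near-infrared laser: as the period shrinks toward the
# wavelength, the first order swings out toward 90 degrees
for period in (5000, 1500, 1000, 950):
    print(f"{period} nm period -> first order at {diffraction_angles(940, period)[1]:.1f} deg")
```

Getting light out near 90 degrees therefore means structuring the surface on a scale comparable to the wavelength itself, which is why nanoscale features are needed.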

POSTECH

The researchers designed and built a device that shoots laser light through a metasurface lens with nanopillars tuned to split it into around 10,000 dots, covering an extreme 180-degree field of view. The device then reads the reflected or backscattered light with a camera to provide distance measurements.
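One way to get a feel for how a single beam becomes thousands of dots spanning a hemisphere is to extend the grating idea to two dimensions. In an idealized square 2D grating at normal incidence, order (m, n) leaves with direction cosines (mλ/Λ, nλ/Λ), and it propagates only if those describe a real direction. The sketch below counts those orders. The period of about 56.5 wavelengths is a hypothetical value picked so the count lands near 10,000; the real metasurface's design, dot layout and brightness distribution are not described in the article.

```python
import math


def propagating_orders(period_over_wavelength: float) -> list[tuple[float, float, float]]:
    """Unit direction vectors of every propagating order (m, n) of an ideal
    square 2-D grating at normal incidence. With r = period / wavelength, the
    order's direction cosines are (m / r, n / r); it propagates only if their
    squared sum is at most 1, leaving a real z component."""
    r = period_over_wavelength
    k = int(r)
    dirs = []
    for m in range(-k, k + 1):
        for n in range(-k, k + 1):
            sx, sy = m / r, n / r
            rest = 1.0 - sx * sx - sy * sy
            if rest >= 0.0:
                dirs.append((sx, sy, math.sqrt(rest)))
    return dirs


dots = propagating_orders(56.5)  # hypothetical period of ~56.5 wavelengths
widest = max(math.degrees(math.acos(d[2])) for d in dots)
print(len(dots), "dots; widest is", round(widest, 1), "deg off axis")
```

The count grows roughly as π(Λ/λ)², and the outermost orders approach, but never quite reach, 90 degrees from the axis, giving close to a full 180-degree spread.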

"We have proved that we can control the propagation of light in all angles by developing a technology more advanced than conventional metasurface devices," said Professor Junsuk Rho, co-author of a new study published in Nature Communications. "This will be an original technology that will enable an ultra-small and full-space 3D imaging sensor platform."

The light intensity does drop off as diffraction angles become more extreme; a dot bent to a 10-degree angle reached its target at four to seven times the power of one bent out closer to 90 degrees. With the equipment in their lab setup, the researchers found they got the best results within a maximum viewing angle of 60° (representing a 120° field of view) and at distances of less than 1 m (3.3 ft) between the sensor and the object. They say higher-powered lasers and more precisely tuned metasurfaces will widen the sweet spot of these sensors, but high resolution at greater distances will always be a challenge with ultra-wide lenses like these.
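Some back-of-the-envelope arithmetic shows why range and resolution are hard at wide angles. The sketch assumes that returned signal from a diffuse surface falls off as 1/R², so a dot with 4 to 7 times more power can be read at roughly √4 to √7 (about 2 to 2.6) times the distance. It also assumes, purely for illustration, that 10,000 dots are spread evenly as about 100 per axis across 180°, giving a gap of roughly 1.8° between neighbours. Neither assumption comes from the paper.

```python
import math


def equal_signal_range_ratio(power_ratio: float) -> float:
    """Assuming returned signal falls as 1/R**2, a dot carrying power_ratio
    times more light gives the same received signal at sqrt(power_ratio)
    times the distance."""
    return math.sqrt(power_ratio)


def dot_spacing_m(range_m: float, dots_per_axis: int = 100, fov_deg: float = 180.0) -> float:
    """Approximate gap between neighbouring dots on a surface range_m away,
    assuming the dots are spread evenly in angle across the field of view."""
    step_rad = math.radians(fov_deg / dots_per_axis)
    return 2 * range_m * math.tan(step_rad / 2)


print([round(equal_signal_range_ratio(p), 2) for p in (4, 7)])
print([round(dot_spacing_m(r) * 100, 1) for r in (1, 5, 10)], "cm between dots")
```

Under these assumptions the dots sit about 3 cm apart at 1 m but about 30 cm apart at 10 m, because the gap grows in proportion to distance. Spreading a fixed number of dots over a wider field only makes that worse.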


Another potential limitation here is image processing. The "coherent point drift" algorithm used to decode the sensor data into a 3D point cloud is highly complex, and processing time rises with the point count. So high-resolution full-frame captures decoding 10,000 points or more will place a heavy load on processors, and getting such a system running at upwards of 30 frames per second will be a big challenge.
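The article doesn't say which variant of coherent point drift (CPD) the team used or exactly how it's applied to their dot images. As a rough illustration of why the cost climbs with point count, here is a minimal sketch of the rigid form of CPD (Myronenko and Song's Gaussian-mixture point-set registration) in two dimensions, written in plain Python. The function names, the outlier weight `w` and the demo data are hypothetical. Each iteration builds an M×N matrix of soft correspondences between every source point and every target point, so doubling both point sets roughly quadruples the work per iteration.

```python
import math
import random


def rot(theta, p):
    """Rotate the 2-D point p by theta radians about the origin."""
    c, s = math.cos(theta), math.sin(theta)
    return (c * p[0] - s * p[1], s * p[0] + c * p[1])


def cpd_rigid_2d(X, Y, w=0.0, max_iter=300, tol=1e-12):
    """Rigid CPD in 2-D: find scale, rotation angle and translation so that
    scale * R(theta) y + t lines source points Y up with target points X.
    w is the assumed outlier weight (0 means no outliers)."""
    N, M, D = len(X), len(Y), 2
    scale, theta, t = 1.0, 0.0, (0.0, 0.0)
    # Initial Gaussian variance: mean squared distance over all N*M pairs
    sigma2 = sum((x[0] - y[0]) ** 2 + (x[1] - y[1]) ** 2
                 for x in X for y in Y) / (D * N * M)
    for _ in range(max_iter):
        TY = [(scale * q[0] + t[0], scale * q[1] + t[1])
              for q in (rot(theta, y) for y in Y)]
        # E-step: soft correspondence P[m][n] for every source/target pair.
        # This M x N matrix is what makes each iteration cost O(M * N).
        outlier = (2 * math.pi * sigma2) ** (D / 2) * w / (1 - w) * M / N
        P = [[0.0] * N for _ in range(M)]
        for n, x in enumerate(X):
            g = [math.exp(-((x[0] - q[0]) ** 2 + (x[1] - q[1]) ** 2) / (2 * sigma2))
                 for q in TY]
            denom = sum(g) + outlier
            if denom > 0:
                for m in range(M):
                    P[m][n] = g[m] / denom
        # M-step: weighted centroids and cross-covariance A = Xc^T P^T Yc
        P1 = [sum(row) for row in P]
        Pt1 = [sum(P[m][n] for m in range(M)) for n in range(N)]
        Np = sum(P1)
        mux = [sum(Pt1[n] * X[n][i] for n in range(N)) / Np for i in range(D)]
        muy = [sum(P1[m] * Y[m][i] for m in range(M)) / Np for i in range(D)]
        Xc = [(x[0] - mux[0], x[1] - mux[1]) for x in X]
        Yc = [(y[0] - muy[0], y[1] - muy[1]) for y in Y]
        A = [[sum(P[m][n] * Xc[n][i] * Yc[m][j] for m in range(M) for n in range(N))
              for j in range(D)] for i in range(D)]
        # In 2-D the rotation maximising trace(A^T R) has a closed form (no SVD)
        theta = math.atan2(A[1][0] - A[0][1], A[0][0] + A[1][1])
        trAR = math.cos(theta) * (A[0][0] + A[1][1]) + math.sin(theta) * (A[1][0] - A[0][1])
        yy = sum(P1[m] * (Yc[m][0] ** 2 + Yc[m][1] ** 2) for m in range(M))
        xx = sum(Pt1[n] * (Xc[n][0] ** 2 + Xc[n][1] ** 2) for n in range(N))
        scale = trAR / yy
        rm = rot(theta, muy)
        t = (mux[0] - scale * rm[0], mux[1] - scale * rm[1])
        new_sigma2 = (xx - scale * trAR) / (Np * D)
        if new_sigma2 <= tol or abs(sigma2 - new_sigma2) <= tol:
            break
        sigma2 = new_sigma2
    return scale, theta, t


# Demo: recover a known scale, rotation and shift applied to 15 random points
rng = random.Random(0)
Y = [(rng.uniform(0, 10), rng.uniform(0, 10)) for _ in range(15)]
X = [(1.2 * q[0] + 0.5, 1.2 * q[1] - 0.3) for q in (rot(0.25, y) for y in Y)]
print(cpd_rigid_2d(X, Y))
```

With 10,000 dots on each side, that correspondence matrix holds 10^8 entries per iteration, while a 30 fps target leaves only about 33 ms per frame for every iteration combined. Practical CPD implementations use vectorized or GPU code and accelerations such as the fast Gauss transform; the plain loops here are only meant to make the structure visible.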

On the other hand, these things are incredibly tiny, and metasurfaces can be easily and cheaply manufactured at enormous scale. The team printed one onto the curved surface of a pair of safety glasses. It's so small you'd barely distinguish it from a speck of dust. And that's the potential here: metasurface-based depth-mapping devices can be incredibly tiny and easily integrated into the design of a wide range of objects, with their field of view tuned to whatever angle makes sense for the application.

The team sees huge potential for these devices in mobile devices, robotics, autonomous cars and VR/AR glasses. Very neat stuff!

The research is open access in the journal Nature Communications.

Source: POSTECH